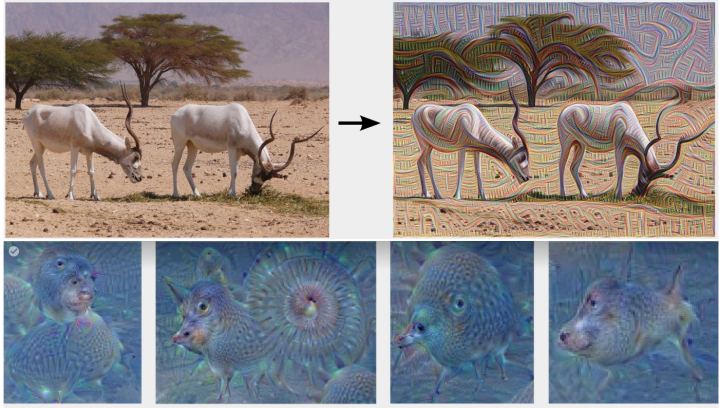

When Google researchers isolated a single stage of their image-recognition network and let that stage alone enhance an image, they produced some particularly groovy pictures. Google’s Alexander Mordvintsev, who calls the technique inceptionism, explained, “Instead of exactly prescribing which feature we want the network to amplify, we can also let the network make that decision. In this case we simply feed the network an arbitrary image or photo and let the network analyze the picture. We then pick a layer and ask the network to enhance whatever it detected. Each layer of the network deals with features at a different level of abstraction, so the complexity of features we generate depends on which layer we choose to enhance. For example, lower layers tend to produce strokes or simple ornament-like patterns, because those layers are sensitive to basic features such as edges and their orientations.”

Essentially, singling out one recognition layer magnifies whatever the network thinks an image vaguely resembles. Wrote Mordvintsev, “We ask the network: ‘Whatever you see there, I want more of it!’ This creates a feedback loop: if a cloud looks a little bit like a bird, the network will make it look more like a bird. This in turn will make the network recognize the bird even more strongly on the next pass and so forth, until a highly detailed bird appears, seemingly out of nowhere.”
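To make that feedback loop concrete, here is a minimal toy sketch in plain Python. It is not Google's code: the one-dimensional "image", the single edge-detecting "layer," and every function name are assumptions made for illustration. It measures how strongly the layer responds to the input and then repeatedly nudges the input in the direction that increases that response (gradient ascent on the input), which is the general idea the quote describes.

```python
# Toy sketch of the "whatever you see there, I want more of it" loop.
# All names are hypothetical; a real system would use a deep network on 2-D images.

EDGE_KERNEL = [-1.0, 0.0, 1.0]  # assumed stand-in for a low-level edge feature


def layer_activations(signal, kernel=EDGE_KERNEL):
    """Slide the kernel over the signal (valid cross-correlation): one linear layer."""
    k = len(kernel)
    return [
        sum(kernel[j] * signal[i + j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def objective(signal, kernel=EDGE_KERNEL):
    """How strongly the layer responds: half the sum of squared activations."""
    return 0.5 * sum(a * a for a in layer_activations(signal, kernel))


def objective_gradient(signal, kernel=EDGE_KERNEL):
    """Gradient of the objective with respect to each input sample."""
    acts = layer_activations(signal, kernel)
    grad = [0.0] * len(signal)
    for i, a in enumerate(acts):
        for j, w in enumerate(kernel):
            grad[i + j] += a * w
    return grad


def enhance(signal, steps=20, step_size=0.1, kernel=EDGE_KERNEL):
    """Repeatedly change the input so the layer sees more of what it already sees."""
    current = list(signal)
    history = [objective(current, kernel)]
    for _ in range(steps):
        grad = objective_gradient(current, kernel)
        current = [x + step_size * g for x, g in zip(current, grad)]
        history.append(objective(current, kernel))
    return current, history


# A barely visible step edge: the layer responds only weakly at first.
faint_edge = [0.0, 0.0, 0.0, 0.1, 0.1, 0.1]
enhanced, history = enhance(faint_edge)
print(f"layer response before: {history[0]:.4f}, after: {history[-1]:.4f}")
```

Each pass strengthens the faint edge, so the layer responds more strongly on the next pass. Swapping in a different kernel stands in, very loosely, for picking a different layer to enhance.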

Beyond creating incredibly trippy images, Google believes this deconstructed process has implications well beyond the pictures themselves. Concluded the research team, “The techniques presented here help us understand and visualize how neural networks are able to carry out difficult classification tasks, improve network architecture, and check what the network has learned during training. It also makes us wonder whether neural networks could become a tool for artists — a new way to remix visual concepts — or perhaps even shed a little light on the roots of the creative process in general.”
