Professor Harpreet Sareen has a vision for a world in which the plants in our gardens can watch what we’re doing, and Venus flytraps can be used to display messages at the click of a computer mouse. It sounds crazy, but it really isn’t. And Sareen, currently employed at MIT’s world-famous Media Lab and the equally renowned New School’s Parsons School of Design, has the proofs of concept to back it up. In fact, he’s spent the past several years of his career making them as impressive as possible.

“Plants are naturally occurring interaction devices in nature,” Sareen told Digital Trends. “They change their color, orientation, moisture, position of flowers, and leaves, among many other responses.”

Such interactions may be subtle compared with the ostentatious responses we get when we interact with our everyday devices. That doesn’t make them any less useful, though. Sareen is convinced that a hybrid of the natural and artificial worlds, each working alongside the other, has the potential to shape the future of technology as we know it.

He refers to this unusual but fast-developing field as Cyborg Botany.

The cyborg botanist

Ask almost anyone, and they will probably summon up a very similar image when talking about the dream of the smart home. It is, by and large, an indoor world (because what self-respecting geek goes outside?) in which every object possesses not just its own in-built intelligence, but also the ability to talk to every other object it shares a physical space with.

Wherever we go in our smart homes, the goal is for our movement to be tracked so that an assortment of glowing smart displays, “always listening” speakers, smart sensors, and whatever else can spring to life at just the right time with the right, contextually aware information. Sure, our homes will fill with new gadgets, but the downsides, like the cost of replacing our “dumb” household objects with their “smart” counterparts, are surely a small price to pay. Right?

Sareen has a slightly different idea. He dreams of a world in which this same interconnected functionality can be achieved, only instead of using cold, hard smart gadgets churned out by some industrial production line in Shenzhen, China, engineers will find ways to harness the natural intelligence of already growing trees, herbs, bushes, grasses, and vines. It’s a vision reminiscent of Richard Brautigan’s beloved 1967 hippy-tech poem, “All Watched Over by Machines of Loving Grace,” which describes a utopia where the natural and, well, unnatural worlds can sing together in perfect harmony: “I like to think / (right now, please!) / of a cybernetic forest / filled with pines and electronics / where deer stroll peacefully / past computers / as if they were flowers / with spinning blossoms.”

Harpreet Sareen’s work started out as an attempt to better understand the sensing mechanisms inside plants. At first, he focused on one-way interaction by using plants’ naturally occurring electrochemical signals to drive robots. Tethered by wires and silver electrodes, one such plant-robot hybrid was able to navigate toward patches of light in response to light shining on its leaves.

Now he wants to go further. Rather than just listening to signals coming from the plants themselves, Sareen seeks to make this relationship “bi-directional.” That means not just getting machines to move in response to plants, but getting plants to act in response to machines, too. It’s not quite weaponizing flora, but it’s certainly turning it into what he terms “interactive units.”

“Planta Digitalis” and other stories

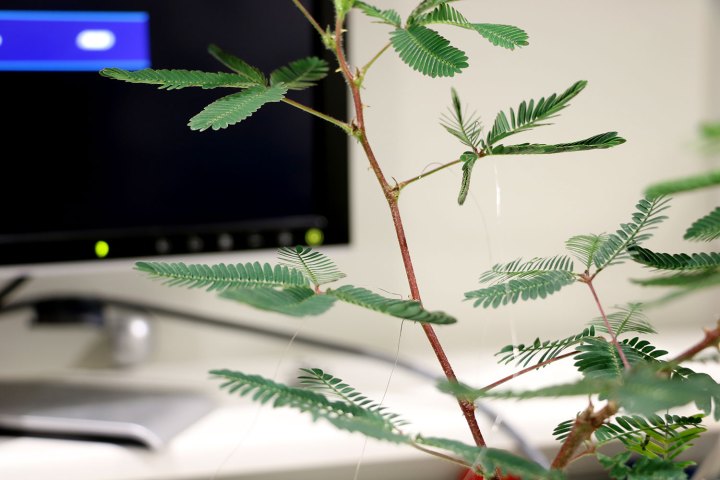

For his latest project, Sareen has created two systems in which external inputs can be used to control plants. The first of these is something he calls Phytoactuators: plant actuation triggered through software. By attaching special electrodes to parts of Venus flytrap and Mimosa plants, it’s possible to control aspects of their movement through a software interface. When a user clicks on an electrode’s location in an on-screen representation of the plant, the corresponding electrode is stimulated, triggering motion.

The result is a kind of primitive display, able to present information in a way we’re not yet accustomed to. No, you probably won’t be willing to ditch your fancy 5K monitor for a room full of snapdragons. But this could nonetheless provide an alternative (and far less intrusive) way to indicate changes between certain states. For example, imagine being able to glance at the plants in your room for an indication of weather conditions before stepping outside, or to find out whether a new delivery has turned up. Sareen describes this as a “soft notification” system.

His second creation is the wonderfully titled Planta Digitalis. The goal with this work is to turn plants into antennas or motion sensors. This is achieved by implanting conductive wires inside their stems and leaves and connecting them to a high-sampling-speed network analyzer. Combined with a frequency sweep, the in-vivo wires give the plants antenna-like electromagnetic properties.

With this approach, Sareen imagines that plants could be made to recognize gestures or carry out motion tracking. Picture waving to turn the lights on or off as you enter or leave a room. How about plants positioned at the entrance to a garage that reveal whether or not it is full? Or what if certain smart plants could be dispersed through a woodland to help biologists keep track of passing wildlife?

“The most exciting use cases to me are the ones that push the boundaries of electronics from currently being outside to inside the plants,” Sareen said. “We currently install motion detectors in and outside our homes. But if plants had in-vivo motion detectors and a digital connection, they could potentially be replacing antenna based devices in homes, or even outside in forests.”

Technology vs. nature?

Sareen’s work, as far out as it may be, is interesting because it celebrates a relationship between the technological and natural worlds that is often ignored. Technology and nature are routinely cast as fundamentally opposed to one another. The artificial is viewed as the antithesis of the natural, a contrast highlighted whenever we take a walk in the wilderness and come across a candy wrapper, plastic bottle, or other piece of detritus as a reminder of civilization.

From humankind’s earliest tools to the latest cutting-edge efforts to extend our abilities and life spans through cyborg implants and medical advances, there’s a tendency to view advances as a fight against the natural world. In his book How to Make a Human Being, the scientific philosopher Christopher Potter writes that, “Humans never were part of nature. We were always part of technology.”

But there is another way to view the relationship between technology and ecology, or the machine world and the natural world. In Wired founding editor Kevin Kelly’s 1994 book Out of Control: The New Biology of Machines, Social Systems and the Economic World, the author writes: “Seen in the right light, a robust industrial ecosystem is an extension of the biosphere. As a splinter of wood fiber travels from tree to wood chip to newspaper and then from paper to compost to tree again, the fiber easily slips in and out of the natural and industrial spheres of a larger global megasystem. Stuff circles from the biosphere into the technosphere and back again in a grand bionic ecology of nature and artefact.”

Of course, not everything is as compostable (and, thus, as circular) as a wood fiber, as the millions of tons of electronic waste produced each year attest. But the idea that the natural world offers a model for technology to follow has a history of its own, dating back to Norbert Wiener, the father of the field known as “cybernetics.” Cybernetics described the world in terms of continuous feedback loops of information in both living systems and machines, encompassing every aspect of life as we know it. It was a concept that excited technologists and naturalists alike, and it made Wiener a surprise celebrity among the general public.

Sun shines in the cybernetic pines

“Norbert Wiener’s seminal work has really influenced me in shaping this work and its future goals,” Sareen said. “We already design augmentations for humans through devices, correcting our body systems and connecting our physiology to the digital world. I am looking broadly at plant ecology to analyze such micro-capabilities inside plants. A plant absorbing water acts as a natural motor, their photosynthesis as optoelectronics, their growth as a 3D printer, and so on. If we were to install our designed capabilities in connection to these physiological components, we would perhaps be making components that are not only functional, but can self-repair, self-power, self-fabricate with plants.”

Ultimately, Sareen hopes his work can help open people’s eyes to the potential of harnessing “the fabric of nature” alongside the artificial devices that we create. “Changing our thought processes from completely artificial fabrication to a hybridization can lead us to an active forefront of designing with nature,” he said.

The outcome could be a new era of convergent design, going well beyond what even the most advanced biomimicry projects are working with. “All of these methods are currently within research realms,” he concluded. “It’ll still be a few years before we can start putting them all together as components that could come as soft circuits assembling inside plants. [However,] Cyborg Botany intends to communicate that vision and show the possibilities of such research.”

Things may have taken a little longer than Richard Brautigan hoped, but, thanks to Harpreet Sareen, we may very well soon be watched over by machines of loving grace after all. Now if only someone would get started on that cybernetic forest…