The “uncanny valley” is often invoked to describe the unsettling effect of artificial intelligence (A.I.) that mimics human behavior almost, but not quite, convincingly. Nvidia’s new voice A.I., however, is much more realistic than anything we’ve heard before. Built from a combination of A.I. and a human reference recording, the synthetic voice sounds almost identical to a real one.

In a video, Nvidia’s in-house creative team describes how it achieves accurate voice synthesis. The team compares speech to music: both feature complex, nuanced rhythms, pitches, and timbres that aren’t easy to replicate. Nvidia is building tools to reproduce these intricacies with A.I.

The company unveiled its latest advancements at Interspeech, a technical conference dedicated to research on speech processing technologies. Nvidia’s voice tools are available through its open-source NeMo toolkit, and they’re optimized to run on Nvidia GPUs (according to Nvidia, of course).

The A.I. voice isn’t just a demo, either. Nvidia has switched to an A.I. narrator for its I Am A.I. video series, which shows the impact of machine learning across various industries. Nvidia can now use an artificial voice as a narrator, free of the audio artifacts that usually come with synthesized voices.

Nvidia tackles A.I. voices in two ways. The first is to train a text-to-speech model on recordings of a human speaker. After enough training, the model can take any text input and convert it into speech. The second method is voice conversion. In this case, the program takes an audio file of a human speaking and converts the voice into a synthetic one, matching the original’s pattern and intonation.

For practical applications, Nvidia points to the countless virtual assistants that handle customer service lines, as well as assistants like Alexa and Google Assistant that run on smart devices. Nvidia says the technology reaches much further, however. “Text-to-speech can be used in gaming, to aid individuals with vocal disabilities or to help users translate between languages in their own voice,” Nvidia’s blog post reads.

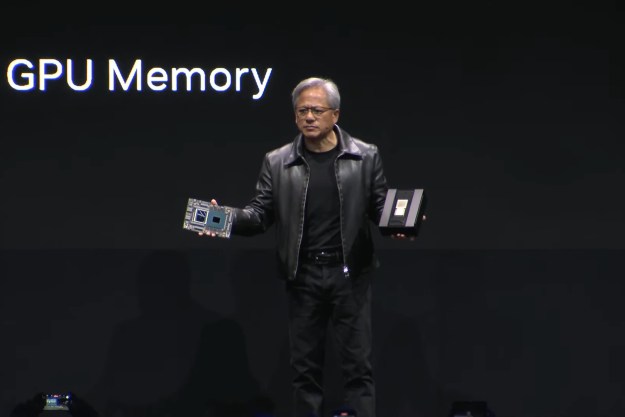

Nvidia is developing a knack for tricking people using A.I. The company recently went into detail about how it created a virtual CEO for its GPU Technology Conference, aided in part by its own Omniverse software.
