Predictive policing was supposed to transform the way policing was carried out, ushering us into a world of smart law enforcement in which bias was removed and police would be able to respond to the data, not to hunches. But a decade after most of us first heard the term “predictive policing,” it seems clear that it has not worked. In the face of a public backlash, use of the technology has declined significantly compared with just a few years ago.

In April this year, Los Angeles — which, according to the LA Times, “pioneered predicting crime with data” — cut funding for its predictive policing program, blaming the cost. “That is a hard decision,” Police Chief Michel Moore told the LA Times. “It’s a strategy we used, but the cost projections of hundreds of thousands of dollars to spend on that right now versus finding that money and directing that money to other more central activities is what I have to do.”

What went wrong? How could something advertised as “smart” technology wind up further entrenching biases and discrimination? And is the dream of predictive policing one that could be tweaked with the right algorithm — or a dead-end in a fairer society that’s currently grappling with how police should operate?

The promise of predictive policing

Predictive policing in its current form dates back around one decade to a 2009 paper by psychologist Colleen McCue and Los Angeles police chief Charlie Beck, titled “Predictive Policing: What Can We Learn from Walmart and Amazon about Fighting Crime in a Recession?” In the paper, they seized upon the way that big data was being used by major retailers to help uncover patterns in past customer behavior that could be used to predict future behavior. Thanks to advances in both computing and data-gathering, McCue and Beck suggested that it was possible to gather and analyze crime data in real time. This data could then be used to anticipate, prevent, and respond more effectively to crimes that had not yet taken place.

In the years since, predictive policing has transitioned from a throwaway idea to a reality in many parts of the United States, along with the rest of the world. In the process, it has set out to change policing from a reactive force into a proactive one, drawing on some of the breakthroughs in data-driven technology that make it possible to spot patterns in real time and act upon them.

“There are two main forms of predictive policing,” Andrew Ferguson, professor of law at the University of the District of Columbia David A. Clarke School of Law and author of The Rise of Big Data Policing: Surveillance, Race, and the Future of Law Enforcement, told Digital Trends. “[These are] place-based predictive policing and person-based predictive policing.”

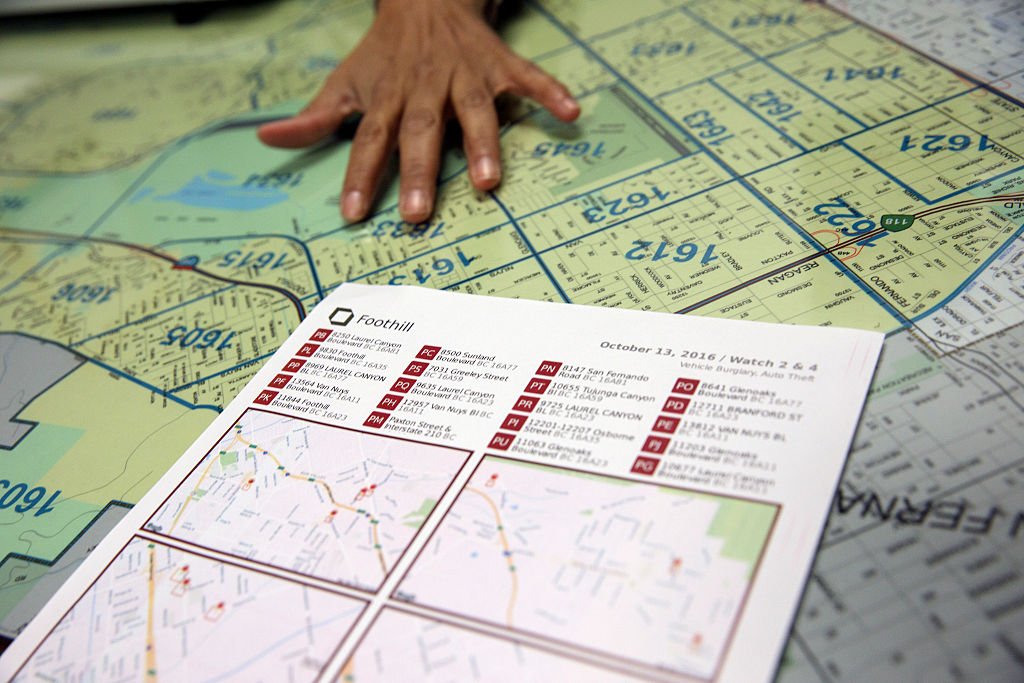

In both cases, the predictive policing systems assign a risk score to the person or place in question, which encourages police to follow up at a given interval. The first of these approaches, place-based predictive policing, focuses predominantly on police patrols. It involves the use of crime mapping and analytics about the likely places of future crimes, based on previous statistics.

The second approach focuses on predicting the likelihood that an individual poses a potential future risk. For example, in 2013, a Chicago Police commander was sent to the home of 22-year-old Robert McDaniel, who had been flagged by an algorithm as a potential risk or perpetrator of gun violence in inner-city Chicago. The “heat list” the algorithm helped assemble looked for patterns that might be able to predict future offenders or victims, even if they themselves had not done anything to warrant this scrutiny beyond conforming to a profile.

As the Chicago Tribune noted: “The strategy calls for warning those on the heat list individually that further criminal activity, even for the most petty offenses, will result in the full force of the law being brought down on them.”

The dream of predictive policing was that, by acting upon quantifiable data, it would make policing not only more efficient, but also less prone to guesswork and, as a result, bias. It would, proponents claimed, change policing for the better, and usher in a new era of smart policing. However, from almost the very start, predictive policing has had staunch critics. They argue that, rather than helping to get rid of problems like racism and other systemic biases, predictive policing may actually help entrench them. And it’s hard to argue they don’t have a point.

Discriminatory algorithms

The idea that machine learning-based predictive policing systems can learn to discriminate based on factors such as race is nothing new. Machine-learning tools are trained with massive gobs of data. And, so long as that data is gathered by a system in which race continues to be an overwhelming factor, that can lead to discrimination.

As Renata M. O’Donnell writes in a 2019 paper, titled “Challenging Racist Predictive Policing Algorithms Under the Equal Protection Clause,” machine learning algorithms are learning from data derived from a justice system in which “Black Americans are incarcerated in state prisons at a rate that is 5.1 times the imprisonment of whites, and one of every three Black men born today can expect to go to prison in his lifetime if current trends continue.”

“Data isn’t objective,” Ferguson told Digital Trends. “It’s just us reduced to binary code. Data-driven systems that operate in the real world are no more objective, fair, or unbiased than the real world. If your real world is structurally unequal or racially discriminatory, a data-driven system will mirror those societal inequities. The inputs going in are tainted by bias. The analysis is tainted by bias. And the mechanisms of police authority don’t change just because there is technology guiding the systems.”

Ferguson gives the example of arrests as one seemingly objective factor in predicting risk. However, arrests will be skewed by the allocation of police resources (such as where they patrol) and the types of crime that typically warrant arrests. This is just one illustration of potentially problematic data.

The perils of dirty data

Missing and incorrect data is sometimes referred to in data mining as “dirty data.” A 2019 paper by researchers from the A.I. Now Institute at New York University expands this term to also refer to data that is influenced by corrupt, biased, and unlawful practices, whether that be intentionally manipulated data or data that’s distorted by individual and societal biases. It could, for instance, include data that is generated from the arrest of an innocent person who has had evidence planted on them or who is otherwise falsely accused.

There is a certain irony in the fact that, over the past decades, the demands of the data society, in which everything is about quantification and cast-iron numerical targets, have just led to a whole lot of … well, really bad data. The HBO series The Wire showcased the real-world phenomenon of “juking the stats,” and the years since the show went off the air have offered up plenty of examples of actual systemic data manipulation, faked police reports, and unconstitutional practices that have sent innocent people to jail.

Bad data that allows people in power to artificially hit targets is one thing. But combine that with algorithms and predictive models that use this as their basis for modeling the world and you potentially get something a whole lot worse.

Researchers have demonstrated how questionable crime data plugged into predictive policing algorithms can create what is referred to as “runaway feedback loops,” in which police are repeatedly sent to the same neighborhoods regardless of the true crime rate. One of the co-authors of that paper, computer scientist Suresh Venkatasubramanian, says that machine learning models can build in faulty assumptions through their modeling. Like the old saying about how, for the person with a hammer, every problem looks like a nail, these systems model only certain elements of a problem and imagine only one possible outcome.
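
To make the feedback loop concrete, here is a deliberately simplified sketch in Python. It is not the researchers’ model. It assumes two hypothetical neighborhoods, a single daily patrol that always goes wherever recorded crime is highest, and crime that only enters the records where the patrol happens to be. The function name, rates, and starting counts are all invented for illustration.

```python
def simulate_patrols(true_rates, initial_records, days):
    """Toy feedback loop: one patrol per day goes to the area with the
    most recorded crime, and crime is only recorded where the patrol is.

    All inputs are hypothetical; this illustrates the mechanism only.
    """
    records = list(initial_records)
    visits = [0] * len(records)
    for _ in range(days):
        # Send the patrol to the area with the highest recorded count so far.
        target = max(range(len(records)), key=lambda i: records[i])
        visits[target] += 1
        # Only the patrolled area gains new records (expected incidents per day).
        records[target] += true_rates[target]
    return visits, records


# Area 1 has twice the true crime rate of area 0, but area 0 starts
# with a single extra recorded incident.
visits, records = simulate_patrols(
    true_rates=[0.25, 0.5], initial_records=[11, 10], days=100
)
print(visits)   # patrol days per area
print(records)  # recorded incidents per area
```

Under these assumptions, every one of the 100 patrols goes to area 0, even though area 1 has the higher true rate. The records end up reflecting where police looked rather than where crime occurred, and each new record justifies the next visit.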

“[Something that] doesn’t get addressed in these models is to what extent are you modeling the fact that throwing more cops into an area can actually lower the quality of life for people who live there?” Venkatasubramanian, a professor in the School of Computing at the University of Utah, told Digital Trends. “We assume more cops is a better thing. But as we’re seeing right now, having more police is not necessarily a good thing. It can actually make things worse. In not one model that I’ve ever seen has anyone ever asked what the cost is of putting more police into an area.”

The uncertain future of predictive policing

Those working in predictive policing sometimes unironically use the term “Minority Report” to refer to the kind of prediction they are doing. The term is frequently invoked as a reference to the 2002 movie of the same name, which in turn was loosely based on a 1956 short story by Philip K. Dick. In Minority Report, a special PreCrime police department apprehends criminals based on foreknowledge of crimes that are going to be committed in the future. These forecasts are provided by three psychics called “precogs.”

But the twist in Minority Report is that the predictions are not always accurate. Dissenting visions by one of the precogs provide an alternate view of the future, which is suppressed for fear of making the system appear untrustworthy.

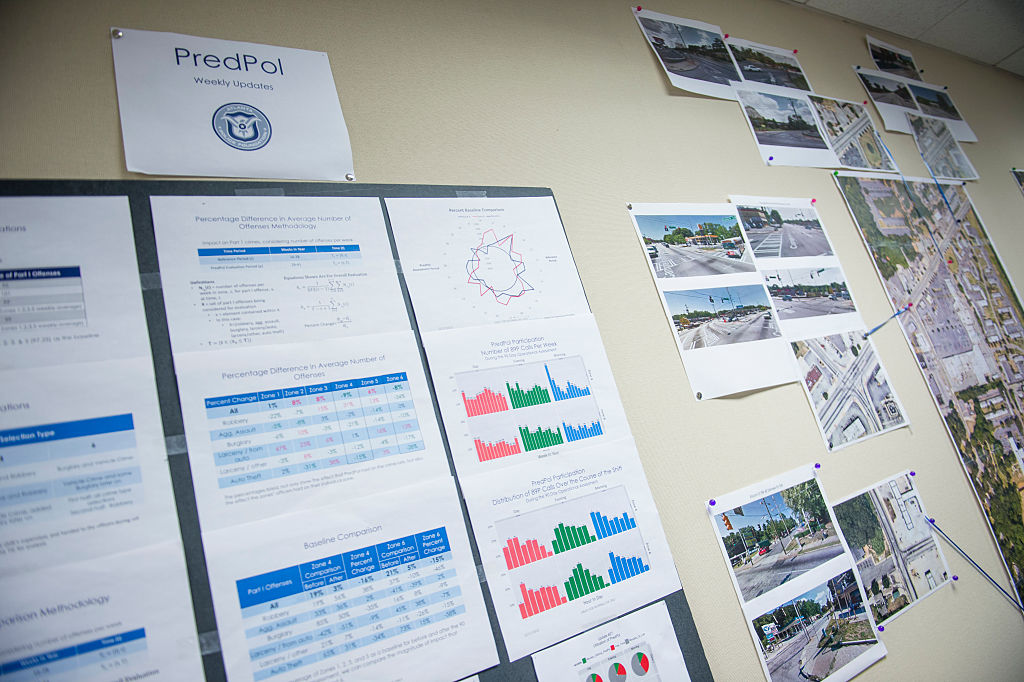

Right now, predictive policing is facing its own uncertain future. Alongside new technologies such as facial recognition, the technology available to law enforcement for possible usage has never been more powerful. At the same time, awareness of the use of predictive policing has caused a public backlash that may actually have helped quash it. Ferguson told Digital Trends that the use of predictive policing tools has been on a “downswing” for the past few years.

“At its zenith, [place-based predictive policing] was in over 60 major cities and growing, but as a result of successful community organizing, it has largely been reduced and/or replaced with other forms of data-driven analytics,” he said. “In short, the term predictive policing grew toxic, and police departments learned to rename what they were doing with data. Person-based predictive policing had a steeper fall. The two main cities invested in its creation, Chicago and Los Angeles, backed off their person-based strategies after sharp community criticism and devastating internal audits that show the tactics didn’t work. Not only were the predictive lists flawed, they were also ineffective.”

The wrong tools for the job?

However, Rashida Richardson, Director of Policy Research at the A.I. Now Institute, said that there is too much opacity about the use of this technology. “We still don’t know due to the lack of transparency regarding government acquisition of technology and many loopholes in existing procurement procedures that may shield certain technology purchases from public scrutiny,” she said. She gives the example of technology that might be given to a police department for free or purchased by a third party. “We know from research like mine and media reporting that many of the largest police departments in the U.S. have used the technology at some point, but there are also many small police departments that are using it, or have used it for limited periods of time.”

Given the current questioning about the role of police, will there be a temptation to re-embrace predictive policing as a tool for data-driven decision making, perhaps under less dystopian sci-fi branding? Such a resurgence is possible. But Venkatasubramanian is highly skeptical that machine learning, as currently practiced, is the right tool for the job.

“The entirety of machine learning and its success in modern society is based on the premise that, no matter what the actual problem, it ultimately boils down to collect data, build a model, predict outcome, and you don’t have to worry about the domain,” he said. “You can write the same code and apply it in 100 different places. That’s the promise of abstraction and portability. The problem is that when we use what people call socio-technical systems, where you have humans and technology intermeshed in complicated ways, you cannot do this. You can’t just plug in a piece and expect it to work. Because [there are] ripple effects with putting that piece in and the fact that there are different players with different agendas in such a system, and they subvert the system to their own needs in different ways. All of these things have to be factored in when you’re talking about effectiveness. Yes, you can say in the abstract that everything will work fine, but there is no abstract. There is only the context you’re working in.”