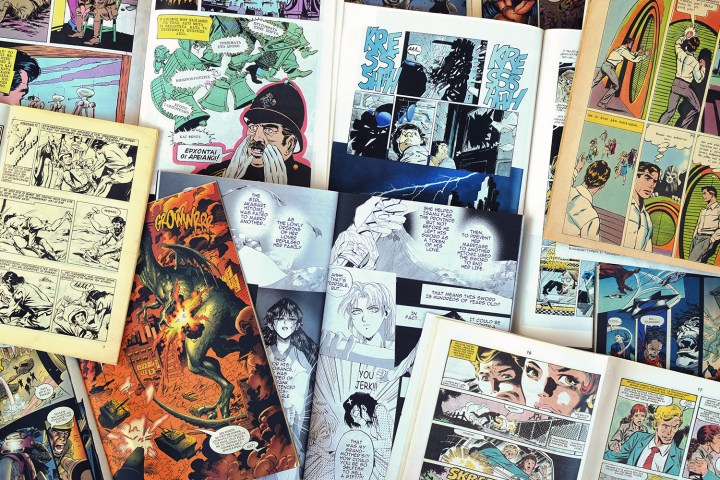

More recently, however, the bar has been raised, and a new research project carried out at the University of Maryland and the University of Colorado has another recognition task in its sights: whether an AI can read comic books.

In some ways, this is deeply ironic. For a long time, comics were dismissed as a junk medium for kids and barely literate adults. That perception has shifted in recent decades, but this work would still be enough to have Fredric Wertham (the German-American psychiatrist whose 1954 book *Seduction of the Innocent* came close to derailing the comics industry) turning in his grave. Simply put: the ability to read and understand comics may turn out to be the next big benchmark for AI.

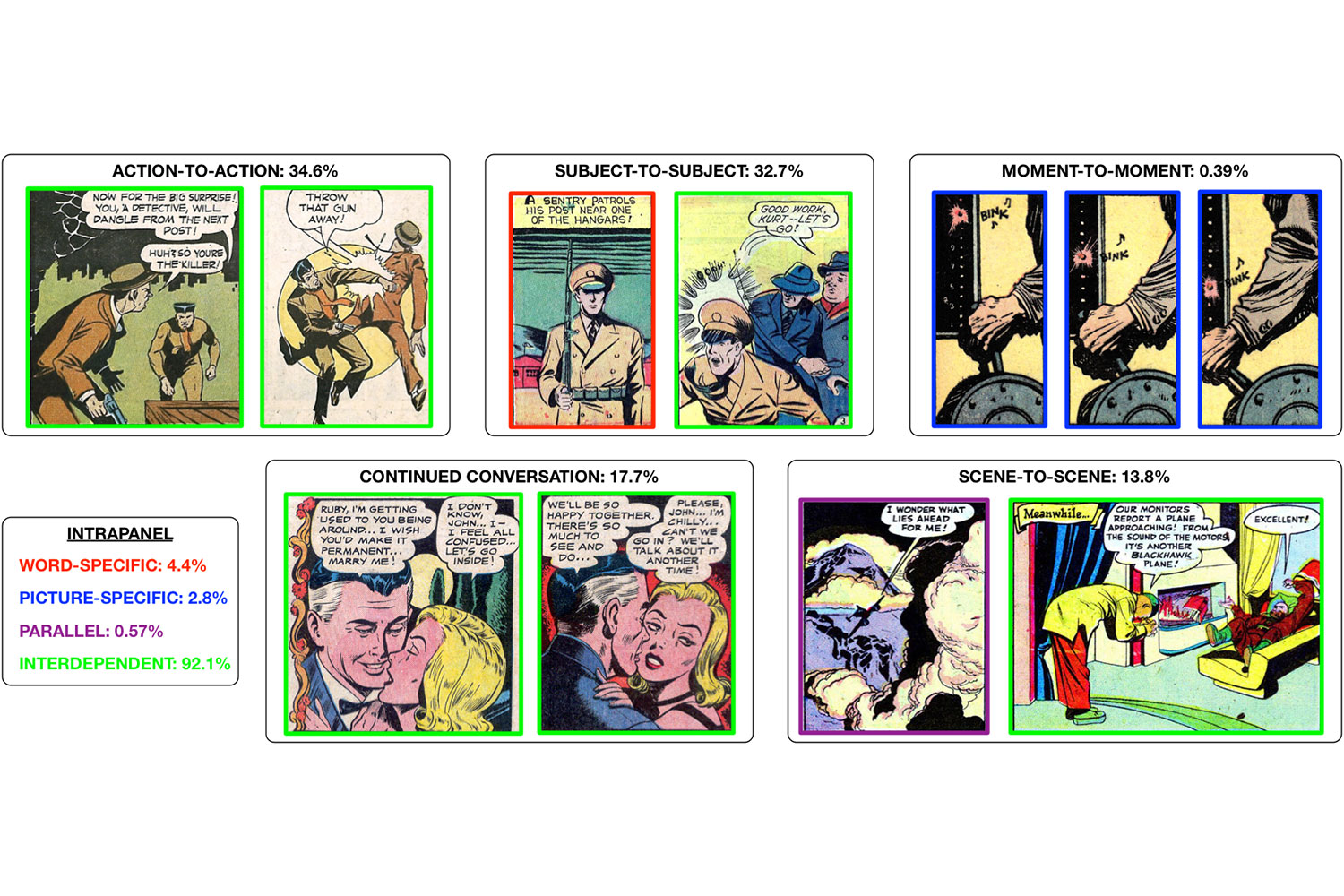

As it happens, there's nothing straightforward about comic books. Although reading them is a relatively simple task for us humans, juggling images and text, following a narrative told through deceptively simple graphics, and filling in the story gaps between panels is… well, complex stuff for computers.

“The task requires a lot of common sense and inference,” Mohit Iyyer, a fifth-year Ph.D. student in the Department of Computer Science at the University of Maryland, College Park, told Digital Trends. “There can be drastic shifts in scene, camera, and subject from one panel to the next. For example, if panel 1 shows a woman walking towards a car with keys in her hand and panel 2 shows the same car driving on a highway, we as readers would infer that in between the two panels, the woman got into the car, started it, and drove onto the highway. But we can only connect these two panels because we are familiar with how cars work; a computer needs lots of data to be able to make the same types of inferences.”

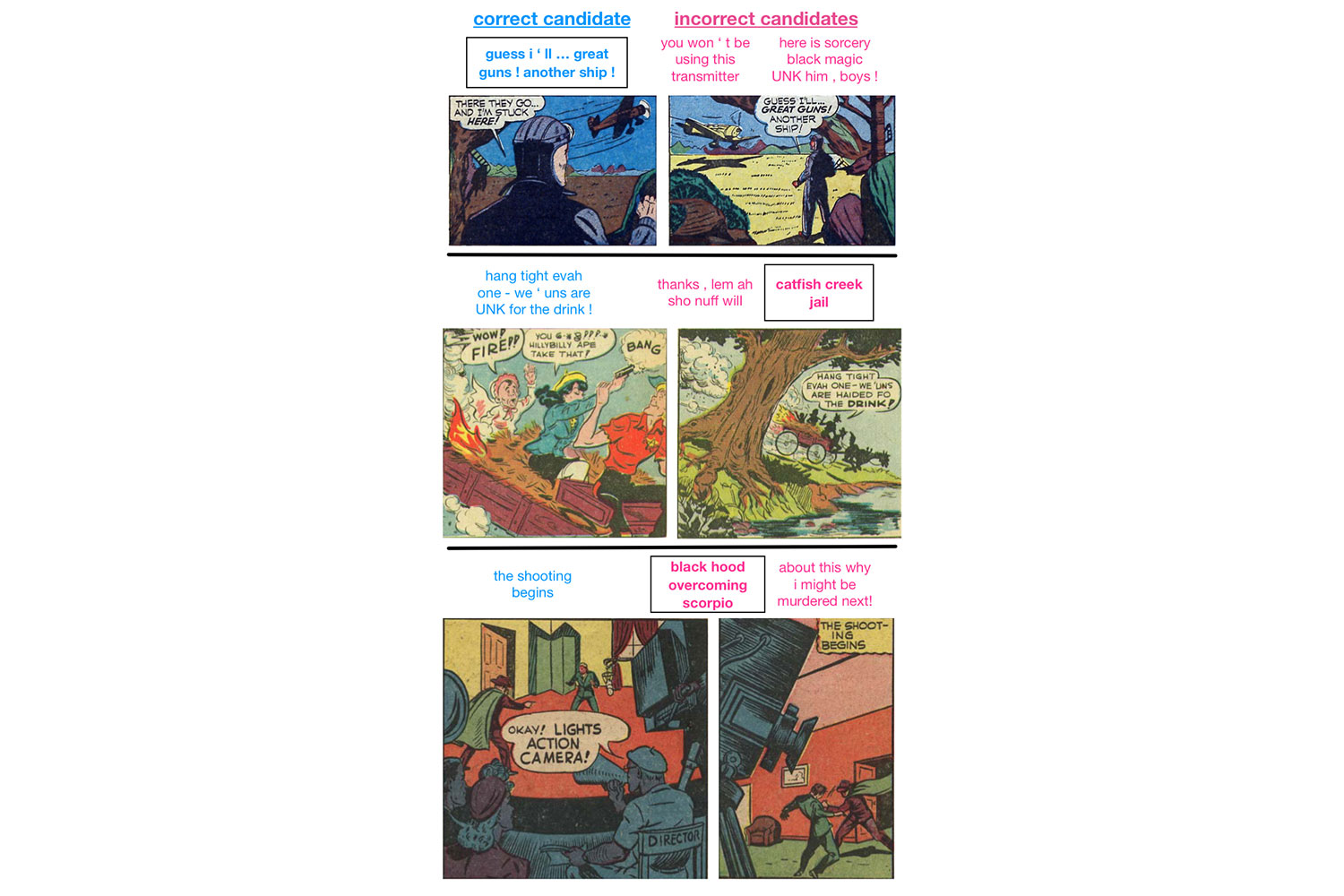

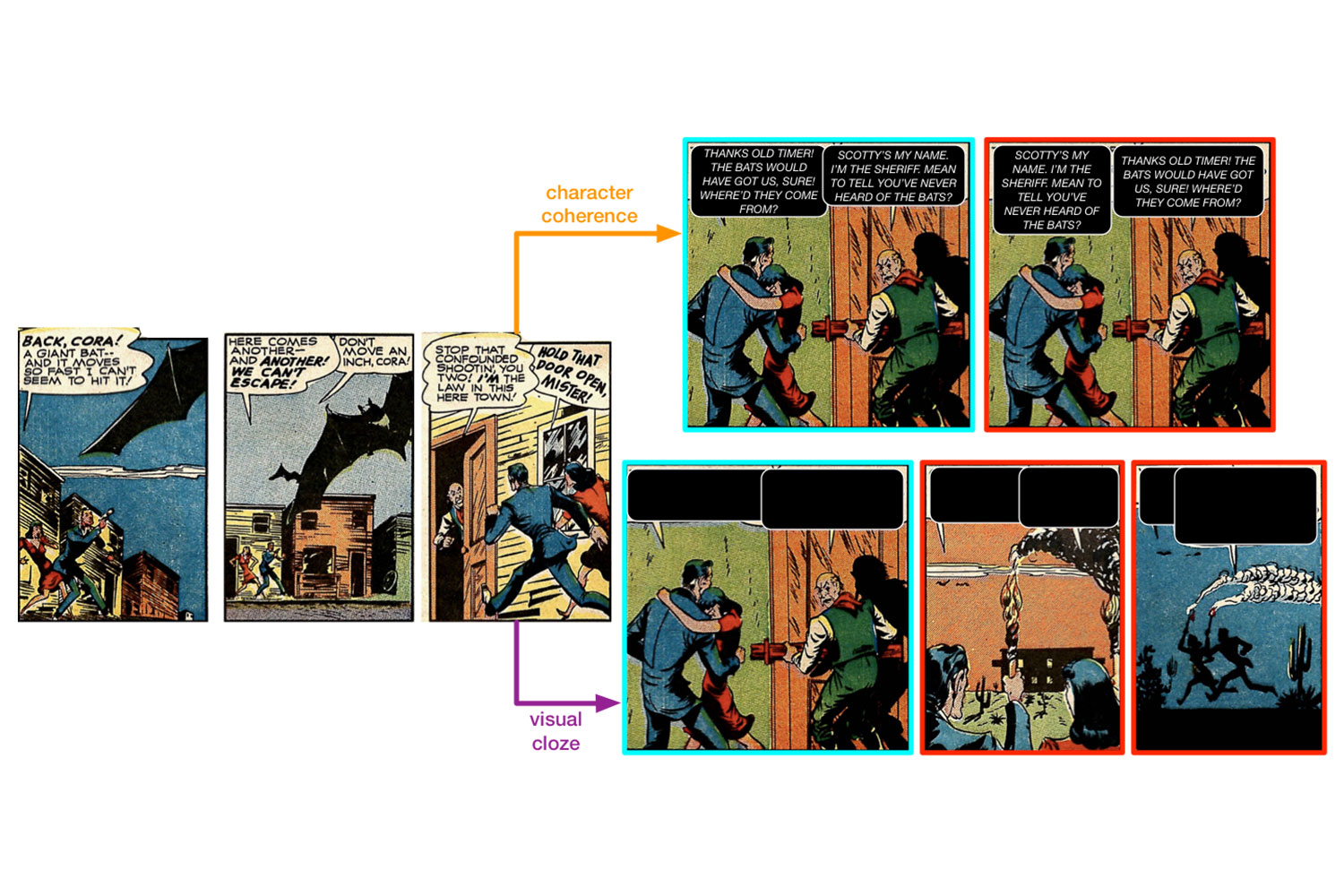

For their study, Iyyer and his fellow researchers tested whether a machine can understand a sequence of panels by checking whether it could predict what happens next. Specifically, the AI was shown three preceding panels and asked to pick the next dialog or action from three multiple-choice options.
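To make that test format concrete, here is a minimal Python sketch of a three-way multiple-choice evaluation. It is not the researchers' code: the `word_overlap` scorer is a hypothetical stand-in for a trained model (it simply counts words shared between the preceding panels and each candidate), and the example dialog is invented. With three options, random guessing would be right about a third of the time, which is the floor any model has to beat.

```python
from typing import Callable, List, Tuple


def word_overlap(context: List[str], candidate: str) -> float:
    """Hypothetical stand-in scorer: number of distinct words shared by the context and the candidate."""
    context_words = {w.lower().strip(".,!?") for text in context for w in text.split()}
    candidate_words = {w.lower().strip(".,!?") for w in candidate.split()}
    return float(len(context_words & candidate_words))


def predict_next(context: List[str], candidates: List[str],
                 score: Callable[[List[str], str], float] = word_overlap) -> int:
    """Return the index of the highest-scoring candidate (ties go to the earliest one)."""
    scores = [score(context, c) for c in candidates]
    return max(range(len(candidates)), key=lambda i: scores[i])


def accuracy(examples: List[Tuple[List[str], List[str], int]],
             score: Callable[[List[str], str], float] = word_overlap) -> float:
    """Fraction of (context, candidates, answer_index) examples answered correctly."""
    correct = sum(predict_next(ctx, cands, score) == answer for ctx, cands, answer in examples)
    return correct / len(examples)


# Invented example: dialog from three preceding panels, plus three options for the next one.
context = ["Where are my car keys?", "Found the keys by the door!", "Time to drive to the city."]
candidates = ["I love drinking tea.", "The car keys work, let's drive!", "Look, a dragon!"]
print(predict_next(context, candidates))  # → 1
```

A real model would replace `word_overlap` with a learned score, but the evaluation loop (score every option, pick the best, count how often it matches the answer) stays the same.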

As if that weren't enough of a challenge, the researchers also added an extra task: matching the right dialog to its speaker in panels with multiple dialog boxes.

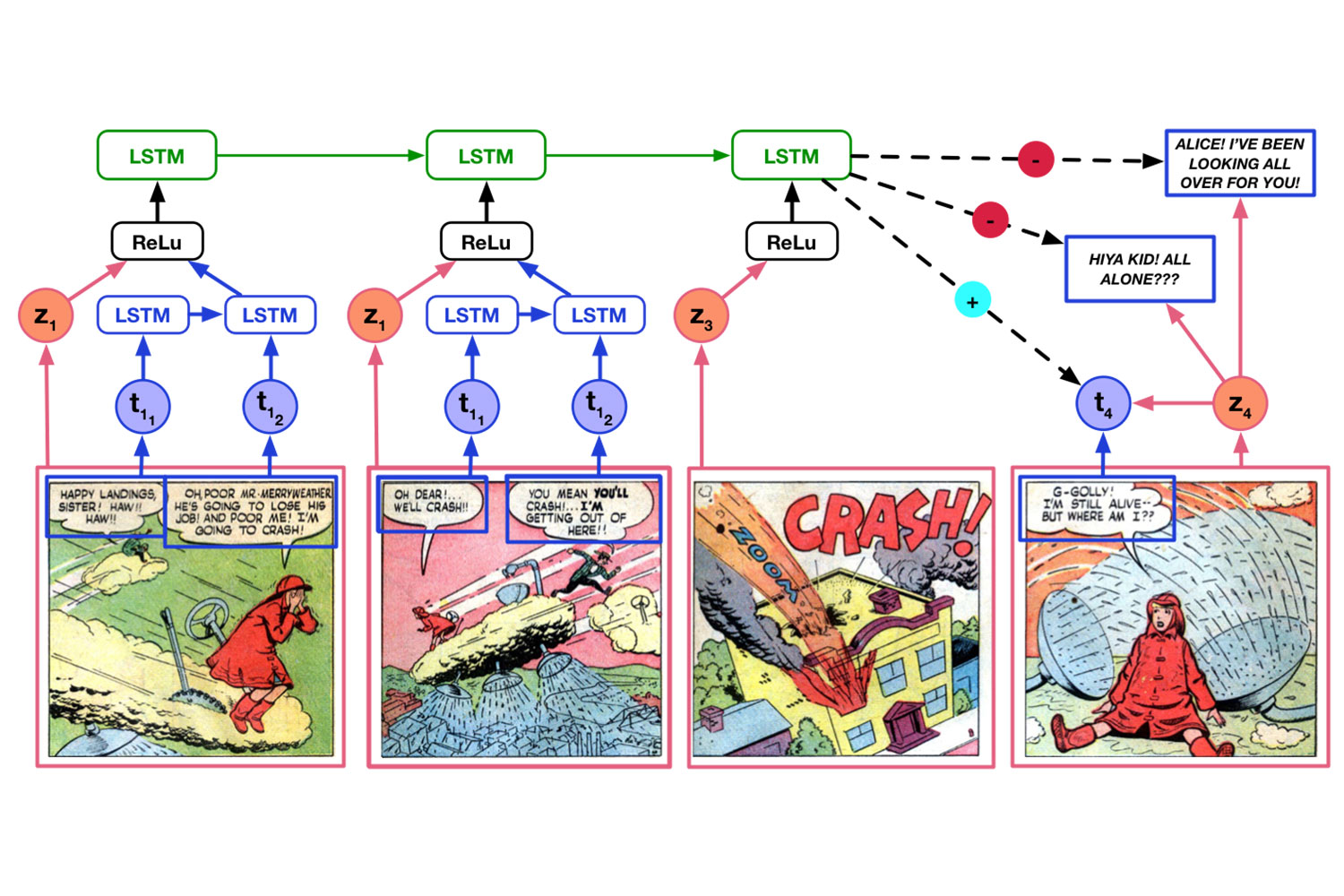

“To proceed with this task, we first needed to create a fairly large dataset,” fellow researcher Varun Manjunatha told Digital Trends. “We downloaded nearly 4,000 comic books from the Golden Age of comics (read: comics published from 1930–50 whose copyright has expired and which are therefore now free to download on the internet). Using neural networks called Faster R-CNNs, [we] extracted panels from pages, and speech bubbles from panels. We then sent these speech bubbles through Google’s OCR engine to extract text.”

This yielded a large dataset of panels and the text inside them, but the team still had to train a network to make sense of what it was seeing. They did so using a cascade of LSTMs (long short-term memory networks, a type of recurrent neural network suited to sequences) to predict the next panel. Put another way, like any slightly nerdy kid with a stack of comic books in their bedroom, the machine learned the tropes of comics by reading a boatload of them. Wait until it starts asking questions at the panels at next year's Comic-Con!
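For readers curious about what an LSTM does with a sequence of panels, here is a minimal, untrained sketch of a standard LSTM cell in plain Python. It is an illustration of the general technique, not the team's model: the three-number panel feature vectors, the 4-unit hidden size, and the dot-product `pick_candidate` step are all assumptions made for the example. The cell reads the panels in order, carrying a memory (`c`) forward and updating it through input, forget and output gates; with random weights the chosen answer is arbitrary until the network is trained on real comics.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LSTMCell:
    """A single LSTM cell with input (i), forget (f), output (o) gates and candidate memory (g)."""

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0):
        rng = random.Random(seed)

        def rand_matrix(rows: int, cols: int) -> Matrix:
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.hidden_size = hidden_size
        # Each gate gets an input weight matrix W, a recurrent matrix U and a bias b (random, untrained).
        self.params = {gate: (rand_matrix(hidden_size, input_size),
                              rand_matrix(hidden_size, hidden_size),
                              [0.0] * hidden_size)
                       for gate in "ifog"}

    def _gate(self, gate: str, x: Vector, h: Vector) -> Vector:
        W, U, b = self.params[gate]
        return [wx + uh + bb for wx, uh, bb in zip(matvec(W, x), matvec(U, h), b)]

    def step(self, x: Vector, state: Tuple[Vector, Vector]) -> Tuple[Vector, Vector]:
        h, c = state
        i = [sigmoid(z) for z in self._gate("i", x, h)]
        f = [sigmoid(z) for z in self._gate("f", x, h)]
        o = [sigmoid(z) for z in self._gate("o", x, h)]
        g = [math.tanh(z) for z in self._gate("g", x, h)]
        # Forget part of the old memory, add gated new information, then expose a gated view of it.
        c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
        return h_new, c_new


def encode_panels(cell: LSTMCell, panels: List[Vector]) -> Vector:
    """Run the cell over panel feature vectors in reading order; return the final hidden state."""
    h = [0.0] * cell.hidden_size
    c = [0.0] * cell.hidden_size
    for x in panels:
        h, c = cell.step(x, (h, c))
    return h


def pick_candidate(context_vec: Vector, candidates: List[Vector]) -> int:
    """Choose the candidate whose vector has the largest dot product with the context."""
    scores = [sum(a * b for a, b in zip(context_vec, cand)) for cand in candidates]
    return max(range(len(candidates)), key=lambda k: scores[k])


# Hypothetical features for three panels; a real system would derive them from artwork and OCR'd text.
panels = [[0.1, 0.3, 0.5], [0.2, 0.1, 0.4], [0.6, 0.2, 0.1]]
cell = LSTMCell(input_size=3, hidden_size=4)
context_vec = encode_panels(cell, panels)

# Hypothetical candidate vectors, assumed to be already projected into the same 4-dimensional space.
candidate_vecs = [[0.5, -0.2, 0.1, 0.3], [-0.4, 0.6, 0.2, -0.1], [0.1, 0.1, -0.5, 0.4]]
choice = pick_candidate(context_vec, candidate_vecs)
print(choice)
```

Training would adjust the weights so that the true next panel tends to score highest; the sketch only shows how the sequence of panels is folded into a single state.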

So how did it do? Like some lumbering robot that would have done battle with Marvel's cadre of superheroes back in the 1960s, it managed well enough to offer a threatening splash page, but not quite well enough to best the human heroes at the end of the issue.

As Manjunatha said, “Our findings are that while these networks are quite good at predicting what speech or panel might occur next — which is impressive given the highly non-trivial nature of the task — they are not nearly as good as human beings in doing the same.”

In other words, in a world in which we're quite used to hearing about machines doing things as well as, or better than, us puny humans, reading comic books remains an ability in our favor.

The team isn’t giving up hope, however. In fact, not only are they confident that future versions of the project will be better at carrying out this task, but computer-generated comics may be their next frontier.

“One of the most exciting future directions is in generation,” Iyyer said. “There have been many recent breakthroughs in generating both text and images. Comics present an interesting combination of the two: Can we build models that generate artwork as well as dialogue? The sequential aspect is also interesting. Given a sequence of panels as context, can we generate a new panel that makes sense in this context?”

So mankind's greatest heroes may have bested the machine menace for now, but the machines will be back in a later installment with an even bigger robot. To be continued…
