There are plenty of possible explanations for why this might be the case, but a big one could simply be that it’s easier to relate to events which take place closer to home than ones that happen thousands of miles away. If an incident happens on your street, or in your neighborhood, or in your city, country, or a neighboring country, there’s a higher likelihood that you’ll have some personal connection to it. You might know someone who lives there or has lived there, you may have visited in the past, or it could simply look a bit more familiar in a way that means that it is easier to empathize. Is that a trait we should be proud of? Not really. Is it a part of human nature? Almost certainly.

But in a globalized world, building empathy with people elsewhere is essential. While it’s understandable that we care for the people around us, we should also be able to put ourselves in the shoes of people from all over the world — especially when it comes to helping victims of disasters.

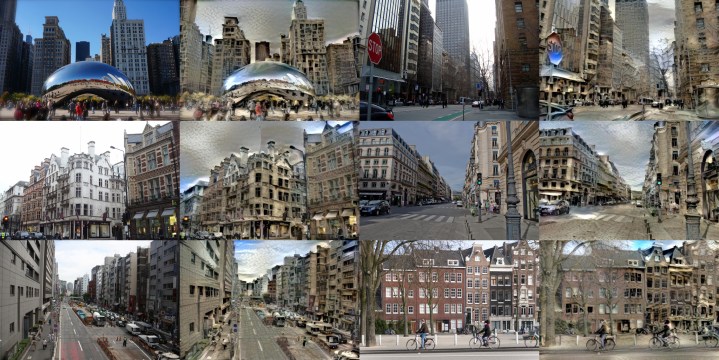

Could A.I. help? That’s a bold ambition, but it’s one that researchers from MIT Media Lab have embraced with a new project. Called “Deep Empathy,” their system uses a popular deep learning method called neural style transfer to create images showing neighborhoods from around the world as if they’ve been hit by some of the disasters afflicting other countries.

Using A.I. for social good

For example, it’s almost impossible to imagine the scale of the brutal six-year war in Syria, which has affected more than 13.5 million people, displaced hundreds of thousands, and destroyed an unimaginable number of homes. But what if some of those scenes took place in your local city of, say, Boston? That’s where Deep Empathy comes in. Rather than requiring human creators to mock up the scenes, it takes two images, namely a “source” and a “style” image as input, and then simulates a third image which reflects the semantic content of the source image and the texture of the style image.
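To make the "content of one image, texture of another" idea concrete, here is a minimal sketch of the kind of objective commonly used in neural style transfer. It is not the Deep Empathy code, and the project's exact variant isn't described here. The function names and the tiny hand-made "feature maps" are hypothetical stand-ins for the activations a convolutional network would produce.

```python
# Illustrative sketch only: small lists stand in for the feature maps a
# convolutional network would extract. All names here are hypothetical.

def gram_matrix(features):
    """Channel-by-channel correlations of a feature map.

    `features` is a list of channels, each a flat list of activations.
    Spatial positions are summed away, so the result describes texture
    rather than where things are in the picture.
    """
    n = len(features[0])
    return [[sum(a * b for a, b in zip(ci, cj)) / n for cj in features]
            for ci in features]


def mean_squared_error(xs, ys):
    """Mean squared difference between two equally sized nested lists."""
    flat_x = [v for row in xs for v in row]
    flat_y = [v for row in ys for v in row]
    return sum((x - y) ** 2 for x, y in zip(flat_x, flat_y)) / len(flat_x)


def style_transfer_loss(output, source, style,
                        content_weight=1.0, style_weight=1.0):
    """Penalize drifting from the source's content and the style's texture."""
    content_loss = mean_squared_error(output, source)
    style_loss = mean_squared_error(gram_matrix(output), gram_matrix(style))
    return content_weight * content_loss + style_weight * style_loss


# Two channels, four spatial positions each (made-up numbers).
source = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
shifted = [[0.0, 1.0, 0.0, 1.0], [1.0, 0.0, 1.0, 0.0]]
flat_style = [[1.0, 1.0, 1.0, 1.0], [1.0, 1.0, 1.0, 1.0]]

# Moving the pattern around changes the content but not the texture statistics.
same_texture = gram_matrix(source) == gram_matrix(shifted)

# An output identical to the source has no content loss, only style loss.
loss = style_transfer_loss(source, source, flat_style)
```

In a working system, the features would typically come from a pretrained image network, and the pixels of the output image would be adjusted step by step to shrink this loss. The two weights control how much the result favors the original scene over the borrowed texture.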

Image gallery: 1. Toronto input; 2. Toronto output; 3. San Francisco output; 4. San Francisco input.

“Researchers and artists used this technique to create interesting art pieces in the past, where they transform arbitrary images to Van Gogh-esque pictures, but this is among the first instances to use this technique towards social good,” Pinar Yanardag, one of the researchers on the project, told Digital Trends. “We also experimented the idea on different types of disasters, such as earthquake and wildfires, and got promising results. By using this technique, we wondered whether we can use A.I. to increase empathy for victims of far away disasters by making our homes appear similar to the homes of victims?”

The idea of using tech to promote empathy is a tricky one. After all, as psychologists like Sherry Turkle have spent their careers pointing out, technology can serve to distance us from others, even while it provides the opportunity to connect with an unprecedented number of people. Her 2011 non-fiction book perfectly encapsulated this idea with its title: Alone Together.

Other writers like the noted technology critic Evgeny Morozov have explored similar territory. Morozov’s tetchy, though brilliantly argued, 2013 book To Save Everything, Click Here takes issue with what he calls “the folly of technological solutionism.” At its most basic, it’s the idea that, whatever the giant social, political, or philosophical problem, there’s an app, a smart device, or an algorithm that will fix it.

In the most cynical reading, Deep Empathy is solutionism. It algorithmically airbrushes out the otherness of foreign places and makes distant disasters seem more relatable by showing them as happening on our home turf, presumably to people who look like us. But this is also a harsh reading of a project that could genuinely do some good.

Zoe Rahwan, a research associate at the London School of Economics, told us: “We are seeking to understand whether artificial intelligence can be used to evoke empathy for disaster victims from far away places. As humans, we have a range of biases which can limit our care for people who are different from us, and numb us to large numbers of injuries and deaths. We hope that Deep Empathy will help to overcome these biases, enabling empathy to be scaled in an unprecedented manner.”

Building in empathy

This isn’t the only use of technology we’ve come across that aims to make us into more empathic human beings. In their book The New Digital Age: Reshaping the Future of People, Nations and Business, authors Eric Schmidt and Jared Cohen imagine how virtual reality could be used to make people more capable of empathy by, for instance, transporting them to the Dharavi slum in Mumbai. Since then, we have seen numerous real-world illustrations of virtual reality allowing us to literally see the world through the eyes of people we might otherwise never come into contact with, or hear about only as numbers on an international news report.

A.I. could help break down the polarization of views that exists in society, too, by making sure we get exposed to views other than our own. Researchers in Europe have developed an algorithm which aims to burst the filter bubble of social networks by making sure we see stories reflecting a variety of perspectives about issues on which we may have views that are rarely challenged.

At the University of North Carolina at Charlotte, researchers are developing a chatbot whose goal is not to simply obey orders, but to engage users in arguments and counterarguments with the specific aim of changing a person’s mind. Such tools could pull us up on our biases and help us see other points of view. Heck, there are even devices that remind us of important issues like energy usage.

The so-called Forget Me Not reading lamp starts closing like a flower, throwing out less and less light, from the moment you turn it on. To reactivate it, you touch one of its petals, which serves as a constant reminder of your responsibility to use energy wisely.

There’s even an exoskeleton that’s designed not to make you feel younger, fitter and physically stronger, but rather to simulate the effects of old age. The goal? You guessed it: to make you more empathic to the struggles of being a senior citizen.

Ultimately, no one tool is going to make us more empathic. It’s not something that can be easily “augmented,” like adding a sixth sense that buzzes when we face north. Technology doesn’t offer quick fixes to these kinds of challenges, although it can provide creative new solutions to big problems. Deep Empathy is one such approach. If it can succeed at opening a few people’s eyes to global problems that they might otherwise not consider, that can only be a good thing. Is it a comprehensive, perfect solution? No. But it’s an attempt at using these tools for genuine social change.

Frankly, we’d love to see more computer science projects like it.