San Francisco has become the first city in America to ban facial recognition. Well, kind of.

In fact, the ban, voted on this week by the city’s Board of Supervisors, bars only the use of facial recognition by city agencies, such as the police department. It doesn’t affect the use of facial recognition technology in locations such as airports or ports, or private applications run by companies. Oh yes, and because the San Francisco police department doesn’t currently use facial recognition technology, this ruling bans something that wasn’t actually being used. While the department tested the technology on booking photos between 2013 and 2017, it never adopted it on a permanent basis.

So is this just meaningless legislation, something that sounds and looks good on paper but ultimately achieves nothing? Not at all. It’s a proactive move that will hopefully inspire others to follow in its footsteps. As I wrote recently, facial recognition has spread very, very quickly. Technology that seemed like science fiction for much of our lives is now everywhere. Heck, today’s top-selling smartphone models use facial recognition to unlock our devices. (As noted, this use won’t be affected by the new rules, which take effect on July 1.)

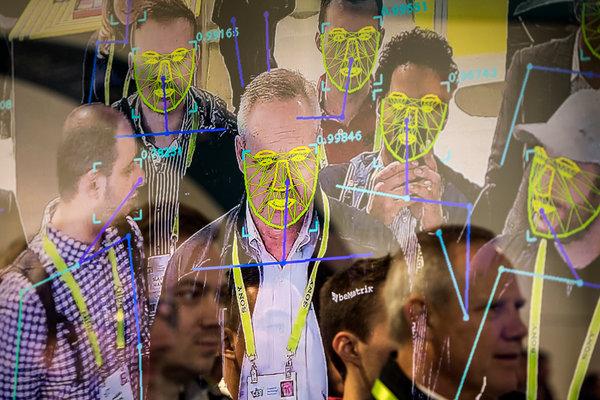

A display showing a facial recognition system for law enforcement during the NVIDIA GPU Technology Conference

Much of the adoption (and a great deal of the money) in facial recognition comes from security applications. This is especially visible today in places like China, where state media frequently talks up the authorities’ ability to identify (and, in turn, take action against) individual criminals in crowds of thousands. Thanks to advances in surveillance technology, a growing number of facial recognition tools are used for what is called live facial recognition (LFR): the automated, real-time “matching” of individuals in video footage against a curated “watchlist.”

There are really two problems with this application. The first is accuracy. Despite advances in facial recognition technology, concerns about error and bias remain. Many critics have rightly flagged the dangers of algorithmic bias, and facial recognition is one more area where that risk has proven real, particularly when it comes to people of color.

While performance varies with both the algorithm and the training data, many facial recognition tools have been shown to be less accurate at recognizing individuals with darker skin. A misidentification could falsely flag law-abiding black citizens as wrongdoers, forcing them to identify themselves or even exposing them to wrongful arrest. Although a match from a facial recognition system is not conclusive evidence in itself, reliance on these tools by law enforcement could still harm communities that already have cause to feel marginalized.

Do we want facial recognition at all?

Over time, some of these technical problems can and will be solved. More diverse training datasets could be assembled, improving accuracy across demographic groups. But even if these tools were entirely effective, there would still be reasons to be skeptical of their use. You don’t need to be a privacy absolutist to believe that citizens should be able to go about their lives without being constantly monitored. Like large-scale phone surveillance, mass facial recognition means surveilling everyone, regardless of what they may or may not have done.

The case that facial recognition’s benefits outweigh the loss of liberty and privacy it entails is nowhere near proven, let alone agreed upon. In short, if one goal of government bodies should be to build trust in the police, then tools like facial recognition may not be the best way to achieve it, whether or not they are free from bias.

There are two ways to regulate. One is to wait until something bad happens and then figure out, retroactively, how to put a stop to it and punish the wrongdoers. The other is to stop it before it reaches that point. San Francisco is choosing the latter. Whether this ruling will be revised at a later date, once more inclusive facial recognition systems have been developed and publicly demonstrated, remains to be seen. But it sets an example that could lead other cities and states to adopt similar rules. After all, if the residents of tech hotbed San Francisco are worried about facial recognition technology, chances are plenty of citizens elsewhere are as well.

Tech companies talk about the imperative of moving fast and breaking things. Laws, which move slowly and try to fix them, can often lag hopelessly behind. This is one very welcome exception to the rule.
