Artificial intelligence technology has proven itself useful in many different areas, and now birdwatching has gotten the A.I. treatment. A new A.I. tool can identify up to 200 different species of birds just by looking at one photo.

The technology comes from a team at Duke University that used over 11,000 photos of 200 bird species to teach a machine to differentiate them. The tool was shown birds from ducks to hummingbirds and was able to pick out specific patterns that match a particular species of bird.

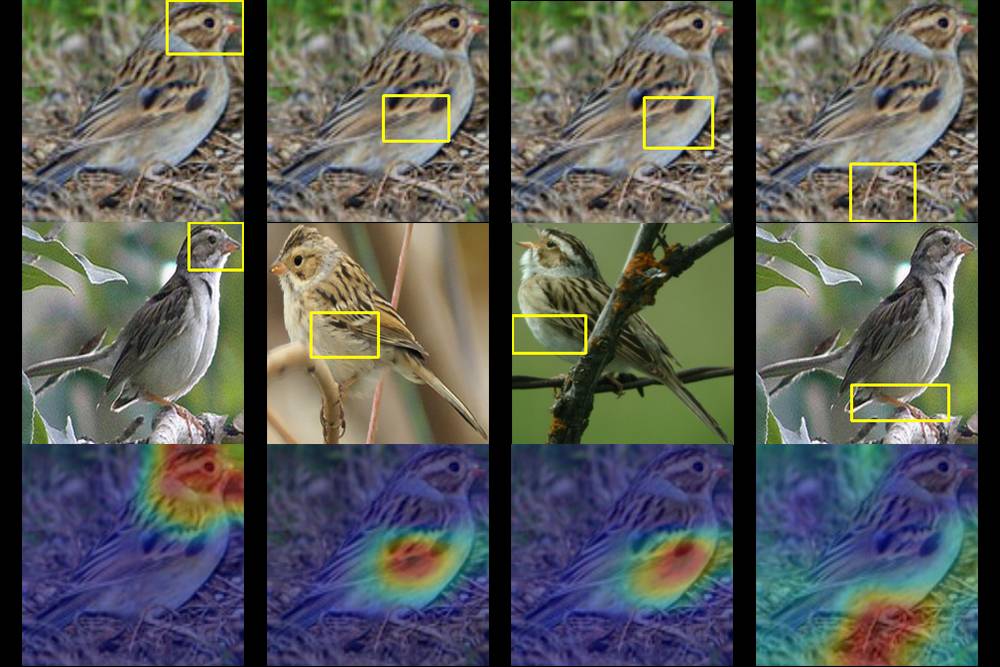

“Along the way, it spits out a series of heat maps that essentially say: ‘This isn’t just any warbler. It’s a hooded warbler, and here are the features — like its masked head and yellow belly — that give it away,’” wrote Robin Smith, senior science writer in Duke’s communications department, in a blog post about the new technology.

The researchers included Duke computer science Ph.D. student Chaofan Chen, Duke undergraduate Oscar Li, team members from the Prediction Analysis Lab, and Duke professor Cynthia Rudin. The team found that the model correctly identified bird species 84% of the time.

Essentially, the technology is similar to facial-recognition software, which remembers faces to suggest tags on social media sites or to identify people in surveillance videos. Unlike controversial facial-recognition software, however, the technology from Duke is meant to be transparent about how the machine learns to identify features.

“[Rudin] and her lab are designing deep learning models that explain the reasoning behind their predictions, making it clear exactly why and how they came up with their answers. When such a model makes a mistake, its built-in transparency makes it possible to see why,” the blog post reads.

The researchers hope to extend the technology so it can classify areas in medical images, such as identifying a lump in a mammogram.

“It’s case-based reasoning,” Rudin said. “We’re hoping we can better explain to physicians or patients why their image was classified by the network as either malignant or benign.”

Digital Trends reached out to Duke University to find out what other ways the new tool can be used, but we haven’t heard back.
