When you look back a decade, it’s easy to feel that little has changed. Sure, you had a different job back then, there were other shows on TV, and no one had described your shoes as “on fleek” just yet, but what’s different, really? Yet when asked to think about the year 2006, most people today would probably Google it on a smartphone.

Right then and there, you have your answer: Smartphones have changed everything.

Ten years ago, there was a good chance you owned a Motorola Razr flip phone, sent about 65 text messages a month to close friends, and did almost everything digital on your home computer, likely a bulky desktop PC. YouTube was less than a year old. Netflix was still mailing DVDs around. The iPhone was still a year away, and its famous App Store wouldn’t launch until 2008.

These days, an ordinary phone owner sends 65 texts every two days, and more than half of all internet browsing takes place on a smartphone. You can reach anyone, anywhere, instantly, and you can communicate with them in ways you couldn’t imagine even a decade ago.

We’re in the middle of the largest communication shift in human history, and absolutely none of us are seeing the forest for the trees when it comes to this technology. But if the digital world has changed what we talk about, what kind of impact is it having on how we talk? In what ways is it altering English, and how we think and process language?

Texting is a new language, and you’re bilingual

If you read anything about the English language in the news, there is a good chance that it has a headline like “The death of grammar and punctuation?” Everything is falling apart, and it’s all technology’s fault. A lot of smart people believe that popular new ways of communicating — like texting, tweeting, and updating your status on Facebook — are so informal that they are guiding English to its grave.

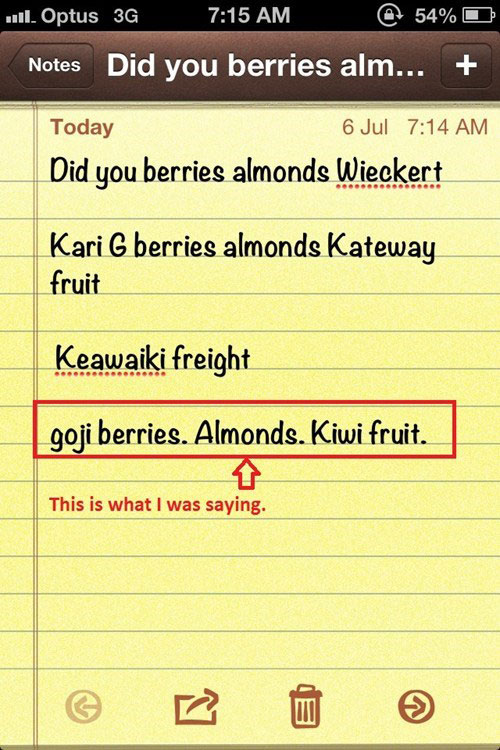

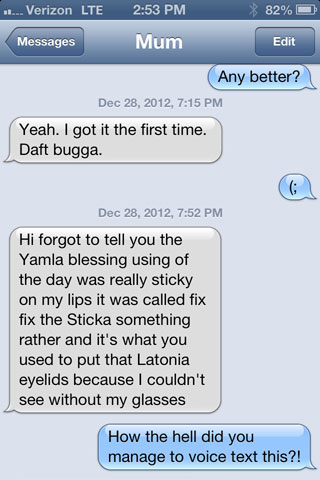

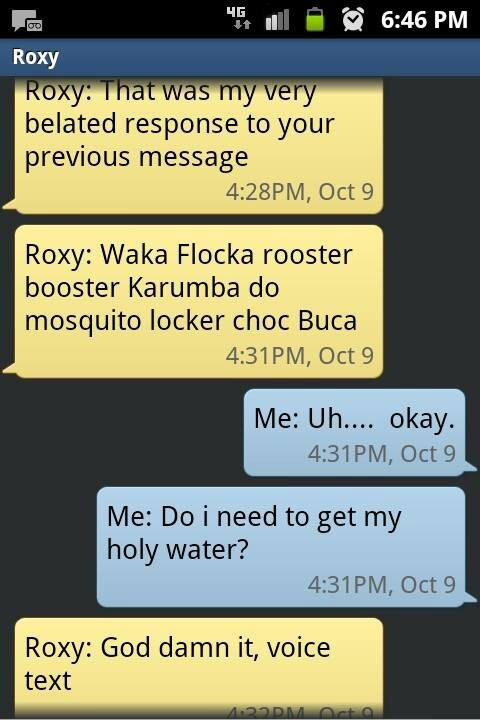

They have a name for this phenomenon, too: textisms, the abbreviations, acronyms, emoticons, emoji, and other attributes associated with the rise of texting and instant messaging. A lack of capitalization, too much CAPSLOCK, failure to punctuate properly, using asterisks to convey an emotion like *scared*, #hashtagging, and gamer l33t speech are also all considered textisms — netspeak, if u prefer.

The lack of proper grammar and spelling in 160-character text messages or 140-character tweets, they argue, is a clear sign that the English language is dying, and the next generation of kids will grow up illiterate. And it’s hard to blame them when this sentence:

“I had a great time. Thanks for your present. See you tomorrow”

… often looks like this in a text:

“Had a gr8 time tnx 4 ur present. C u 2mrw :)”

“It’s almost scary to think of what the future holds,” Emily Green wrote on the Grammarly blog (the word blog itself is an early textism of sorts) in an article titled Why text messaging is butchering grammar. “Texting is eroding literacy in young adults. The next generation of adults will be faced with serious literacy issues, which could lead to even more serious problems. We’re already facing some grammar and literacy barriers between generations.”

She’s right, in one way: USA Today’s list of “essential texting acronyms every parent must know” is an eye-opening read for any PIR (parent in room) who just learned what “Netflix & chill” means. Some research, like this 2012 Penn State study, seems to corroborate the alarming message about grammar as well, arguing that teenagers who use “techspeak” perform worse on grammar tests.

But what’s really happening may be the opposite. Instead of getting dumber, teenagers may have gained a new cognitive ability. You could consider texting, chatting, and instant messaging entirely new forms of communication, different from spoken or traditional written English. For the first time in the history of man, technology has put our friends and contacts within fingers’ reach at all times. People with a phone or computer can now write to each other as fast as they speak in person.

“What texting is, despite the fact that it involves the brute mechanics of something that we call writing, is fingered speech,” John McWhorter, author and associate professor of English and Comparative Literature at Columbia University, said in a 2013 TED Talk. “Now we can write the way we talk.”

Texting, he argues, is ushering in new writing forms and rules, meaning that not only is it not a bad thing for grammar, it actually means we have a generation of people who are bilingual in a new way — able to seamlessly switch between speech, writing, and an entirely new mode of communication that has its own linguistic rules. The bad grammar in our texts is a sign that we’re more literate than ever, not less, he says.

“What we’re seeing is a whole new way of writing that young people are developing, which they’re using alongside their ordinary writing skills, and that means that they’re able to do two things,” McWhorter said. “Increasing evidence is that being bilingual is cognitively beneficial. That’s also true of being bidialectal, that’s certainly true of being bidialectal in terms of your writing. Texting is actually evidence of a balancing act that young people are using today, not consciously of course, but it’s an expansion of their linguistic repertoire.”

Teenagers seem to agree. As far back as a 2008 Pew study, 60 percent of teens questioned did not think that their “electronic texts” were the same as “writing.” But they also admitted that they weren’t perfect. Sixty-four percent said that at least some informal writing had slipped into their schoolwork from time to time, but 86 percent believed strong formal writing skills were important to their future success.

Words never stop changing

The invention of a secondary language may seem impressive, but many people still believe literacy is dying. Professors and writers have complained about the decline in grammar and spelling among the youth for centuries.

“Over the history of time, there has always been people saying ‘the language is going to hell’ — and it’s always the older generation talking about the newer generation,” Jane Solomon, a lexicographer and senior content editor at Dictionary.com, told Digital Trends. “It’s just a matter of perception. English is constantly evolving, constantly changing. Some people believe that English stopped evolving slightly before they were born, and that’s just not the case.”

Is the Texas Drawl dying?

Smartphone users have learned to “code switch,” or easily swap, between writing formally and writing in textisms, and this newfound skill to swap identities from one text to the next may carry over to their voices and dialects as well. Lars Hinrichs calls this the “modularizing” of dialects — and he thinks human beings are getting very good at it.

Hinrichs is a part of the Texas English Project at the University of Texas at Austin, and has studied dialect changes in Texas English since 2008. Texas English is that extra-strong Texas South dialect, which uses words and phrases like “Thank ye kindly,” “y’all,” “winduh” (window), “howdy,” or “git-r-done!”

The Texas English dialect and others around the country are taking on a completely new, more modular life, thanks in part to the internet, smartphones, and other communication technology. Texans are now able to switch in and out of dialects and accents, swapping them strategically at the drop of a hat.

“[Barbara Johnstone, professor of English and linguistics at Carnegie Mellon University] was talking to businesswomen from Texas who don’t really use [Texas English] across the board anymore,” Hinrichs explained. “One woman said, ‘My Texas accent makes me $80,000 a year,’ because she can use it to butter up her clients. There are certain social stereotypes that are ideologically connected to those traditional accents. You can use them playfully whenever you want to activate those stereotypes.”

Before the digital age, Texans would have what Hinrichs described as a continuum of an accent that merely ranged from formal to informal. They might try to speak standard English in church, but elsewhere they would speak in their normal accent or dialect. These days, the switch can occur minute to minute.

Modularizing our dialects/accents (and identity, to some degree) is a new ability that, like texting, seems to represent an added level of depth to modern language skills.

The way someone talks and writes, Solomon said, is a product of where and when that person grew up.

“Look at prepositions that are changing that people in my generation say, which people in the previous generation would never say,” Solomon said. “One example of this is the expression ‘bored of’ versus ‘bored with’ or ‘bored by.’ ‘Bored of’ is more recent, and it’s really a generational divide. This is something I don’t even think about at all but it’s a mark of when I happened to be born.”

Such shifts in grammar and wording are always happening. It’s natural for words to drift into one another over time, McWhorter writes in his latest book, Words on the Move — but they seem to be shifting faster than ever thanks to technology. And regional words and phrases are also bubbling up to national or worldwide levels at a faster pace.

“[Previously,] if there was a word that was used by a small group of friends in the mountains somewhere in the world, and those friends never left the mountains, that word would never leave the mountains. Now, with the internet, if one of them has a really big Twitter following, that word can suddenly spread beyond that geographically isolated place,” Solomon told us. “There’s a difference in how words spread because of how the world is connected now by the internet and technology.”

In much the same way that modern smartphone users are becoming bilingual, they’re also learning to swap between other, new types of communication. Communities often have their own language rules and dialects, and people are learning how to “code switch” between them.

“The internet has its own regions,” said Solomon. “There’s a style that’s very particular to Reddit; there’s a style that’s very particular to Twitter; there’s a style that’s really particular to Instagram. In all these different places, people talk a little bit differently. But I think people know how to code switch. Someone who speaks on Reddit would not turn in a college paper in that language, and they know not to do that.”

Are emoji replacing English?

In online communities like Reddit, words can disappear just as quickly as they spread these days. LOL or “laugh out loud” was one of the first acronyms associated with netspeak online, originating in the 1980s and flourishing in the late 90s and early 2000s, but it may be on its way out. Facebook studied posts in May 2015 and found that LOL was used only 1.9 percent of the time when compared to competing words like haha (51.4 percent) and hehe (13.1 percent), along with emoji (33.7 percent).

Emoji started as prettier versions of text emoticons like the smiley face or sad face, but have quickly grown into much more. There are now 1,922 emoji on the iPhone. Hundreds of faces, objects, flags, families of every skin color, activities, and more now have an emoji image. Apple is such a fan that in iOS 10, a feature will suggest emoji replacements for words.

For example, if you plug in this familiar text:

“I had a great time. Thanks for your present. See you tomorrow”

On an iPhone with iOS 10, Apple will suggest you change it to:

“I had a

Emoji are the hottest new texting trend, and there is already a debate over the negative impact they’re having on language. (And no, I’m not talking about the peach butt emoji.)

According to Kyle Smith of the New York Post, emoji are ruining civilization. Smith argued, using broad strokes, that sentences like “Had a gr8 time tnx 4 ur present. C u 2mrw” look like a “sonnet” compared to what people are doing with emoji these days. For example, many people go far beyond what Apple suggests, ending up with texts more like this: “Had a

Citing USA Today’s use of emoji in one of its weekend newspaper editions, Smith said that cavemen drew pictures to communicate because their ideas were simple; language is supposed to offer subtlety and complexity.

“Leaving the language corner of your brain to grow cobwebs and instead turning your attention to the picture-generating muscles isn’t a bold leap into the future, it’s a giant leap backward: in ambition, in maturity, in evolution,” wrote Smith. “It’s like deciding that going to the restroom is too much trouble and relying on Depends instead.”

But are typed smiley-face emoticons and their emoji descendants really turning smartphone users into cavemen who wear diapers?

So the USA today put FB’s new emojis in their print edition. It feels so wrong on paper IMHO via @jodiontheweb pic.twitter.com/dv7AhFL1Ke

— Felicity Morse (@FelicityMorse) October 9, 2015

Linguist Gretchen McCulloch took the time to see if it was possible to speak in all emoji and rid ourselves of words forever. The answer was a definitive no.

Emoji help add emotion to text to avoid being misunderstood, and have evolved into a fun tool to add new meanings to pictures, but she argued that they are mainly a fun new “supplement to language,” and cannot replace it entirely, no matter what language doomsdayers say.

“Emoji and other forms of creative punctuation are the digital equivalent of making a face or a silly hand gesture while you’re speaking,” McCulloch wrote on Mental Floss. “You’d feel weird having a conversation in a monotone with your hands tied behind your back, but that’s kind of what it’s like texting in plain vanilla standard English.”

Emoji add that personal touch, according to Paul JJ Payack, president and chief word analyst at the Global Language Monitor. His site has been at the forefront of using big data to understand how technology is impacting languages around the world since 2003.

“Emoji are every bit as much a communication tool as the letter A,” Payack told Digital Trends. “We don’t know how it’s going to evolve so some people poo-poo it, but they’re fantastic, useful bits of language.”

Payack believes emoji and textisms are such a revolution that they may eventually become a formal part of English. Instead of an alphabet, there will be an “AlphaBit” filled with letters, numbers, emoticons, and emoji. Other languages may not be as lucky as English, though.

Thousands of languages are dying

You are reading this article in English, but there are around 7,100 other known living languages around the globe, according to Ethnologue. You wouldn’t know it from browsing the World Wide Web, though. You might like to think of the web as a diverse place filled with the world’s knowledge, but only a fraction of active languages spoken around the globe are online. English and other dominant languages have a stranglehold on the internet, explained linguist and mathematician András Kornai in a recent scientific paper titled “Digital Language Death.” The future for a language that isn’t properly maintained and spoken online may not be rosy.

“The internet is bringing into more stark relief the fact that there are languages out there that are dying,” Kornai, a professor at the Budapest Institute of Technology and senior scientific advisor at the Computer and Automation Research Institute at the Hungarian Academy of Sciences, told Digital Trends. “By the traditional criteria of language vitality, 30 to 40 percent of [all] languages are endangered and on the verge of dying.”

The scale is staggering. More than 2,800 languages could disappear, according to the Endangered Languages Project, and take hundreds, maybe thousands of years of human history with them. Look online and the problem compounds.

“By digital criteria, we see that the vast majority of languages, although they may be preserved, they are not making the transition to the digital realm,” Kornai continued. “That means that 95 percent of them, or more, are left behind, and we’re never going to see them on the internet other than as preserved specimens.”

The die-off is even worse, according to Kornai’s research, on places like Wikipedia. Of the roughly 7,100 languages still alive, he concluded that only 2,500 of them may survive for another century, fully intact and healthy. Worse, only about 250 or so “vital” languages will make the digital leap in any meaningful way.

The dying languages probably aren’t ones you’ve heard of. Many are spoken in remote villages where elders either do not have the means to get online or simply aren’t interested, and the youth are learning a more common language like English or Hindi instead of their parents’ tongue.

“India is a good example where most people, if they’re literate, are literate in English,” Alan Black, a professor at the Language Technologies Institute at Carnegie Mellon University, told Digital Trends. Black is teaching a seminar on endangered languages this fall. “Once you get into middle school and beyond, they’re probably being taught in English, mainly because regional languages don’t really work. People in the big cities will typically speak multiple big languages and the only real common language is probably English — or maybe Hindi in the north, but mostly English.”

“People become more literate in English, so if you give them technology, they’ll do their short messaging in English,” Black continued. “They will not send their messages in Hindi, Marathi, Gujarati, etc., because there isn’t good support for them.”

Imagine if you couldn’t buy a laptop with an English keyboard. Chances are, you’d learn to type in whatever language your keyboard came in. Broad support for a language on a software and hardware level indicates that the region where it is spoken has a strong enough economy that it will carve out a little piece of the internet for its language.

But the internet has clear favorites — call them incumbents. There are two dozen or so of what Kornai calls “thriving” hyper-connected languages like Japanese, Spanish, and Mandarin, which are used by native speakers and foreigners all over the world. These languages dominate the internet and technology industries. And online, one rules them all.

English dominance and the rise of China

English reigns supreme. Even if English isn’t your first language, it is probably your second. It’s not the most spoken language, but it was the first online and has become the global standard for aviation, software, film, military, and science communication, according to Payack. Part of the reason for its incredible success is how malleable it is. English can and does change as new people begin speaking it.

“English has about a million words and French has 100,000 or 200,000 words,” he told Digital Trends, explaining how tightly France holds onto its language. “But in English, we take everything. If someone comes up with a word in a call center in India, it can become a part of the language. The English language is welcoming.”

Payack’s Global Language Monitor tracks the robustness of the English language, which reached 1 million words back in 2009 and grows at about 14.7 words per day, according to his methods.

The next decade may be especially interesting for English. The rise of China as a global superpower has brought hundreds of millions of Chinese speakers online, and we’re only beginning to feel the impact of its many languages and dialects on technology and the internet. Some of China’s larger language traits could creep into English as more Chinese people learn to speak it.

“There are a lot of Chinese English speakers — think about the things that they have in their language that could come into English,” Black, the Carnegie Mellon University professor, told us. “They don’t have determiners, so no ‘the’ and ‘a.’ Could we drop those? They don’t have plural in the same sense, so would we remove that? They also don’t make a distinction between he and she, and you wonder whether that might eventually come into English.”

“There may be simplifications that happen in the common language that are coming from the individual languages of the new speakers, and that is sort of possible,” he continued. “We may see some things in the future of international English that are simplified in order to make it easier for nonnative speakers.”

The future may have a lot more Chinese in it, but it probably won’t sound a lot like the inter-planetary sci-fi western verse of Joss Whedon’s Firefly, where English speakers merely say a few expletives in Chinese. International English may one day sound as foreign to us as a teenager’s text messages might look to a time traveler from 1975.

Slow down for Siri

People speaking International English may have a tough time trying to talk to modern computers, too. It seems like every piece of tech now takes voice commands, from cars to phones to Xboxes. Voice assistants like Apple’s Siri or Amazon’s Alexa are embedded in all sorts of products, each eager to hear your commands. Unfortunately, they do a poor job of understanding what you’re saying even if you speak in perfectly clear English. And if you have an accent, your chance of being understood by a computer is dangerously close to zero.

But that doesn’t mean Texans or other folks with a strong accent can’t use Siri. They still try, but they have to work around its inability to understand dialects.

Many people have actually developed a new accent that they use to communicate with tech products, according to Alan Black. It evolved from failed attempts to be understood on the telephone and from talking to automated telephone voices. People often talk more slowly and clearly on the phone, and they tend to use a similar clear, slow, monotone voice to talk to tech, hoping against hope that it will understand what they’re saying.

“We’ve clearly learned to speak on the telephone. We speak in a different way. We speak clearer, with less dialect, less accent,” said Black. “The question is, are we modifying the way we speak when we’re speaking to machines? And the answer is yes, we are. If you’re standing next to somebody and they’re talking on their phone, you can usually tell if they’re talking to a machine or if they’re talking to a human because if they’re talking to a machine, they’re more articulate, speak in clear sentences, and they don’t interrupt the machine because that doesn’t work.”

Black said companies like Apple do not encourage people to consider voice assistants as friends because they might start talking too casually to them. This is partially because they cannot yet understand complex sentences or requests — but also so that users actively tame their accents and dialects.

“If you look at Siri, the voice they’ve chosen for it is a helpful agent who is not your friend,” continued Black. “I have no idea whether they did this deliberately, but one of the effects is that people speak to her more clearly and more articulate than what they would do if she was their friend.”

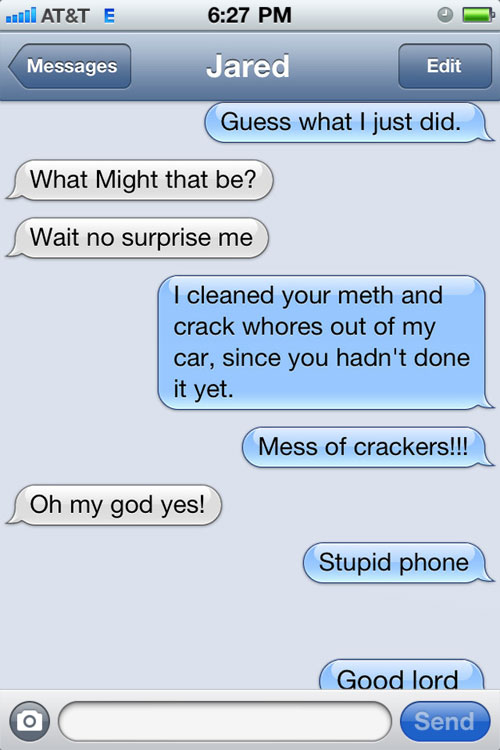

Siri is teaching folks how to modularize their accents and dialects to a greater degree, but voice isn’t the only affected area. Typing in accents has gotten more difficult as well.

According to Lars Hinrichs, who is part of the Texas English Project at the University of Texas at Austin, the increase in autocorrect features on smartphones and computers is rubbing out accents and unique dialects right along with spelling errors.

“Every day I find myself trying to write something nonstandard, but [spell check is] on your case. You can’t have an ‘in’ ending, and they give you the ‘g’ whether you want it or not,” Hinrichs said. “It’s very constraining … It should be the next frontier with these companies. They should deal with [accents] and not force the spell checkers to erase them.”

Overeager spell checking is especially concerning for English dialects that are endangered, like Jamaican Creole. According to Hinrichs, 90 percent of Jamaicans grow up speaking it, but don’t know how to write it. Thanks to autocorrect on smartphones and computers, many attempts to write Creole are converted into standard English. Personality and uniqueness are drained from the language one word at a time. Autocorrect is also teaching those who use smartphones, web browsers, or word processors that the way they’re writing is wrong, and this is the right way.

The way to fix this is to get the Jamaican dialect recognized more officially inside the country, around the world, and online, but like many endangered languages around the world, what’s missing is the infrastructure to make this happen. Jamaica is not a rich country, and those affected by this change may feel lucky to have a smartphone at all.

What language will AI speak?

Voice assistants, smartphones, PCs, and most of the tech world may have a tough time with dialects and accents today, but in a few years, they may begin to talk and write back to people so frequently that they form their own dialects and begin to affect ours.

Mohamed Musbah is the vice president of product at Maluuba, a Canadian company working on the next generation of artificial intelligence. He spends his days imagining a world where AI assistants like Siri and Alexa actually work.

Maluuba’s goal, he told Digital Trends, is to help machines (AI) converse with us in much more natural dialogue, and to give them actual ‘reading comprehension’ so they can truly understand words and learn how to interact with people. It has had some luck, too. Earlier this year, Maluuba’s AI was able to ‘read’ Harry Potter and the Sorcerer’s Stone and answer multiple choice questions correctly with about 70 percent accuracy.

In the future, he imagines AI assistants, or “bots,” that people chat with all the time, at work and at home. They would do a lot more than tell us the weather, and would be a much more vocal, contributing part of society.

The growth of AI

There are so many limitations with AI voice and text assistants that we humans are bending over backward trying to get them to understand a single word we’re saying, according to Mohamed Musbah of Canadian company Maluuba, which focuses on machine literacy.

“When you unpack Siri, it’s not really an AI assistant,” Musbah told Digital Trends. “It’s more a system that’s trained to understand 20 to 25 [questions] really well.”

Currently, an AI assistant like Siri can understand preprogrammed language. You can ask it where the nearest sushi restaurant is, but ask a follow-up or anything about that restaurant and it will fall flat: The calculations are just too complex, explained Musbah. It has no idea how to make sense of our second or third question and put it in context. This is why people talk in a monotone to Siri, and type robotic queries when they search for things on Google.

There’s a long journey ahead. “Five years from now, if we’ve taught a machine to be as intelligent as a 2-year-old child — a 5-year-old child would be amazing — but if we teach it to the point where it has simple fundamental reasoning capabilities or understanding capabilities against the environment that it’s in, then we’ve truly solved something remarkable,” said Musbah.

In a world where voice assistants can comprehend words, and hopefully understand a few more languages and dialects more easily, Musbah believes humans will give their mundane tasks to machines. Businesses will have human workers and bot workers, and both will likely have to learn new language tricks to understand one another.

But if machines play a more active role speaking with humans and writing to them, will English take on a new robotic tone? Will people all learn how to code switch to yet another new dialect?

Payack isn’t optimistic: “Bill Gates says by 2040, robots will take over many jobs,” he said, adding that “the next horizon is ordering at McDonald’s and computers will talk back to you.” When that happens, robots “could actually limit the expansion of words because they aren’t thinking like we are. They are straight and narrow.” Payack believes that “if you have enough robots in society,” they could limit word growth.

The best way to prevent that may be to make sure that robots and AI of the future are well read and are fed a healthy diet of unbiased, clean data. Much like a child who grows up sheltered and brainwashed may end up believing lies are truth, a bot that is fed biased data will turn into a bot that uses skewed language.

“If you teach a machine to learn language that’s heavily biased or skewed toward one topic, let’s say being taught to learn like a conservative or a liberal, it becomes really, really good at just doing that,” Musbah said. “It’s one area we researchers have to think a lot about. Diversity of data is an extremely important aspect of advancing this space.”

For proof of this, look no further than Microsoft’s chatbot “Tay,” which launched in March. Within a day of interacting with people on Twitter, it had been fed so many racist, sexist, and horribly mean tweets that it began to sound completely deplorable (to borrow a term) itself, and it had to be shut down.

Regulating the data that creators feed AI in the next decade may be as important to their language development as their ability to reason.

The future is going to be totally :)

So, assuming the aliens from the movie Arrival do not arrive and upend language altogether, what do the next 10 years hold, as language and tech continue to intermingle in new ways?

Despite the alarm bells raised by some who fear that the quick and informal ways teenagers text warn of dark days ahead, the future of English, both written and verbal, looks rosy. There are no signs that teenagers are worse at reading or writing. It is also unlikely that they’ll ride off into the sunset and leave English behind for a new language made entirely of acronyms and emoji.

Growing up in this hyperconnected Facebook and smartphone decade, the internet decade before that, or the dawn of personal computers seems to have endowed each generation with more and more advanced language skills. Younger generations are harnessing texting and instant messaging and turning them into an entirely new form of communication: written speech, or fingered speech. They text more like they talk, and have found new ways to work emotion, affirmations that they’re listening to one another, and signs that they understand each other into simple texts. They’re thinking about writing in ways no one has before.

All tech users are becoming bilingual, in a way, learning to quickly switch between different modes of speech and writing based on who they’re chatting with, and how.

Every new mode of communication — be it Facebook, Twitter, or new visual sharing apps like Instagram, Periscope, Reddit, or Snapchat — has its own rules and style, and members of those communities are creating sophisticated new language rules for each of them. More incredible is the rate at which they’re learning to swap between them.

For some, the ability to switch between language styles extends to verbal communication. Dialects, like Texas English, used to be hard-coded into folks, but now they’re gaining the cognitive ability to transform them into a tool that they can turn on and off. Much like swapping between Twitter and email, some people can now swap between accents and dialects without hassle as they talk to different people. This is due to the effects of modern society: increasing rates of moving, urban growth, the internet, and smartphones. Most tech users have even created their own “machine voice” dialect to talk to voice assistants like Siri and those awful automated telephone bots.

If you speak English, you may have to modify your speech so Siri understands you, but your language is fundamentally healthy. Right now, English is the top language in the world by many measures, and is likely to stay that way, even as thousands of languages fail to make the transition to the digital world and die off in the next century. Only about 5 percent of active languages may make it, and only a handful will prosper in the same league as English.

But even English has challenges ahead of it. With an increasing number of people in China and many other countries taking up international English as a second language, it will probably change, and possibly end up sounding a little foreign to Americans. It could become simpler, losing some of its key aspects like plural words and determiners like “the” as more Chinese speakers join the fray.

The effect of Chinese speakers on English may seem small in comparison to the unknown effects that machines may have once they begin speaking more. Bots are only just beginning to write articles on the internet, and in the next few years, AI assistants may finally get smart enough that we want to talk to them and ask them to help us with more complex tasks. How much will English and other languages transform as machines begin to participate in the world of language in a tangible way? Will they standardize English more, limiting our words, or will they enrich it?

Whatever tech hits us with in the next decade, and century, it looks like we humans may be a lot more creative and adaptable than we give ourselves credit for. What new language skills will a child born with an iPhone 7 or Google Pixel have? How will they think differently than we do? What happens if you learn how to Snapchat as you learn how to read? Even 20-somethings may have a tough time keeping up with smartphone-native kids. They’ll likely think and speak in entirely new ways, and that is something to celebrate and study.