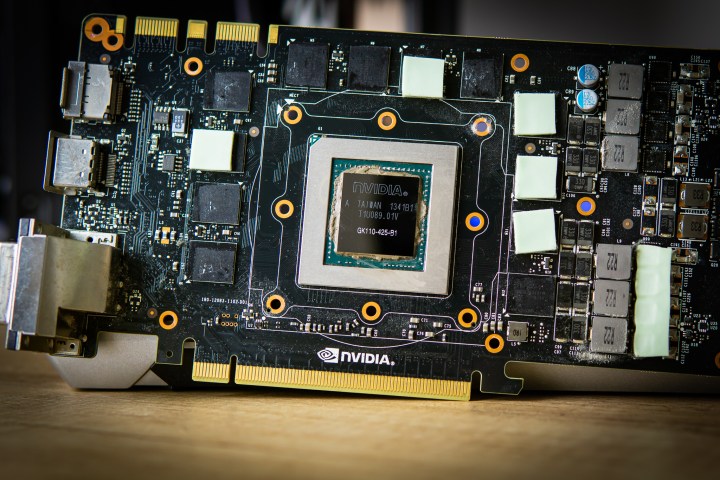

Performance boosts are expected with each new generation of the best graphics cards, but it seems that Nvidia and IBM have their sights set on greater changes.

The companies teamed up to work on Big accelerator Memory (BaM), a technology that connects graphics cards directly to superfast SSDs. This could give GPUs a larger effective memory capacity and higher storage-access bandwidth while limiting the involvement of the CPU.

This type of technology is not entirely new. Microsoft's DirectStorage application programming interface (API) works in a somewhat similar way, speeding up data transfers between the GPU and the SSD. However, DirectStorage relies on external software, only applies to games, and only works on Windows. The Nvidia and IBM researchers are instead working on a way to connect GPUs to SSDs without the need for a proprietary API.

The method, amusingly referred to as BaM, was described in a paper written by the team that designed it. Connecting a GPU directly to an SSD could provide a meaningful performance boost, especially for resource-heavy tasks such as machine learning. As such, BaM would mostly be used in professional high-performance computing (HPC) scenarios.

With currently available technology, processing such heavy workloads requires the graphics card either to rely on large amounts of special-purpose memory, such as HBM2, or to have efficient access to SSD storage. Because datasets keep growing in size, it's important to optimize the connection between the GPU and storage so that data can be transferred efficiently. This is where BaM comes in.

“BaM mitigates the I/O traffic amplification by enabling the GPU threads to read or write small amounts of data on-demand, as determined by the compute,” said the researchers in their paper, first cited by The Register. “The goal of BaM is to extend GPU memory capacity and enhance the effective storage access bandwidth while providing high-level abstractions for the GPU threads to easily make on-demand, fine-grain access to massive data structures in the extended memory hierarchy.”
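The "I/O traffic amplification" the researchers mention can be made concrete with a toy calculation. The Python sketch below is a hypothetical model, not code from the BaM paper: it assumes a dataset of fixed-size records stored on an SSD and compares how many bytes move when data is staged in large chunks (as a CPU-orchestrated pipeline might do) versus fetched in small blocks on demand. The record, chunk and block sizes, as well as the function names, are illustrative assumptions.

```python
"""Toy model of I/O traffic amplification (hypothetical, not BaM's code).

Assumptions (illustrative only): the dataset is a flat array of fixed-size
records on an SSD, a sparse workload touches a small set of record indices,
and storage is read in fixed-size units (large chunks or small blocks).
"""
import random

RECORD_BYTES = 8                # hypothetical record size (e.g. one float64)
COARSE_CHUNK_BYTES = 1 << 20    # hypothetical 1 MiB chunk staged in bulk
FINE_BLOCK_BYTES = 512          # hypothetical small block fetched on demand


def bytes_moved(indices, unit_bytes):
    """Bytes transferred if every distinct unit touched by `indices` is fetched once."""
    units = {(i * RECORD_BYTES) // unit_bytes for i in indices}
    return len(units) * unit_bytes


def amplification(indices, unit_bytes):
    """Ratio of bytes transferred to bytes the computation actually uses."""
    useful = len(set(indices)) * RECORD_BYTES
    return bytes_moved(indices, unit_bytes) / useful


if __name__ == "__main__":
    rng = random.Random(0)
    num_records = 100_000_000                          # ~800 MB under these assumptions
    touched = rng.sample(range(num_records), 10_000)   # sparse, data-dependent accesses

    coarse = amplification(touched, COARSE_CHUNK_BYTES)
    fine = amplification(touched, FINE_BLOCK_BYTES)
    print(f"coarse-grained staging: {coarse:,.0f}x more bytes than needed")
    print(f"fine-grained on-demand: {fine:,.0f}x more bytes than needed")
```

With sparse accesses like these, staging whole chunks moves orders of magnitude more data than the computation uses, while on-demand small-block reads keep the overhead far lower. That gap is the waste that fine-grain, GPU-initiated access is meant to cut.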

For many people who don’t work directly with this subject, the details may seem complicated, but the gist of it is that Nvidia wants to rely less on the processor and connect directly to the source of the data. This would both make the process more efficient and free up the CPU, making the graphics card much more self-sufficient. The researchers claim that this design would be able to compete with DRAM-based solutions while remaining cheaper to implement.

Although Nvidia and IBM are undoubtedly breaking new ground with BaM, AMD worked in this area first: in 2016, it unveiled the Radeon Pro SSG, a workstation GPU with integrated M.2 SSDs. However, the Radeon Pro SSG was intended strictly as a graphics solution, whereas Nvidia and IBM are taking the idea a few steps further by targeting complex, heavy compute workloads.

The team working on BaM plans to release the details of its software and hardware optimizations as open source, allowing others to build on its findings. There is no word yet on when, if ever, BaM might be implemented in future Nvidia products.
