Nvidia is introducing its new NeMo Guardrails tool for AI developers, and it promises to make AI chatbots like ChatGPT just a little less insane. The open-source software is available to developers now, and it focuses on three areas to make AI chatbots more useful and less unsettling.

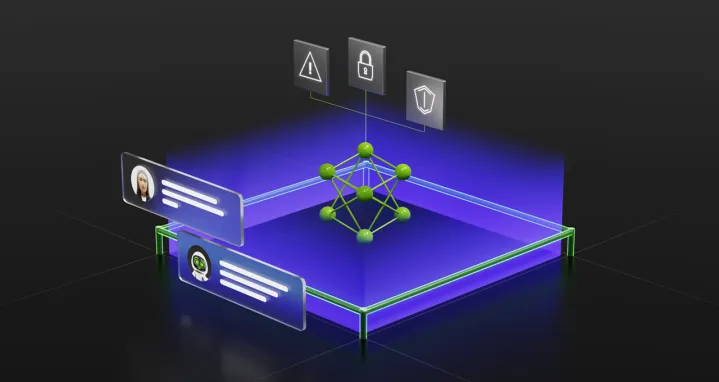

The tool sits between the user and the Large Language Model (LLM) they’re interacting with. It’s a safety layer for chatbots, intercepting queries before they ever reach the language model to either stop the model from responding or to give it specific instructions about how to respond.

Nvidia says NeMo Guardrails is focused on topical, safety, and security boundaries. The topical focus seems to be the most useful, as it forces the LLM to stay in a particular range of responses. Nvidia demoed Guardrails by showing a chatbot trained on the company’s HR database. When asked a question about Nvidia’s finances, it gave a canned response that was programmed with NeMo Guardrails.
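To make the topical idea concrete, here is a minimal Python sketch of a topic check that returns a canned reply for off-topic questions. It does not use the NeMo Guardrails API; the keyword list, the `fake_llm` stand-in, and the function names are all hypothetical, and a real deployment would likely use something more robust than keyword matching.

```python
from typing import Callable

# Hypothetical allow-list for an HR assistant (illustrative only).
ALLOWED_KEYWORDS = {"vacation", "benefits", "payroll", "leave", "holiday"}
CANNED_REFUSAL = "Sorry, I can only answer questions about HR topics."


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; it simply echoes the prompt."""
    return f"LLM answer to: {prompt}"


def is_on_topic(query: str) -> bool:
    """Naive topical check: does the query mention any allowed keyword?"""
    words = {w.strip(".,?!").lower() for w in query.split()}
    return bool(words & ALLOWED_KEYWORDS)


def guarded_chat(query: str, llm: Callable[[str], str] = fake_llm) -> str:
    """Forward on-topic queries to the model; otherwise return the canned reply."""
    if not is_on_topic(query):
        return CANNED_REFUSAL
    return llm(query)
```

In this sketch, a question about vacation days is passed through to the model, while a question about quarterly revenue gets the canned refusal without the model ever being called.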

This is important due to the many so-called hallucinations we’ve seen out of AI chatbots. Microsoft’s Bing Chat, for example, provided us with several bizarre and factually incorrect responses in our first demo. When faced with a question the LLM doesn’t understand, it will often make up a response in an attempt to satisfy the query. NeMo Guardrails aims to put a stop to those made-up responses.

The safety and security tenets focus on filtering out unwanted responses from the LLM and preventing it from being toyed with by users. As we’ve already seen, you can jailbreak ChatGPT and other AI chatbots. NeMo Guardrails will take those queries and block them from ever reaching the LLM.
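As a rough illustration of blocking a query before it reaches the model, the Python sketch below screens input against a couple of jailbreak-style phrases. This is not how NeMo Guardrails is implemented; the patterns, the `BLOCKED_MESSAGE` text, and the `screen_query` helper are assumptions made up for this example, and simple pattern matching like this is easy to evade.

```python
import re
from typing import Callable

# Hypothetical phrases that sometimes appear in jailbreak attempts
# (illustrative and far from exhaustive).
JAILBREAK_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"pretend (that )?you have no (rules|restrictions)", re.IGNORECASE),
]
BLOCKED_MESSAGE = "This request can't be processed."


def looks_like_jailbreak(query: str) -> bool:
    """Return True if the query matches any known jailbreak-style pattern."""
    return any(pattern.search(query) for pattern in JAILBREAK_PATTERNS)


def screen_query(query: str, llm: Callable[[str], str]) -> str:
    """Call the model only when the query passes the screen."""
    if looks_like_jailbreak(query):
        # The model is never invoked for a blocked query.
        return BLOCKED_MESSAGE
    return llm(query)
```

The point of the design is that the check runs before the model call, so a blocked query costs no inference and gives the model no chance to be manipulated.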

Although NeMo Guardrails is built to keep chatbots on-topic and accurate, it isn’t a catch-all solution. Nvidia says it works best as a second line of defense, and that companies developing and deploying chatbots should still train the model on a set of safeguards.

Developers need to customize the tool to fit their applications, too. NeMo Guardrails is designed to sit on top of middleware that AI applications already use, such as LangChain, which provides a framework for how AI chatbots interact with users.

In addition to releasing it as open-source software, Nvidia is offering NeMo Guardrails as part of its AI Foundations service. This package provides several pre-trained models and frameworks for companies that don’t have the time or resources to train and maintain their own models.
