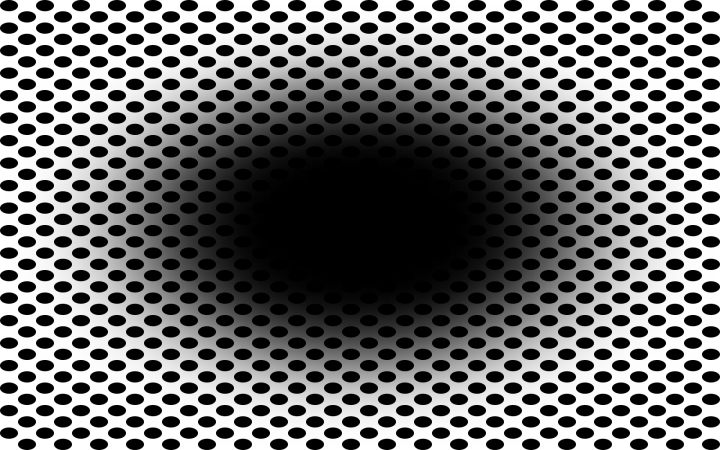

You look at an image of a black circle on a grid of circular dots. It resembles a hole burned into a piece of white mesh material, although it’s actually a flat, stationary image on a screen or piece of paper. But your brain doesn’t comprehend it like that. Like some low-level hallucinatory experience, your mind trips out, perceiving the static image as the mouth of a black tunnel that’s moving towards you.

Responding to the verisimilitude of the effect, the body starts to react unconsciously: the pupils dilate to let in more light, just as they would if you were about to be plunged into darkness, to ensure the best possible vision.

The effect in question was created by Akiyoshi Kitaoka, a psychologist at Ritsumeikan University in Kobe, Japan. It’s one of the dozens of optical illusions he’s created over a lengthy career. (“I like them all,” he said, responding to Digital Trends’ question about whether he has a favorite.)

This new illusion was the subject of a piece of research published recently in the journal Frontiers in Human Neuroscience. While the focus of the paper is firmly on the human physiological responses to the novel effect (which it turns out that some 86 percent of us will experience), the overall topic may also have a whole lot of relevance when it comes to the future of machine intelligence — as one of the researchers was eager to explain to Digital Trends.

An evolutionary edge

Something’s wrong with your brain. At least, that’s one easy conclusion to be drawn from the way that the human brain perceives optical illusions. What other explanation is there for a two-dimensional, static image that the brain perceives as something totally different? For a long time, mainstream psychology figured exactly that.

“Initially people thought, ‘Okay, our brain is not perfect … It doesn’t get it always right.’ That’s a failure, right?” said Bruno Laeng, a professor at the Department of Psychology of the University of Oslo and first author of the aforementioned study. “Illusions in that case were interesting because they would reveal some kind of imperfection in the machinery.”

Psychologists no longer view them that way. If anything, research such as this highlights how the visual system is not just a straightforward camera. The “Illusory Expanding Hole” optical illusion makes clear that the eye adjusts to perceived, even imagined, light and darkness, rather than to physical energy.

Most significantly, it showcases that we don’t just dumbly record the world with our visual systems, but instead perform a continuous set of scientific experiments in order to gain a slight evolutionary advantage. The goal is to analyze data presented to us and try to preemptively deal with problems before they become, well, problems.

“The brain has no way to know what’s [really] out there,” Laeng said. “What it’s doing is building up a sort of virtual reality of what could be out there. There’s a little bit of guesswork. In this respect, you can think of the brain as a kind of probabilistic machine. You can call it a Bayesian machine if you want. It’s using some prior hypothesis and trying to test it all the time to see whether that works.”

Laeng gives the example of our eyes making adjustments based on nothing more than the impression of light from the sun, even when it is glimpsed through cloud cover or an overhead canopy of leaves. Just in case.

“What matters in evolution is not that it is true [at that moment], but it is probable,” he continued. “By constricting the pupil, your body is already adjusting to a situation that is very likely to happen in a short period of time. What happens [if the sun suddenly comes out] is that you are dazzled. Dazzled means incapacitated temporarily. That has enormous consequences whether you’re a prey or whether you’re a predator. You lose a fraction of a second in a particular situation and you may not survive.”
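Laeng’s “Bayesian machine” can be made concrete with a toy calculation. The sketch below is purely illustrative and is not drawn from the study: the function names, probabilities, and costs are all assumptions chosen for the example. It updates a prior belief that bright light is coming after a glimpse of sunlight, then decides whether to constrict the pupil by weighing the high cost of being dazzled against the low cost of constricting needlessly.

```python
def posterior_bright(prior_bright, p_glimpse_if_bright, p_glimpse_if_dim):
    """Bayes' rule: P(bright light soon | a glimpse of sunlight was seen)."""
    evidence = (p_glimpse_if_bright * prior_bright
                + p_glimpse_if_dim * (1.0 - prior_bright))
    return p_glimpse_if_bright * prior_bright / evidence


def should_constrict(p_bright, cost_dazzled=10.0, cost_needless=1.0):
    """Constrict when the expected cost of being dazzled outweighs
    the expected cost of constricting for nothing."""
    return p_bright * cost_dazzled > (1.0 - p_bright) * cost_needless


# All numbers are made-up assumptions, not measurements.
p = posterior_bright(prior_bright=0.2,
                     p_glimpse_if_bright=0.9,
                     p_glimpse_if_dim=0.3)
print(round(p, 3), should_constrict(p))
```

With these assumed numbers, the belief that bright light is coming stays below 50 percent, yet constricting is still the better bet because being dazzled is so much costlier. That is the sense in which what matters is not that something is true, but that it is probable.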

It’s not just light and darkness where our visual systems need to make guesses, either. Think about a game of tennis, where the ball is traveling at high speed. Were we to base our behavior wholly on what the visual system is receiving at any given moment, we would lag behind reality and fail to return the ball. “We are able to perceive the present although we are really stuck in the past,” Laeng said. “The only way to do it is by predicting the future. It sounds a bit like a word game, but that is it in a nutshell.”
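The tennis example amounts to compensating for a processing delay by extrapolating forward. As a minimal sketch, assuming a constant-velocity ball and an illustrative 100-millisecond lag (both assumptions for the example, not figures from Laeng or the study), the idea looks like this:

```python
def estimate_velocity(previous_position, current_position, dt):
    """Velocity estimated from two successive (already delayed) sightings."""
    return (current_position - previous_position) / dt


def predict_position(seen_position, velocity, latency):
    """Linear extrapolation: where the ball is now, given that the
    sighting is `latency` seconds old."""
    return seen_position + velocity * latency


# Hypothetical values: positions in metres along the court, times in seconds.
velocity = estimate_velocity(previous_position=9.0, current_position=10.0, dt=0.04)
predicted = predict_position(seen_position=10.0, velocity=velocity, latency=0.1)
print(velocity, predicted)
```

A system that acted on the sighting alone would swing at a spot the ball had already left; one that extrapolates is aiming at where the ball is likely to be.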

Machine vision is getting better

So what does this have to do with computer vision? Potentially everything. For a robot to function effectively in the real world, for instance, it needs to be able to make these kinds of adjustments on the fly. Computers have an advantage when it comes to their ability to perform extremely fast computations. What they don’t have is millions of years of evolution on their side.

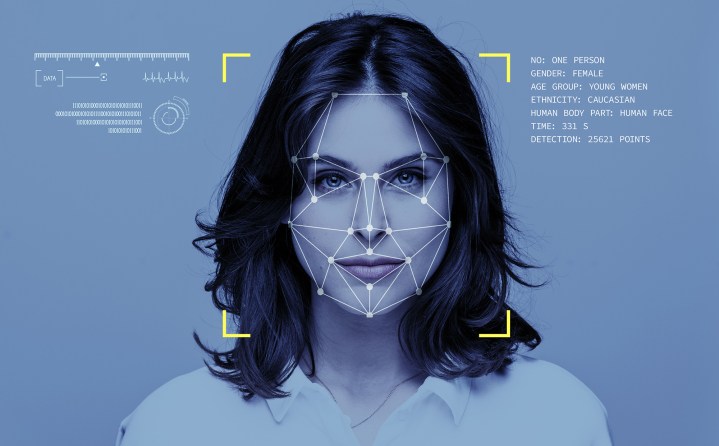

In recent years, machine vision has nonetheless made enormous strides. Systems can now identify faces or gaits in real-time video streams, potentially even in vast crowds of people. Similar image classification tools can recognize the presence of other objects, too, while breakthroughs in object segmentation make it possible to better understand the content of different scenes. There has also been significant progress in extrapolating 3D information from 2D images, allowing machines to “read” properties such as depth from a flat scene. This takes modern computer vision closer to human image perception.

However, there still exists a gulf between the best machine vision algorithms and the kinds of vision-based capabilities the overwhelming majority of humans are able to carry out from a young age. While we can’t articulate exactly how we perform these vision-based tasks (to quote the Hungarian-British polymath Michael Polanyi, “we can know more than we can tell”), we are nonetheless able to perform an impressive array of tasks that allow us to harness our eyesight in a variety of smart ways.

A Turing Test for machine vision

If researchers and engineers hope to create computer vision systems that operate at least on par with the visual processing skills of the wetware brain, building algorithms that can understand optical illusions is not a bad starting point. At the very least, it could prove a good way of measuring how well machine vision systems operate compared to our own brains. It may not be the answer to the mythical Artificial General Intelligence, but it might be the key to unlocking General Vision.

“If someone would develop, one day, an artificial visual system that commits the same illusory perception errors that we do, you would know at this point that they’re [achieving] a good simulation of how our brain works,” Laeng said. “It would be a sort of Turing Test. If you have an artificial network that is fooled by illusion as we are, then we [would be] very close to understanding the underlying computation of the brain itself.”

Yi-Zhe Song, reader in Computer Vision and Machine Learning at the Centre for Vision, Speech and Signal Processing at the U.K.’s University of Surrey, agrees with the hypothesis. “Asking vision algorithms to understand optical illusions as a general topic is of great value to the community,” he told Digital Trends. “It goes beyond the current community focus of asking machines to [recognize], by pushing the envelope further [and] asking machines to reason. This push [would represent] a significant step forward towards ‘General Vision,’ where subjective interpretations of visual concepts need to be accommodated for.”

Use your illusion

To date, there has been some limited research toward this goal — although it remains at a relatively early stage. Nasim Nematzadeh, a researcher who holds a Ph.D. in Artificial Intelligence and Robotics-Low-level vision models, is one person who has published work on this topic.

“We believe that further exploration of the role of simple Gaussian-like models in low-level retinal processing and Gaussian kernel in early stage [deep neural networks], and its prediction of loss of perceptual illusion, will lead to more accurate computer vision techniques and models,” Nematzadeh told Digital Trends. “[This could] contribute to higher level models of depth and motion processing and generalized to computer understanding of natural images.”
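To give a flavor of what a “simple Gaussian-like model” of low-level processing can look like, here is a minimal one-dimensional difference-of-Gaussians sketch. It is not Nematzadeh’s model: the function names, kernel widths, and test signal are assumptions picked for illustration. A narrow “center” blur minus a wide “surround” blur is a common textbook approximation of retinal center-surround filtering, and applied to a plain step from dark to light it undershoots on the dark side of the edge and overshoots on the bright side.

```python
import math


def gaussian_kernel(sigma, radius):
    """Normalized 1D Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(x * x) / (2.0 * sigma * sigma))
               for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur(signal, kernel):
    """Same-length filtering with a symmetric kernel, clamping at the borders."""
    radius = len(kernel) // 2
    last = len(signal) - 1
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), last)
            acc += w * signal[j]
        out.append(acc)
    return out


def difference_of_gaussians(signal, sigma_center=1.0, sigma_surround=3.0, radius=9):
    """Narrow blur minus wide blur: a center-surround style response."""
    center = blur(signal, gaussian_kernel(sigma_center, radius))
    surround = blur(signal, gaussian_kernel(sigma_surround, radius))
    return [c - s for c, s in zip(center, surround)]


# A step edge: 20 dark samples followed by 20 bright samples.
step = [0.0] * 20 + [1.0] * 20
response = difference_of_gaussians(step)
print([round(r, 2) for r in response[16:24]])
```

The filter reports contrast at the boundary that the flat input does not physically contain on either side of it, and stays near zero in the uniform regions. Exaggerations of this kind are one reason such simple models are studied as candidate explanations for some illusions.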

Max Williams, an AI researcher who helped compile a dataset of thousands of optical illusion images for computer vision systems, puts the relationship between general vision and optical illusions most succinctly: “Illusions exist because our eyes and brains are performing a messy and ad-hoc process to extract a visual scene from an otherwise incomprehensible light field, created by a physical world which we are almost completely sealed off from,” they told Digital Trends. “I don’t think it’s possible to make a visual system expressive enough to be considered ‘perception’ which is also free from illusions.”

Achieving General Vision

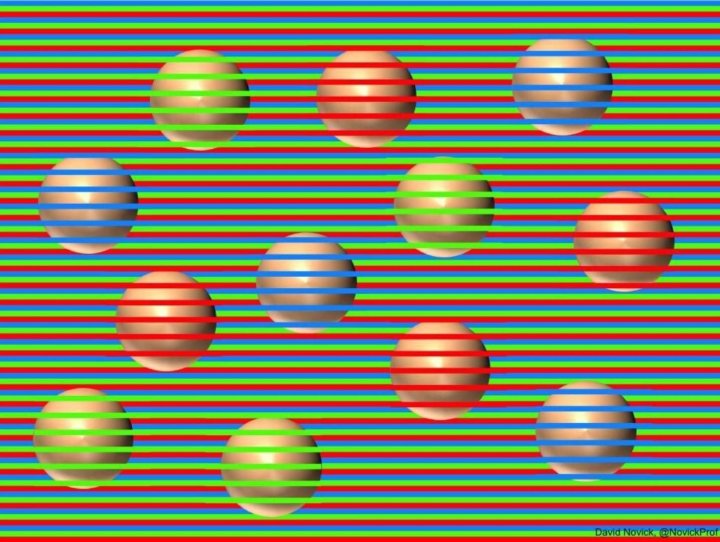

To be clear, achieving human-level (or better) General Vision for AI isn’t simply going to be a matter of training systems to recognize standard optical illusions. No hyper-specific ability to, say, decode Magic Eye illusions with 99.9% accuracy in 0.001 seconds is going to substitute for millions of years of human evolution.

(Interestingly, machine vision systems already have their own version of optical illusions in the form of adversarial examples: inputs crafted to fool a model, which in one alarming illustration led a classifier to mistake a 3D-printed toy turtle for a rifle. However, these do not yield the same evolutionary benefits as the optical illusions which work on humans.)

Still, getting machines to understand human optical illusions, and respond to them in the way that we do, could be very useful research.

And one thing’s for sure: When General Vision AI is achieved, it’ll fall for the same kinds of optical illusions as we do. Or at least, in the case of the Illusory Expanding Hole, as 86% of us do.