ChatGPT is exploding, and the backbone of its AI model relies on Nvidia graphics cards. One analyst said around 10,000 Nvidia GPUs were used to train ChatGPT, and as the service continues to expand, so does the need for GPUs. Anyone who lived through the rise of crypto in 2021 can smell a GPU shortage on the horizon.

I’ve seen a few reporters build that exact connection, but it’s misguided. The days of crypto-driven GPU shortages are behind us. Although we’ll likely see a surge in demand for graphics cards as AI continues to boom, that demand isn’t directed toward the best graphics cards installed in gaming rigs.

Why Nvidia GPUs are built for AI

First, we’ll address why Nvidia GPUs are so well suited to AI. Two things stand out: CUDA and Tensor cores.

CUDA is Nvidia’s parallel computing platform and Application Programming Interface (API), used in everything from its most expensive data center GPUs to its cheapest gaming GPUs. CUDA acceleration is supported in machine learning libraries like TensorFlow, vastly speeding up training and inference. CUDA is a major reason AMD is so far behind Nvidia in AI.

Don’t confuse CUDA with Nvidia’s CUDA cores, however. CUDA is the platform that a ton of AI apps run on, while CUDA cores are just the cores inside Nvidia GPUs. They share a name, and CUDA cores are better optimized to run CUDA applications. Nvidia’s gaming GPUs have CUDA cores and they support CUDA apps.

Tensor cores are basically dedicated AI cores. They handle matrix multiplication, which is the secret sauce that speeds up AI training. The idea here is simple: multiply many sets of numbers at once, and you train AI models far faster than you could by working through them one at a time. Most processors handle these calculations one after another, while a Tensor core can multiply and add a whole block of a matrix in a single clock cycle.
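To make the matrix math concrete, here is a minimal Python sketch of the kind of operation a Tensor core performs on one small tile: multiply two matrices and add a third. It is a software illustration only. The function names and the 4x4 tile size are assumptions chosen for the example, and real hardware does this in reduced precision in dedicated circuitry rather than in loops.

```python
# Illustrative only: a software model of the tile operation D = A x B + C.
# The 4x4 tile size and the helper names are assumptions for this example.

def tile_mma(a, b, c):
    """Return a x b + c for square tiles given as lists of rows."""
    n = len(a)
    return [
        [sum(a[i][k] * b[k][j] for k in range(n)) + c[i][j] for j in range(n)]
        for i in range(n)
    ]

def scalar_multiply_adds(n):
    """Count the multiply-add steps needed when done one at a time."""
    return n * n * n

identity = [[1 if i == j else 0 for j in range(4)] for i in range(4)]
a = [[i * 4 + j for j in range(4)] for i in range(4)]
c = [[1] * 4 for _ in range(4)]

d = tile_mma(a, identity, c)    # a x I + c, so every entry of a grows by 1
print(d[0])                     # first row of the result
print(scalar_multiply_adds(4))  # steps a one-at-a-time processor would take
```

A processor working through the tile one multiply-add at a time needs 64 steps for a single 4x4 block, which is the work a dedicated matrix unit is designed to collapse.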

Again, Nvidia’s gaming GPUs like the RTX 4080 have Tensor cores (and sometimes even more than costly data center GPUs). However, for all of the specs Nvidia cards have to accelerate AI models, none of them are as important as memory. And Nvidia’s gaming GPUs don’t have a lot of memory.

It all comes down to memory

“Memory size is the most important,” according to Jeffrey Heaton, author of several books on artificial intelligence and a professor at Washington University in St. Louis. “If you do not have enough GPU

Heaton, who has a YouTube channel dedicated to how well AI models run on certain GPUs, noted that CUDA cores are important as well, but memory capacity is the dominant factor when it comes to how a GPU functions for AI. The RTX 4090 has a lot of memory by gaming standards — 24GB of GDDR6X — but very little compared to a data center-class GPU. For instance, Nvidia’s latest H100 GPU has 80GB of HBM3 memory, as well as a massive 5,120-bit memory bus.

You can get by with less, but you still need a lot of memory. Heaton recommends beginners have no less than 12GB, while a typical machine learning engineer will have one or two 48GB professional Nvidia GPUs. According to Heaton, “most workloads will fall more in the single A100 to eight A100 range.” Nvidia’s A100 GPU comes with 40GB of memory, or 80GB in its larger configuration.
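To put those capacities in perspective, here is a rough back-of-the-envelope sketch of how large a model each card could train. It rests on an assumption that does not come from Heaton: a common rule of thumb of about 16 bytes of GPU memory per parameter for mixed-precision training with the Adam optimizer, ignoring activations and the training data itself. Real limits will be lower.

```python
# Rough estimate only. The 16 bytes/parameter figure is an assumption
# (fp16 weights and gradients plus fp32 Adam optimizer state); activations
# and batches of training data are ignored, so real limits are lower.
BYTES_PER_PARAM_TRAINING = 16
GB = 10**9

def max_trainable_params(memory_gb, bytes_per_param=BYTES_PER_PARAM_TRAINING):
    """Largest parameter count whose training state fits in memory_gb."""
    return memory_gb * GB // bytes_per_param

cards = {
    "12GB beginner card": 12,
    "RTX 4090 (24GB)": 24,
    "A100 (40GB)": 40,
    "48GB professional card": 48,
    "H100 (80GB)": 80,
}

for name, memory_gb in cards.items():
    billions = max_trainable_params(memory_gb) / 1e9
    print(f"{name}: roughly {billions:.2f}B parameters")
```

Under that assumption, a 24GB gaming card tops out around 1.5 billion parameters, while an 80GB data center card reaches about 5 billion before any memory is spent on activations.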

You can see this scaling in action, too. Puget Systems shows a single A100 with 40GB of memory performing around twice as fast as a single RTX 3090 with its 24GB of memory. And that’s despite the fact that the RTX 3090 has about 50% more CUDA cores and nearly as many Tensor cores.

Memory is the bottleneck, not raw processing power. That’s because training AI models relies on large datasets, and the more of that data you can store in memory, the faster (and more accurately) you can train a model.

Different needs, different dies

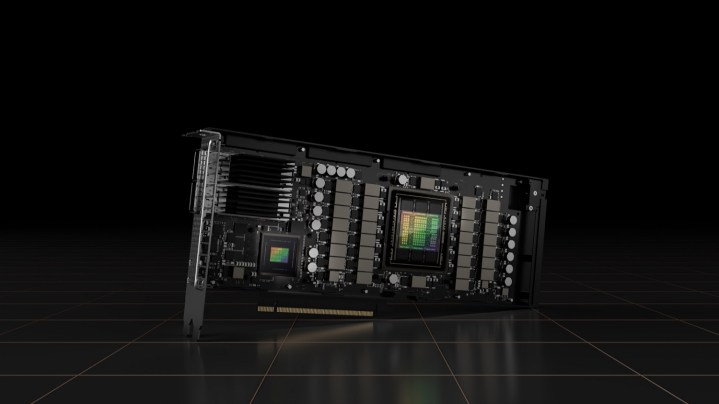

Nvidia’s gaming GPUs generally aren’t suitable for AI due to how little video memory they have compared to enterprise-grade hardware, but there’s a separate issue here as well. Nvidia’s workstation GPUs don’t usually share a GPU die with its gaming cards.

For instance, the A100 that Heaton referenced uses the GA100 GPU, which is a die from Nvidia’s Ampere range that was never used on gaming-focused cards (including the high-end RTX 3090 Ti). Similarly, Nvidia’s latest H100 uses a completely different architecture than the RTX 40-series, meaning it uses a different die as well.

There are exceptions. Nvidia’s AD102 GPU, which is inside the RTX 4090, is also used in a small range of Ada Lovelace enterprise GPUs (the L40 and RTX 6000). In most cases, though, Nvidia can’t just repurpose a gaming GPU die for a data center card. They’re separate worlds.

There are some fundamental differences between the GPU shortage we saw due to crypto-mining and the rise in popularity of AI models. According to Heaton, the GPT-3 model required over 1,000 Nvidia A100 GPUs to train and about eight to run. These GPUs have access to the high-bandwidth NVLink interconnect as well, while Nvidia’s RTX 40-series GPUs don’t. It’s comparing a maximum of 24GB of memory on Nvidia’s gaming cards to multiple hundreds of gigabytes on GPUs like the A100 linked together with NVLink.
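The gap between 24GB and "multiple hundreds" of gigabytes is easy to sketch with some arithmetic. The snippet below counts how many cards it takes just to hold a model's weights. The 175 billion parameter figure for GPT-3 and the 2 bytes per parameter (16-bit weights) are assumptions brought in for illustration, not numbers from Heaton, and the function name is hypothetical.

```python
import math

# Assumptions for illustration: the publicly reported GPT-3 size of
# 175 billion parameters, stored as 16-bit (2-byte) weights.
GPT3_PARAMS = 175 * 10**9
BYTES_PER_FP16_WEIGHT = 2

def gpus_to_hold_weights(params, bytes_per_param, gpu_memory_gb):
    """Minimum number of GPUs whose combined memory fits the weights alone."""
    total_bytes = params * bytes_per_param
    return math.ceil(total_bytes / (gpu_memory_gb * 10**9))

for label, memory_gb in [("24GB gaming card", 24), ("40GB A100", 40), ("80GB A100", 80)]:
    count = gpus_to_hold_weights(GPT3_PARAMS, BYTES_PER_FP16_WEIGHT, memory_gb)
    print(f"{label}: at least {count} GPUs for the weights alone")
```

This counts weights only. Running a model also needs working memory, and the cards must be able to share data quickly, which is where NVLink comes in. That is why the estimate is a floor rather than a recipe for building a cluster out of gaming cards.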

There are some other concerns, such as memory dies being allocated for professional GPUs over gaming ones, but the days of rushing to your local Micro Center or Best Buy for the chance to find a GPU in stock are gone. Heaton summed that point up nicely: “Large language models, such as ChatGPT, are estimated to require at least eight GPUs to run. Such estimates assume the high-end A100 GPUs. My speculation is that this could cause a shortage of the higher-end GPUs, but may not affect gamer-class GPUs, with less
