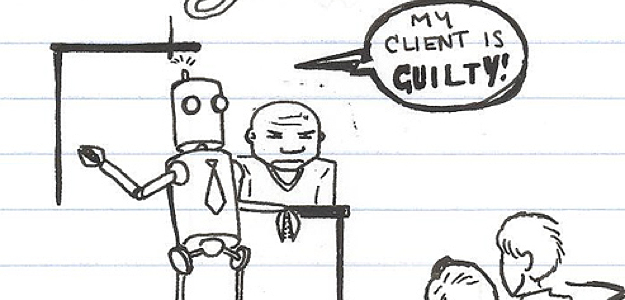

Prepare for the era of the robot cross-examination. A study at Mississippi State University found that the best way to combat the potential for leading the testimony of a witness during legal proceedings is to remove humans from the equation as much as possible. This can only mean one thing: Out with the lawyers – human lawyers, at least.

The study was led by Mississippi State’s Cindy Bethel. Her team showed 100 “witnesses” a slide show depicting a man who, while in a room under the pretext of fixing a chair next to a desk, steals money and a calculator from the desk drawer. After the slide show, the witnesses were split into four groups. Two groups were interviewed about what they had seen by a human, and the other two were interviewed by a robot remote-controlled by the researchers.

Four groups were needed rather than two because one group from each interviewer type, human and robot, was asked leading questions that introduced false information about the slide show, while the other two groups were simply asked about what they had seen. The group fed false information by the human interviewer scored roughly 40 percent lower on accurate recall, remembering objects that had not appeared in the slide show but had been mentioned by the interviewer. Surprisingly, the same questions delivered by the remote-controlled robot appeared to have no effect on the witnesses’ recall.

Bethel called the results “a very big surprise,” adding that the group fed false information by the robot “just were not affected by what the robot was saying. The scripts were identical. We even told the human interviewers to be as robotic as possible.”

What the study demonstrated, perhaps more clearly than Bethel had expected, is the misinformation effect, in which people’s memories are “nudged” in particular directions by the wording of the questions used to draw those memories out. Children are said to be particularly susceptible to this phenomenon, which is why the Starkville Police Department, just ten minutes from the university where Bethel ran her experiments, is reportedly interested in using a robotic interviewer for child testimony in the future. Mark Ballard, an officer at the department, said that officers work hard to stay as neutral as possible when questioning children and prefer to use certified child psychologists where they can. Still, a robot interviewer might eliminate accidental influence altogether.

“It’s tough to argue that the robot brings any memories or theories with it from its background,” he said.

Well, unless someone programs it to, of course.

Image via Tom-One
