The details of how Nvidia trained two test cars are in a paper titled End to End Learning for Self-Driving Cars. The basics are as follows: Nvidia used three cameras and two Nvidia DRIVE PX computers to watch humans drive cars for 72 hours. The training drives took place in several states, in varied weather conditions, day and night, and on a wide variety of road types and conditions. The cameras captured massive amounts of data, which was tracked and stored by the on-board GPUs. That data was then used to train a neural network with Torch 7, a machine learning framework. The output? A trained system for driving cars without driver intervention.

In subsequent tests on test roads and public roads (including highways), cars running the trained system achieved 98 to 100 percent autonomy. When a driver intervenes, the system continues to learn.

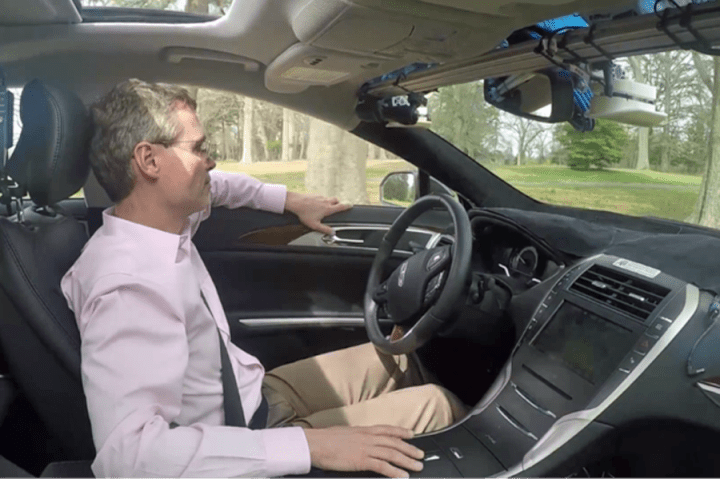

Only one camera and one computer are needed in a car based on Nvidia’s GPU system. Nvidia told Digital Trends that when you can eventually buy a car with this system, it will arrive ready to drive itself, so you won’t need to give it more training runs. The car drives itself, but when its camera detects something new (something it hasn’t “seen” before), it alerts the driver to take over and then switches into learning mode. Whatever it learns is later uploaded to the cloud and incorporated into the next software update, so every car using Nvidia’s self-driving system benefits from it. Nvidia has also published a video showing its GPU-based car training and performance.

When speaking with Digital Trends, a Nvidia spokesperson used the example of encountering a moose. The example works because coming upon a moose in the road is rare in most parts of the U.S. and apparently never happened during Nvidia’s training drives. So if I were driving a car with the Nvidia self-driving system and a moose was on or beside the road, the system would alert me to take over. The onboard system would watch what I did and record my inputs to the steering, brakes, and accelerator. My reactions would be transmitted to the cloud. After the next software update, if another driver encountered a moose, that car would know how to react based on my reactions.

This learn-by-watching method of training driverless vehicles is both more realistic and more comprehensive than chains of rules that programmers must write by hand to account for highly varied elements like road surfaces, lane markers, lights, and traffic conditions. The GPU-based system does the data gathering, and the machine learning system creates the rules.

Digital Trends was informed that Nvidia is now in conversations with more than 80 major OEMs (original equipment manufacturers) and research institutions. The one manufacturer Nvidia was able to speak about publicly is Volvo. Next year, Volvo’s Drive Me project in Gothenburg, Sweden, will use 100 Volvo XC90s equipped with the Nvidia system to observe how the system works on “a defined set of roads” in the Swedish city.

Driverless cars are coming and Nvidia’s system looks to be on pace to speed up the transition.
