AI is taking the media world by storm, and that’s not an exaggeration. This week, it was revealed that CNET and the venture capital firm that owns it, Red Ventures, have been using AI to generate content across the various brands the firm owns.

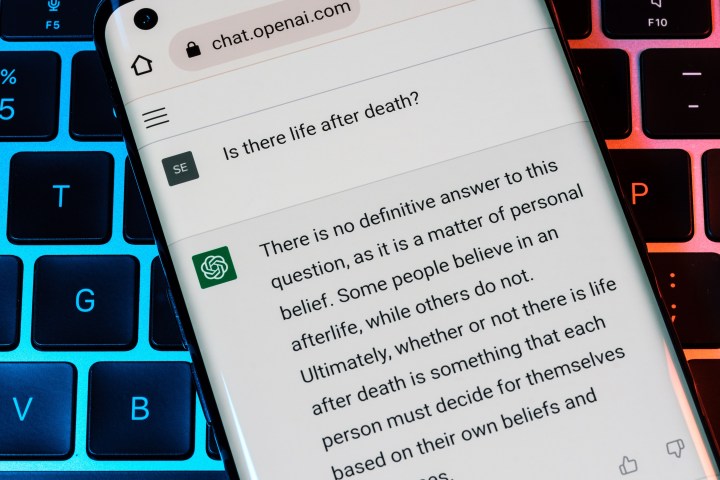

The story picked up, and journalists uncovered that these AI-generated articles were littered with inaccuracies and often incorrectly attributed to human writers. There’s a moral and philosophical question about AI generating content that mimics a human, but I wanted to go to the source and ask AI what it thought about writing articles. Its own response to the ethical dilemma shows that it’s not as confident in its own technology as some companies are.

ChatGPT says no

Before getting into the responses, all AI-generated content in this article will be in quotes, separated into its own paragraph, and include a note that it was generated by ChatGPT. Everything else comes from me.

ChatGPT is the forerunner in the world of AI chatbots right now, so I started off by asking it to react to CNET using AI in particular:

“I am an AI model, so I do not have personal opinions. However, I can tell you that using AI to generate articles can be a useful tool for media companies, as it can save time and resources. However, the quality and accuracy of the generated articles will depend on the data and algorithms used. It is important for media companies to have a process in place for fact-checking and editing the content generated by AI to ensure accuracy and integrity.” (Generated by ChatGPT).

That seems straightforward enough, and it mirrors the process that CNET has taken toward its AI-generated articles. Even if the fact-checking policy isn’t up to snuff, CNET still insists that its articles are reviewed and fact-checked by human editors. But I posed the more general question of “should ChatGPT be used to write articles” and got a slightly more detailed response:

“ChatGPT and other language models like it can be a useful tool for generating written content, but it should not be used as the sole source for creating articles. The quality and accuracy of the content generated by a language model like ChatGPT will depend on the data and algorithms used, and it is important to have a process in place for fact-checking and editing the content generated by the model to ensure accuracy and integrity.

It is also important to note that while ChatGPT can generate text that is coherent and grammatically correct, it may lack the depth and nuance that human writers bring to their work. It may also not be able to fully understand and interpret complex and nuanced information.

In summary, ChatGPT can be used as a tool to assist in the writing process, but it should not be used to replace human writers. It’s important to have human oversight and editing to ensure the accuracy and integrity of the content generated.” (Generated by ChatGPT).

That sounds fairly self-aware — an admission that ChatGPT itself lacks the nuance and depth to write articles on its own. But I had a feeling there was more missing than just nuance. After all, AI-generated content also lacks context, and that’s what I wanted to test out for myself.

Lacking nuance

In the case of CNET, the AI was being used to generate content surrounding credit cards, which is a topic that needs a lot of context in 2023. Interest rates are continuing to rise, and job growth is slowing. There’s an ethical dilemma around these finance topics that AI can’t account for and react to. Or, at the very least, AI can’t bring the human empathy needed to recognize that opening a new line of credit might not be the best idea under certain circumstances.

But I’m not a finance writer, so I might not even spot some of the mishandling of that context. I am, however, an expert in gaming PCs and

“The choice between an Nvidia or AMD

“Nvidia

“AMD

“When making your decision, it’s important to consider the specific needs of your application. If gaming is your primary focus, Nvidia

“It’s also important to check the compatibility of the card with your computer’s power supply and motherboard, and the supported resolution and refresh rate of your monitor. It’s always recommended to check the benchmarks, reviews and technical specifications of the models you are considering before making a purchase.” (Generated by ChatGPT).

At first blush, the response doesn’t seem too bad. Nvidia cards are more powerful overall, but AMD cards offer a better value. The devil is in the details here, though. For example, ChatGPT recommends an AMD

There are some critical elements missing, too. The response would lead you to believe that Nvidia

Perhaps that’s the nuance ChatGPT admits it lacks. And sure, you can see the bones of something accurate here and prod the copy into a more usable state. Still, it’s important to remember that none of this comes from a human, and the AI doesn’t care about inaccuracies (or anything, for that matter). It’s fine with being wrong, and I don’t want advice from a source like that.

To drive this point home, I asked ChatGPT one final question, whether the AMD RX 7900 XT or the RTX 4080 is better:

“As of 2021, the RTX 3080 is currently considered a better overall

Bluntly, that’s an asinine response — you can read our human-written best graphics cards roundup for context. But my point is that it’s not enough for an editor to go in and tweak some of the wording or fact-check a couple of claims. To the average person, it might sound sensible and well-written — but to someone knowledgeable on the topic, the reasoning and logic behind its answers are entirely unfounded. But hey, I’d expect no more from content generated by AI.
