Google’s latest update to its privacy policy appears to give the company free rein to scrape the web for any content that could help it build and improve its AI tools.

“Google uses information to improve our services and to develop new products, features, and technologies that benefit our users and the public,” the new Google policy says. “For example, we use publicly available information to help train Google’s AI models and build products and features like Google Translate, Bard, and Cloud AI capabilities.”

Gizmodo notes that the policy now says “AI models” where it previously said “for language models.” The policy also now names Bard and Cloud AI; previously, Google Translate was the only product it mentioned collecting data for.

The privacy policy, which was updated over the weekend, appears especially ominous because it indicates that any information you produce online is up for grabs for Google to use for training its AI models.

This wording does not seem limited to people who use Google’s products in one way or another. As written, it could give the company access to information from any part of the web.

The mass development of artificial intelligence raises major questions about privacy, plagiarism, and whether AI can provide accurate information. Early versions of chatbots such as ChatGPT were based on large language models (LLMs) trained on already public sources, such as the Common Crawl web archive, WebText2, Books1, Books2, and Wikipedia.

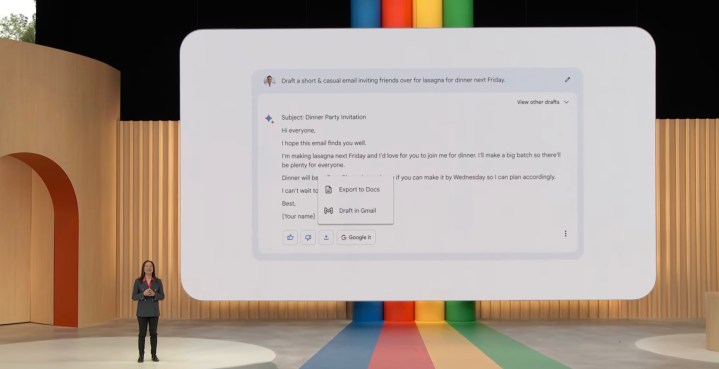

Early ChatGPT was infamous for knowing nothing about events after 2021 and for filling in its responses with false information. This may be one reason Google wants unfettered access to web data for tools such as Bard: to give its AI models real-world, and potentially real-time, training data.

Gizmodo also noted that Google could use this new policy to collect old but still human-generated content, such as long-forgotten reviews or blog posts, to get a sense of how human text and speech develop and spread. Still, it remains to be seen exactly how Google will use the data it collects.

Several social media platforms, including Twitter and Reddit, which are major sources of up-to-date information, have already limited public access to their content in the wake of AI chatbots’ popularity, to the chagrin of many of their users.

Both platforms have ended free access to their APIs, ostensibly to protect their intellectual property by stopping users from downloading massive numbers of posts for use elsewhere. In the process, the change broke many of the third-party tools that helped Twitter and Reddit run smoothly.

Both Twitter and Reddit have faced other setbacks and controversies as their owners’ concerns about AI taking over have grown.