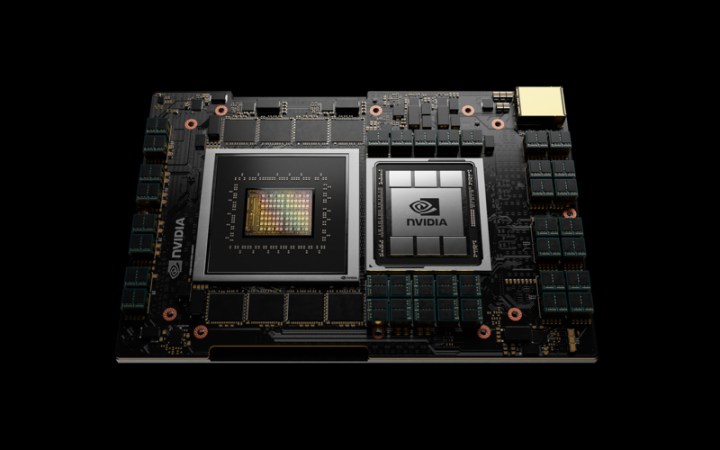

After a lot of speculation, Nvidia finally announced its powerful supercomputer CPU called Nvidia Grace at its annual GPU Technology Conference. Named after famed American computer scientist and computer programming pioneer Grace Hopper, Nvidia’s first data-center processor is based on architecture from ARM. The company claimed in a press session ahead of GTC that Nvidia Grace is capable of delivering “10x the performance of today’s fastest servers on the most complex A.I. and high-performance computing workloads.”

This is Nvidia’s first partnership with ARM since announcing its $40 billion acquisition of the company in 2020.

Unlike Apple’s ARM-based M1 processor, Nvidia Grace won’t be headed to a computer near you. Instead, it is designed for diverse applications like natural language processing, recommender systems, and A.I. supercomputing, Nvidia’s executives said. Nvidia Grace has been adopted by both the Swiss National Supercomputing Centre (CSCS) and the U.S. Department of Energy’s Los Alamos National Laboratory to help power scientific research.

The company claimed that the volume of data and size of models used to train A.I. systems are growing exponentially, and the largest models are doubling every two-and-a-half months. To help reduce the communication bottleneck between the CPU and the GPU, Grace employs Nvidia’s NVLink interconnect technology, allowing large chunks of data to move between system memory, the CPU, and the GPU.
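To put the doubling claim in perspective, here is a minimal sketch of the compound growth it implies. It assumes the two-and-a-half-month doubling period holds steady, which is a simplification rather than something Nvidia stated, and the function name is purely illustrative.

```python
def growth_factor(months: float, doubling_period_months: float = 2.5) -> float:
    """Return how many times larger a model gets after `months`,
    assuming its size doubles every `doubling_period_months`."""
    return 2.0 ** (months / doubling_period_months)


# Assumption: the 2.5-month doubling rate stays constant over the whole period.
for months in (2.5, 5, 12):
    print(f"After {months} months: {growth_factor(months):.1f}x larger")
```

Under that assumption, a model would be roughly 28 times larger after a single year.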

By tightly coupling the CPU and GPU inside Grace’s architecture, Nvidia stated that this processor will be seven times as fast on natural language training as Nvidia’s Selene supercomputer. Selene delivers 2.8 A.I. exaflops of performance and is arguably one of the world’s fastest supercomputers for artificial intelligence applications today.


And compared to traditional x86 server processors, like those made by rival Intel, Nvidia claimed that Grace is 10 times faster.

“A Grace-based system will be able to train a one trillion parameter NLP model 10x faster than today’s state-of-the-art NVIDIA DGX™-based systems, which run on x86 CPUs,” the company added. This means that data that would have taken a month to analyze would now take just three days with Grace.
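The month-to-three-days figure follows directly from the claimed 10x speedup. The short sketch below works through that arithmetic. It assumes a 30-day month and takes Nvidia’s 10x figure at face value; the function name is hypothetical.

```python
def time_after_speedup(baseline_days: float, speedup: float) -> float:
    """Return the run time in days once a job is `speedup` times faster."""
    return baseline_days / speedup


baseline_days = 30.0   # assumption: "a month" is treated as 30 days
claimed_speedup = 10.0  # Nvidia's claimed figure, not an independent measurement

grace_days = time_after_speedup(baseline_days, claimed_speedup)
print(f"{baseline_days:.0f} days on the baseline system -> {grace_days:.0f} days with Grace")
```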

Powering this performance are ARM’s next-generation Neoverse cores alongside Nvidia’s fourth-generation NVLink interconnect, which has a bandwidth of 900 GB/s between the CPU and GPU. Grace will also use LPDDR5X memory, which is faster and more energy-efficient than DDR4 memory.

“It combines energy-efficient ARM CPU cores with an innovative low-power memory subsystem to deliver high performance with an energy-efficient design,” the company said.

Nvidia Grace will be used by Hewlett Packard Enterprise in its Alps supercomputer, which will become available in 2023.

And BlueField 3 for data centers too

In addition to Nvidia Grace, the company also took the wraps off its data center processor called BlueField 3. Referred to as a DPU, or data processing unit, BlueField 3 is built for A.I. and accelerated computing and is optimized for “multi-tenant, cloud-native environments, offering software-defined, hardware-accelerated networking, storage, security, and management services at data-center scale.”

The DPU delivers the equivalent of 300 cores to power data center services, which can free up the CPU to run other applications.

“Modern hyperscale clouds are driving a fundamental new architecture for data centers,” said Nvidia CEO Jensen Huang. “A new type of processor, designed to process data center infrastructure software, is needed to offload and accelerate the tremendous compute load of virtualization, networking, storage, security, and other cloud-native A.I. services. The time for BlueField DPU has come.”

Nvidia claims that BlueField 3 delivers 10x the performance of the prior-generation BlueField 2. The DPU is powered by 16 Arm A78 cores and has four times the acceleration for cryptography. The chip is the first 400GbE/NDR DPU, Nvidia claimed, and it supports PCIe 5.0.

Nvidia also built in network security to allow the DPU to detect and respond to cyber threats.

BlueField 3 is expected to become available in early 2022.
