High-end gaming has been fixated on DirectX 11 technology lately, but that doesn’t mean OpenGL is out of the picture: the Khronos Group has just announced the OpenGL 4.0 specification, the first major update to the open graphics standard since the launch of OpenCL in late 2008. OpenGL 4 builds on the work of OpenCL by enabling modern applications to tap into the computing power of graphics processors, boosting performance and freeing up a computer’s main processor for other tasks. In addition, the OpenGL 4 standard includes support for hardware-accelerated geometry tessellation (essentially, subdividing surfaces into finer geometric detail on the GPU), can render content and apply shaders with 64-bit double-precision floating-point accuracy, and sports improved shaders for better rendering quality and antialiasing capability.

“The release of OpenGL 4.0 is a major step forward in bringing state-of-the-art functionality to cross-platform graphics acceleration, and strengthens OpenGL’s leadership position as the epicenter of 3D graphics on the web, on mobile devices as well as on the desktop,” said OpenGL ARB working group chair (and Nvidia’s Core OpenGL senior manager) Barthold Lichtenbelt, in a statement.

Not all existing hardware will be able to handle OpenGL 4 capabilities, so Khronos has also released an OpenGL 3.3 specification that brings as much OpenGL 4 functionality as possible to existing GPU hardware. Nvidia says its forthcoming Fermi-based graphics systems will fully support OpenGL 4.0.

The advantage of OpenGL from a developer’s point of view is that applications targeting the OpenGL 3D graphics platform can be deployed across a broad range of devices: from high-end desktop systems down to mobile devices like the iPhone via OpenGL ES, and potentially even to Web browsers via WebGL. WebGL aims to bring OpenGL ES 2.0 to Web browsers through the HTML5 Canvas element, and it is backed by Mozilla, Opera, Apple, and Google.

Editors' Recommendations

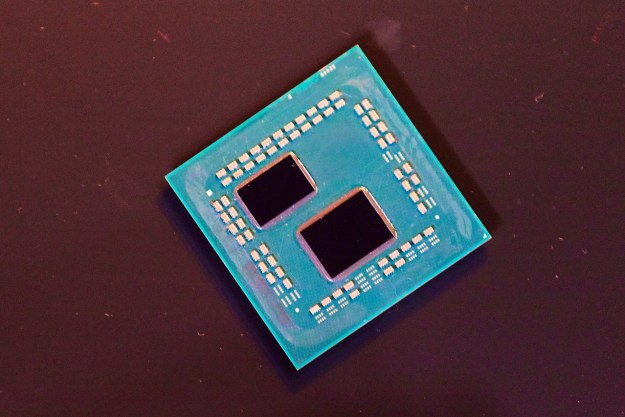

- 4 CPUs you should buy instead of the Ryzen 7 7800X3D

- AMD’s Ryzen 9 7950X3D pricing keeps the pressure on Intel

- What is DirectX, and why is it important for PC games?

- AMD, please don’t make the same mistake with the Ryzen 7 7700X3D

- Intel drops support for DirectX 9, but it may be a good thing