It turns out that a similar idea can be applied to robots.

In a new piece of research, presented at the recent 2017 International Conference on Robotics and Automation (ICRA), engineers from Google and Carnegie Mellon University demonstrated that a robot learns to grasp objects more robustly if another robot tries to snatch them away while it is doing so.

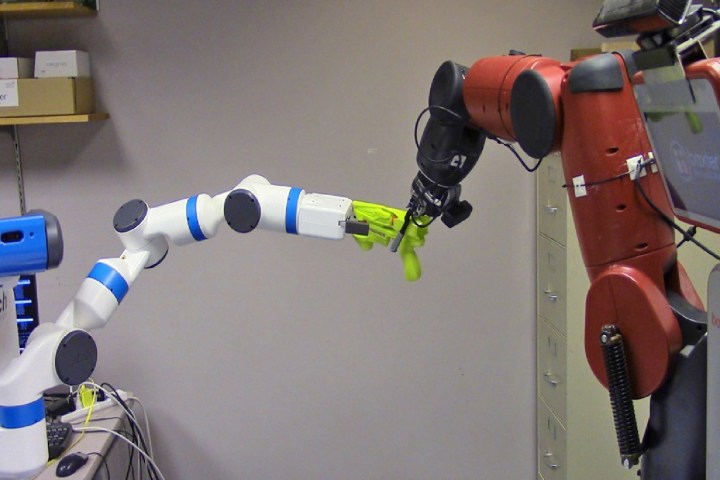

When one robot is given the task of picking up an object, the researchers have its evil twin (not that they used those words exactly) attempt to grab the object from it. If the object isn’t properly held, the rival robot succeeds in its snatch-and-grab effort. Over time, the first robot learns to hold onto its object more securely, and it does so far faster than it would by working this out on its own.

“Robustness is a challenging problem for robotics,” Lerrel Pinto, a PhD student at Carnegie Mellon’s Robotics Institute, told Digital Trends. “You ideally want a robot to be able to transfer what it has learnt to environments that it hasn’t seen before, or even be stable to risks in the environment. Our adversarial formulation allows the robot to learn to adapt to adversaries, and this could allow the robot to work in new environments.”

The work uses deep learning technology, as well as insights from game theory: the mathematical study of conflict and cooperation, in which one party’s gain can mean the other party’s loss. In this case, a successful grab from the rival robot is recorded as a failure for the robot it grabbed the object from — which triggers a learning experience for the loser. Over time, the robots’ tussles make each of them smarter.
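To make the zero-sum bookkeeping concrete, here is a toy sketch in Python. It is not the researchers' code, and it involves no deep learning: the names `score_trial` and `run_trials`, the single "firmness" number, and the fixed learning step are all assumptions invented for illustration. It only shows the idea that a successful snatch counts as a failure for the grasping robot, and that each failure nudges it toward a firmer grip.

```python
from dataclasses import dataclass


@dataclass
class TrialOutcome:
    """Rewards for one grasp attempt (hypothetical structure)."""
    protagonist_reward: float
    adversary_reward: float


def score_trial(grasp_held: bool) -> TrialOutcome:
    """Zero-sum scoring: whatever the adversary gains, the grasper loses."""
    protagonist = 1.0 if grasp_held else -1.0
    return TrialOutcome(protagonist, -protagonist)


def run_trials(firmness: float, snatch_strengths: list[float], step: float = 0.1):
    """Toy stand-in for learning: each lost object makes the grip firmer.

    `firmness` and `snatch_strengths` are made-up scalar quantities,
    not anything measured in the actual research.
    """
    history = []
    for strength in snatch_strengths:
        held = firmness >= strength       # the grasp survives a weaker snatch
        history.append(score_trial(held))
        if not held:
            firmness += step              # a failure triggers a learning update
    return firmness, history


if __name__ == "__main__":
    final_firmness, history = run_trials(0.5, [0.9, 0.9, 0.3])
    for i, outcome in enumerate(history, start=1):
        print(i, outcome.protagonist_reward, outcome.adversary_reward)
    print("final firmness:", round(final_firmness, 2))
```

In this toy version only the grasping robot adapts. In the setup the article describes, the rival improves as well, which is what keeps the contest challenging.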

That sounds like progress — just as long as the robots don’t eventually form a truce and target us with their adversarial AI, we guess!
