Stiffs are also expensive to care for—they have to be refrigerated, which requires special equipment and trained staff. Heap on a little bad publicity about the organ-donor trade and you’ve got an environment Dr. Frankenstein would find a challenge.

So to solve the shortage of real dead folks, anatomists decided to create virtual ones.

An early pioneer of such virtual simulation was Norman Eizenberg, now an associate professor at the Department of Anatomy and Developmental Biology at Monash University in Australia. More than 20 years ago, he began collecting dissection data and storing it in electronic form. That resulted in the creation of Anatomedia’s virtual dissection software, which tremendously sped up the traditional process of learning human anatomy.

According to Eizenberg, the ratio of students to cadavers at his school is 80 to one, and slicing up a stiff is a slow, meticulous process.

“You can’t just take a knife and fork and start cutting. You need to clear away fat, clear away fibers—all the tissues that hold us together.”

And that’s just the start; others want to push it even further. Robert Rice is a former NASA consultant who built virtual astronauts for the agency and holds a Ph.D. in anatomy, while Peter Moon is the CEO of Baltech, a sensing and simulation technology company in Australia. They want to create a 3-D virtual human whose anatomy students will be able to not only see but actually feel. And they’re not talking about a plastic imitation body made from synthetic tissues. They’re talking about a haptic, computerized human model that aspiring medics will be able to slice away at on a computer screen while experiencing the sensation of cutting through the skin, pushing away fat and uncovering blood vessels. Moon calls it “putting technologies and innovation together to create a new norm.”

How does one build a tactile experience for dissecting a human body on a flat piece of glass?

The idea may sound far out, but each and every one of us is already using haptic electronic devices—the touchscreens on our smartphones and tablets that vibrate when we type a phone number or text a friend. That glass can respond to your taps only in a simple way—it can’t convey the flexibility or density of what you’re touching. But other, more advanced and sophisticated haptic devices can do that, and they already exist. Such devices can create the sense of touch by applying forces, vibrations or motions to their user. This mechanical stimulation helps create haptic virtual objects (HVOs) in a computer simulation.

With the help of the tactile device as an intermediary, the users can manipulate HVOs on the screen while experiencing them as if they’re real. The concept is similar to the flight simulator a student pilot may use, where simple controls such as a joystick let her fly a virtual plane. The haptic human will be far more sophisticated, allowing student doctors to perform virtual dissections and surgeries.

“We’ll offer multi-touch, both-hands haptics which invokes the remarkable human sense of touch, sensitivity and meaning,” Rice says.

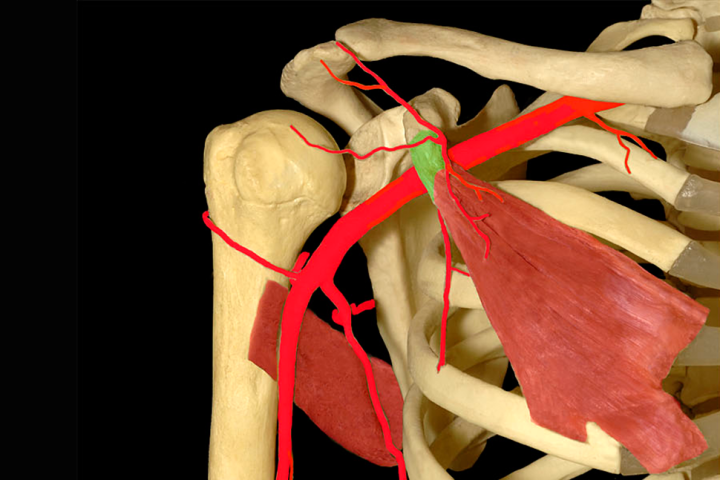

He has already laid out a roadmap to the haptic human. Anatomedia has the database of photos and scans depicting various body parts, bones, muscles and tissues. Using a haptic programming language such as H3DAPI, an open source software development platform, programmers can assign tactile qualities to the Anatomedia objects and make them respond to the movements of the student’s virtual scalpel just as they would in real life. Such tactile qualities can be stiffness, deformability or various textures.

“You will feel the texture of skin, the firmness of an athletic muscle or the flabbiness of belly fat, the rigidity of your bony elbow or the pulsatile flow of blood at your wrist pulse point,” says Rice. All of the physical properties that exist in the world are built into the haptic programming language.
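To make the idea of "assigning tactile qualities" concrete, here is a minimal Python sketch of one common haptic-rendering approach: a spring-like penalty force, where a stiffer material pushes back harder for the same probe depth. It is not H3DAPI code and not the IHAVIT team's method. The `TissueMaterial` class, the `reaction_force` function and the stiffness numbers are all hypothetical placeholders chosen for illustration, not measured tissue properties.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TissueMaterial:
    """A virtual tissue with one tactile quality: stiffness."""
    name: str
    stiffness_n_per_m: float  # illustrative placeholder, not measured data


# Hypothetical values: only the ordering (fat < muscle < bone) matters here.
FAT = TissueMaterial("belly fat", 200.0)
MUSCLE = TissueMaterial("athletic muscle", 800.0)
BONE = TissueMaterial("elbow bone", 5000.0)


def reaction_force(material: TissueMaterial, penetration_m: float) -> float:
    """Return the push-back force in newtons for a given probe depth.

    Uses a simple spring model (force = stiffness * depth). A probe that
    is not touching the tissue (depth <= 0) feels no force.
    """
    if penetration_m <= 0:
        return 0.0
    return material.stiffness_n_per_m * penetration_m


if __name__ == "__main__":
    depth = 0.002  # the virtual scalpel presses 2 mm into each tissue
    for tissue in (FAT, MUSCLE, BONE):
        print(f"{tissue.name}: {reaction_force(tissue, depth):.2f} N")
```

A haptic device would recompute a force like this many times per second and send it to its motors, which is what lets flabby fat and rigid bone feel different under the same virtual blade.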


Rice and Moon titled their virtual human software Interactive Human Anatomy Visualization Instructional Technology—or simply IHAVIT.

So when can we expect the computer cadavers to replace the real ones, making the anatomy labs obsolete? Once the project is funded, says Rice, it would take about three to four years to program such a virtual human. But the task requires a significant investment, more than a typical IndieGoGo campaign can amass. “If we do the human arm as a proof of concept,” Rice says, “we’re looking for a budget of three quarters of a million dollars and we would deliver it in 12 months.”

To build the rest would take 36 to 48 months, Rice estimates, and would cost $15 million as a ballpark figure, with a state-of-the-art version adding up to $24 million. If it sounds like a lot, dig this: to run a mid-size cadaver lab costs a medical school about $3 to $4 million a year. If a handful of medical schools pitch in for the idea, in a few years they’ll be saving those millions. And they would no longer have to deal with dead-people problems such as shipping, preserving, returning and cremating. “It would probably reduce the overall cost of medical education,” Rice says.
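The arithmetic behind that pitch can be sketched in a few lines of Python. The dollar figures come from Rice's estimates above. The number of schools (five, standing in for "a handful") and the assumption that each school would fully retire its cadaver lab are illustrative guesses, and the function names are made up for this example.

```python
def share_per_school(build_cost_musd: float, schools: int) -> float:
    """Each school's share of the build cost, in millions of dollars."""
    return build_cost_musd / schools


def payback_years(build_cost_musd: float, schools: int,
                  lab_cost_per_year_musd: float) -> float:
    """Years of avoided cadaver-lab spending needed to cover the build cost.

    Assumes every participating school saves its full yearly lab budget,
    which is an optimistic simplification.
    """
    yearly_savings = schools * lab_cost_per_year_musd
    return build_cost_musd / yearly_savings


if __name__ == "__main__":
    schools = 5  # hypothetical size of "a handful"
    for label, cost in (("ballpark", 15.0), ("state-of-the-art", 24.0)):
        for lab_cost in (3.0, 4.0):
            years = payback_years(cost, schools, lab_cost)
            print(f"{label} build at ${cost:.0f}M, labs at ${lab_cost:.0f}M/yr: "
                  f"${share_per_school(cost, schools):.1f}M per school, "
                  f"paid back in {years:.2f} years")
```

Under these assumptions, even the $24 million version pays for itself in less than two years of avoided lab costs, though that leaves out the three to four years it would take to build.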

But the team has yet to find an investor to back the project. That would take someone like Elon Musk, Bill Gates, Mark Cuban or Mark Zuckerberg, Rice says. “The individual needs to be a champion of our opportunity to integrate advanced technology with traditional healthcare,” he says. “We need to touch the mind and heart of an investor inspired to support this opportunity.”