There’s a nightmarish scene in Guillermo del Toro’s 2006 movie Pan’s Labyrinth in which we are confronted by a sinister humanoid creature called the Pale Man. With no eyes in his monstrous, hairless head, the Pale Man, who resembles an eyeless Voldemort, sees with the aid of eyeballs embedded in the palms of his hands. Using these ocular-augmented appendages, which he holds up in front of his eyeless face like glasses, the Pale Man is able to visualize and move through his surroundings.

This describes, to a degree, work being carried out by researchers at the U.K.’s Bristol Robotics Laboratory, albeit without the whole terrifying body horror aspect. Only in their case, the Pale Man substitute doesn’t simply have one eyeball in the palm of each hand; he’s got one on each finger.

“In the last sort of four or five years, there’s been a change that has happened in the field of tactile sensing and robotics [in the form of] a move towards using cameras for sensors,” professor Nathan Lepora, who leads the 15-member Tactile Robotics Research Group for the Bristol Robotics Laboratory, told Digital Trends. “It’s called optical- and vision-based tactile sensing. The reason that’s caught on is because there’s an understanding that the high-resolution information content from the fingertips is crucial for the artificial intelligence [needed] to control these systems.”

Digital Trends first covered Lepora’s work in 2017, describing an early version of his team’s project as being “made up of a webcam that is mounted in a 3D-printed soft fingertip which tracks internal pins, designed to act like the touch receptors in human fingertips.”

Since then, the work has steadily advanced. The team recently published new research revealing the latest step in the project: creating 3D-printed tactile skin that may one day give prosthetic hands or autonomous robots a sense of touch far more in keeping with flesh-and-blood human hands.

The 3D-printed mesh consists of pin-like papillae that mimic the dermal structures found between the outer epidermal and inner dermal layers of human skin. These can produce artificial nerve signals which, when measured, resemble the recordings of real neurons that enable the body’s mechanoreceptors to identify the shape and pressure of items or surfaces when touched.

“When we did this comparison of the signals coming off our artificial fingertips with the real data, we found a very similar match between the two datasets, with the same kind of hills and valleys [found on both],” Lepora explained.

Combining this 3D-printed skin receptor information with data taken from tiny embedded cameras might, the team hopes, be the key to unlocking a long-term dream in artificial intelligence and robotics: An artificial sense of touch.

All five senses

While not every researcher would necessarily agree, perhaps the broadest fundamental aim of AI is to replicate human intelligence (or, at least, the ability to carry out all the tasks that humans are capable of) inside a computer. That means figuring out ways to recreate the five senses – sight, hearing, smell, taste, and touch — in software form. Only then can potential tests of Artificial General Intelligence, such as the proposed “Coffee Test” (a truly intelligent robot should be capable of walking into a house, and sourcing the necessary ingredients and components needed to make a cup of coffee), be achieved.

To date, image and audio recognition have received plenty of attention and seen plenty of progress. Less attention, but still some, has been paid to smell and taste. AI-equipped smart sensors can identify hundreds of different smells in a database through the development of a “digital nose.” Digital taste testers, able to give objective measures with regard to flavor, are also the subject of investigation. But touch remains tantalizingly out of reach.

“We’re more consciously aware of areas like vision,” said Lepora, explaining why focus has frequently been elsewhere for researchers. “Because of that, we ascribe more importance to it in terms of what we do every day. But when it comes to touch, most of the time we’re not even aware we’re using it. And certainly not that it’s as important as it is. However, if you take away your sense of touch, your hands would be totally useless. You couldn’t do anything with them.”

This isn’t to say that robots have steered clear of interacting with real-world objects. For more than half a century, industrial robots with limited axes of movement and simple actions such as grab and rotate have been employed on factory assembly lines. In Amazon fulfillment centers, robots play a crucial part in making one-day delivery possible. Thanks to a 2012 acquisition of robotics company Kiva, Amazon warehouses feature armies of boxy robots, similar to large Roombas, that shuffle shelves of products around and bring them to the human “pickers” who select the right items.

However, while both of these processes greatly cut down on the time it would take humans to complete these tasks unassisted, these robots perform only a limited range of functions – leaving humans to carry out much of the precision work.

There’s a good reason for this: Although dexterous handling is something most humans take for granted, it’s something that’s extraordinarily difficult for machines. Human touch is extremely nuanced. The skin has a highly complex mechanical structure, with thousands of nerve endings in the fingertips alone, allowing extremely high-resolution sensitivity to fine detail and pressure. With our hands we can feel vibrations, heat, shape, friction, and texture – down to submillimeter or even micron-level imperfections. (For a simple, low-resolution vision of how difficult life is with limited touch capabilities, see how smoothly you can get through a single day while wearing thick gloves. Chances are that you’re tearing them off long before midmorning!)

Sensory feedback

“The thing that gives humans that flexibility and dexterity is the sensory feedback that we get,” Lepora said. “As we’re doing a task, we get sensory feedback from the environment. For dexterity, when we’re using our hands, that dominant sensory feedback is our sense of touch. It gives us the high-resolution, high-information content, sensations, and information about our environment to guide our actions.”

Cracking this problem will take advances in both hardware and software: More flexible, dexterous robot grippers with superior abilities to recognize what they’re touching and behave accordingly. Smaller, cheaper components will help. For example, approaches to robot grippers that use cameras to perceive the world date back at least as far as the 1970s, with projects like the University of Edinburgh’s pioneering Freddy robot. However, it’s only very recently that cameras have gotten tiny enough that they can conceivably fit into a piece of hardware the size of a human fingertip. “Five years ago, the smallest camera you could buy was maybe a couple of centimeters across,” Lepora said. “Now you can buy cameras that are [just a couple of] millimeters.”

There’s still much work to be done before innovations like sensing soft fingertips can be incorporated into robots to give them tactile sensing abilities. But when this happens, it will be a game-changer – whether for building robots that are able to carry out a greater number of end-to-end tasks in the workplace (think an entirely automated Amazon warehouse) or even act in “high-touch” jobs like performing caregiving roles.

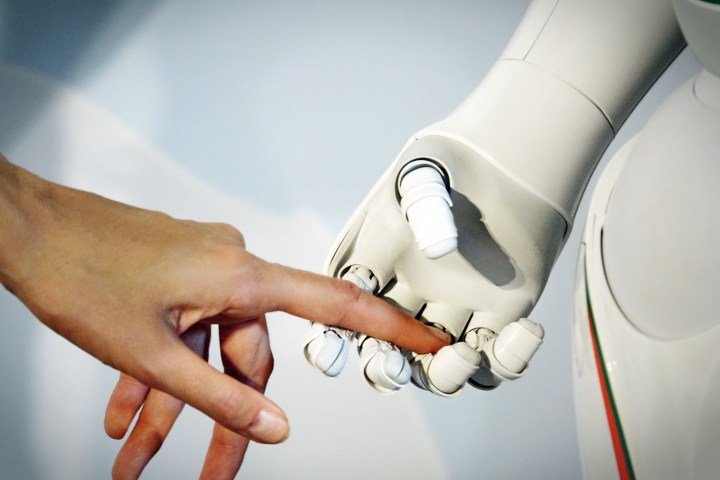

As robots become more tightly integrated with life as we know it, the ability to interact safely with those around them will become more important. Ever since 1979, when a Michigan factory worker named Robert Williams became the first person in history killed by a robot, robots have frequently been separated from humans as a safety precaution. By giving them the ability to safely touch, we could begin breaking down this barrier.

The power of touch

There’s evidence to suggest that, by doing so, robots may enhance their acceptance by humans. Living creatures, both human and otherwise, touch each other as a means of social communication – and, no, not just in a sexual manner. Infant monkeys that are deprived of tactile contact with a mother figure can become stressed and ill-nourished. In humans, a pat on the back makes us feel good. Tickling makes us laugh. A brief hand-to-hand touch from a librarian can result in more favorable reviews of a library, and similar “simple” touches can make us tip more in a restaurant, spend more money in a restaurant, or rate a “toucher” as more attractive.

One study of the subject, a 2009 paper titled “The skin as a social organ,” notes that: “In general, social neuroscience research tends to focus on visual and auditory channels as routes for social information. However, because the skin is the site of events and processes crucial to the way we think about, feel about, and interact with one another, touch can mediate social perceptions in various ways.” Would touch from a robot elicit positive feelings from us, making us feel more fondly toward machines or otherwise reassure us? It’s entirely possible.

One study of 56 people interacting with a robotic nurse found that participants reported a generally favorable subjective response to robot-initiated touch, whether this was for cleaning their skin or providing comfort. Another, more recent piece of research, titled “The Persuasive Power of Robot Touch,” explored this topic also.

“[Previous research has shown] that people treat computers politely, a behavior that at first glance seems unreasonable toward computers,” Laura Kunold, assistant professor in the faculty of Psychology in the Human-Centered Design of Socio-Digital Systems at Germany’s Ruhr University Bochum, told Digital Trends. “Since robots have physical bodies, I wondered if positive effects such as positive emotional states or compliance, which are known from interpersonal touch research, could also be elicited by touch from a robot.” She noted: “Humans — students in our work — are generally open to nonfunctional touch gestures from a robot. They were overall amused and described the gesture as pleasant and non-injurious.”

As robot interactions become more commonplace, touch is likely going to be an important aspect of their social acceptance. As George Eliot writes (not, it should be said, specifically about robots) in Middlemarch, “who shall measure the subtlety of those touches which convey the quality of soul as well as body?”

Robots are getting more capable all the time. Several years ago, researchers at the Massachusetts Institute of Technology built a soft robot delicate enough to capture and then release a live fish as it swims in a tank. Fruit- and vegetable-picking robots can also identify and then pick delicate produce like tomatoes without squashing them into passata. Hopefully, they’ll soon be reliable enough to do the same thing with human hands.

Thanks to work like that being carried out by researchers at Bristol Robotics Laboratory, they’re getting closer all the time.
