“We are trying to, if you like, invent a completely new way of designing robots that doesn’t require humans to actually do the designing,” said Alan Winfield. “We’re developing the machine or robot equivalent of artificial selection in the way that farmers have been doing for not just centuries, but for millennia … What we’re interested in is breeding robots. I mean that literally.”

Winfield, who has been working with software and robotic systems since the early 1980s, is a professor of Cognitive Robotics in the Bristol Robotics Lab at the University of the West of England (UWE). He’s also one of the brains behind the Autonomous Robot Evolution (ARE) project, a multiyear effort carried out by UWE, the University of York, Edinburgh Napier University, the University of Sunderland, and the Vrije Universiteit Amsterdam. It will, its creators hope, change the way that robots are designed and built. And it’s all thanks to borrowing a page from evolutionary biology.

The concept behind ARE is, at least hypothetically, simple. How many science fiction movies can you think of where a group of intrepid explorers land on a planet and, despite their best attempts at planning, find themselves entirely unprepared for whatever they encounter? This is the reality for any of the inhospitable scenarios in which we might want to send robots, especially when those places could be tens of millions of miles away, as is the case for the exploration and possible habitation of other planets. Currently, robots like the Mars rovers are built on Earth, according to our expectations of what they will find when they arrive. This is the approach roboticists take because, well, there’s no other option available.

But what if it was possible to deploy a miniature factory of sorts — consisting of special software, 3D printers, robot arms, and other assembly equipment — that was able to manufacture new kinds of custom robots based on whatever conditions it found upon landing? These robots could be honed according to both environmental factors and the tasks required of them. What’s more, using a combination of real-world and computational evolution, successive generations of these robots could be made even better at these challenges. That’s what the Autonomous Robot Evolution team is working on.

“The idea is that what you land on the planet is not a bunch of robots, it’s actually a bunch of RoboFabs,” Winfield told Digital Trends, referring to the ARE robot fabricators he and his team of investigators are building. “The robots that are then produced by the RoboFabs are literally tested in the real planetary environment and, very quickly, you figure out which ones are going to be successful and which ones are not.”

Matt Hale, a postdoc in the Bristol Robotics Lab who is building the RoboFab and designing the process by which it manufactures physical robots, told Digital Trends: “The key feature for me is that a physical robot will be created that wasn’t designed by a person, but instead automatically by the evolutionary algorithm. Furthermore, the behavior of this individual in the physical world will feed back into the evolutionary algorithm, and so help to dictate what robots are produced next.”

Welcome to the EvoSphere

Mimicking evolutionary processes through software is a concept that has been explored at least as far back as the 1940s, the same decade in which ENIAC, a 32-ton colossus that was the world’s first programmable, general-purpose electronic digital computer, was fired up for the first time. In the latter years of that decade, the mathematician John von Neumann suggested that an artificial machine might be built that was able to self-replicate — meaning that it would create copies of itself, which could then create more copies.

Von Neumann’s concept, which predated the formal founding of artificial intelligence as a field by more than half a decade, was revolutionary. It sparked interest in the field that has come to be known as Artificial Life, or ALife, a combination of computer science and biochemistry that attempts to simulate natural life and evolution through the use of computer simulations.

Evolutionary algorithms have shown genuine real-world promise. For example, a genetic algorithm created by former NASA scientist and Google engineer Jason Lohn was used to design satellite components used on actual NASA space missions. “I was fascinated by the power of natural selection,” Lohn told me for my book Thinking Machines. What was shocking about Lohn’s satellite component, which was iterated by the algorithm over many generations, is that it not only worked better than any human design, but was also totally incomprehensible to human engineers. Lohn remembered the component looking like a “bent paper clip.”

This is what the ARE team is excited about — that the robots that can be created using this evolutionary process could turn out to be optimized in a way no human creator could ever dream of. “Even when we know the environment perfectly well, artificial evolution can come up with solutions that are so novel that no human would have thought of them,” Winfield said.

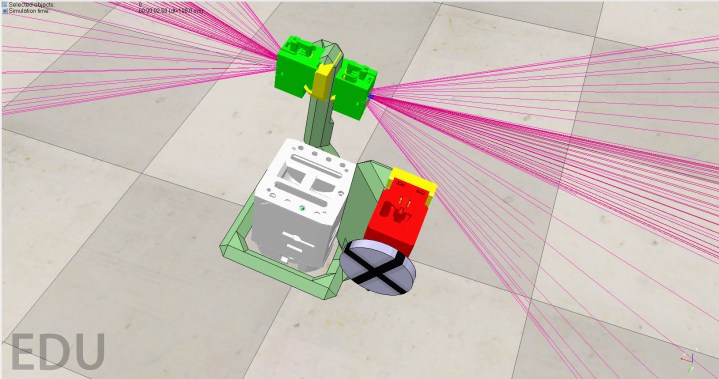

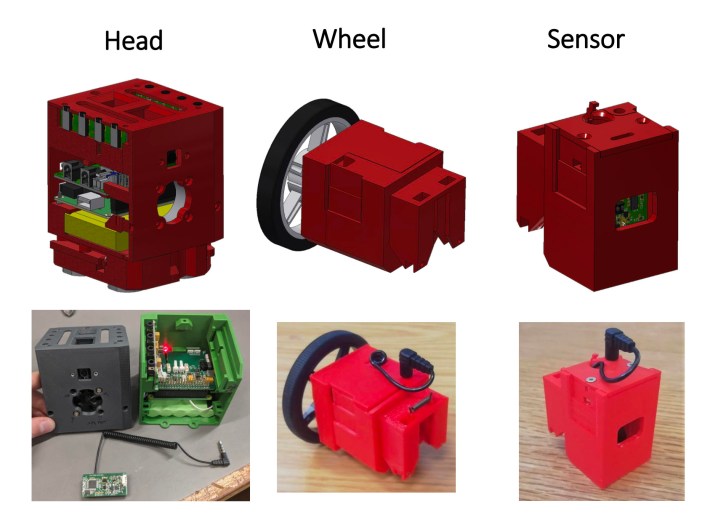

There are two main parts to the ARE project’s “EvoSphere.” The software aspect is called the Ecosystem Manager. Winfield said that it is responsible for determining “which robots get to be mated.” This mating process uses evolutionary algorithms to iterate new generations of robots incredibly quickly. The software process filters out any robots that might be obviously unviable, either due to manufacturing challenges or obviously flawed designs, such as a robot that appears inside out. “Child” robots learn in a controlled virtual environment where success will be rewarded. The most successful then have their genetic code made available for reproduction.
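To make the mating-and-filtering idea concrete, here is a minimal sketch of a generic evolutionary loop in Python. It is not the ARE team’s Ecosystem Manager: the genome layout, the viability rule, the toy fitness function, and every name in it are assumptions made purely for illustration.

```python
import random

# All constants and function names below are hypothetical, for illustration only.
GENOME_LENGTH = 8    # number of design parameters per robot
POPULATION = 20      # robots per generation
GENERATIONS = 30     # how many rounds of selection and mating to run


def random_genome(rng):
    """A robot 'design' is just a list of numbers between 0 and 1."""
    return [rng.random() for _ in range(GENOME_LENGTH)]


def is_viable(genome):
    """Stand-in for the manufacturability filter: reject designs whose
    first gene (imagine it as body size) is too small to build."""
    return genome[0] > 0.1


def simulated_fitness(genome):
    """Toy score: designs closer to 0.7 on every gene score higher."""
    return -sum((g - 0.7) ** 2 for g in genome)


def mate(a, b, rng, mutation_rate=0.1):
    """One-point crossover followed by occasional small mutations."""
    cut = rng.randrange(1, GENOME_LENGTH)
    child = a[:cut] + b[cut:]
    return [
        min(1.0, max(0.0, g + rng.gauss(0, 0.1))) if rng.random() < mutation_rate else g
        for g in child
    ]


def evolve(seed=0):
    rng = random.Random(seed)
    population = []
    while len(population) < POPULATION:
        genome = random_genome(rng)
        if is_viable(genome):
            population.append(genome)

    history = []  # best score seen at the start of each generation
    for _ in range(GENERATIONS):
        ranked = sorted(population, key=simulated_fitness, reverse=True)
        history.append(simulated_fitness(ranked[0]))
        parents = ranked[: POPULATION // 2]  # the top half gets to mate
        children = []
        while len(children) < POPULATION - len(parents):
            a, b = rng.sample(parents, 2)
            child = mate(a, b, rng)
            if is_viable(child):  # unviable children are discarded
                children.append(child)
        population = parents + children

    return max(population, key=simulated_fitness), history


if __name__ == "__main__":
    best, history = evolve(seed=1)
    print("first generation best:", round(history[0], 4))
    print("final best:", round(simulated_fitness(best), 4))
```

Because the parents survive into the next generation in this sketch, the best score can never get worse from one generation to the next. A real system would score designs on a task such as locomotion rather than on a made-up target value.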

The most promising candidates are passed on to RoboFab to build and test. The RoboFab consists of a 3D printer (one in the current model, three eventually) that prints the skeleton of the robot, before handing it over to the robot arm to attach what Winfield calls “the organs.” These refer to the wheels, CPUs, light sensors, servo motors, and other components that can’t be readily 3D-printed. Finally, the robot arm wires each organ to the main body to complete the robot.

“I won’t get too technical, but there’s a problem with evolution in simulation which we call the reality gap,” Winfield said. “It means that stuff that is evolved exclusively in simulation generally doesn’t work very well when you try and run it in the real world. [The reason for that is] because a simulation is a simplification, it’s an abstraction of the real world. You cannot simulate the real world with 100% fidelity on a limited computing budget.”

Try as you might, it’s tough to simulate the actual dynamics of the real world. For example, locomotion that works in theory may not work in messy reality. Sensors might not provide the kind of clean readings available in simulation, but rather fuzzy approximations of the information.

By combining both software and hardware into a feedback loop, the ARE researchers think they may have taken a big step toward solving this problem. As the physical robots travel around, their successes and failures can be fed back to the Ecosystem Manager software, ensuring that the next generation of robots is even better adapted.
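One simple way to picture that feedback is as a correction applied to simulated scores. The Python sketch below is an assumption-laden toy, not the ARE project’s method: it trusts a real-world measurement wherever one exists and, for designs that have only been simulated, subtracts the average amount by which simulation overestimated the robots that were actually built. The robot names and scores are invented.

```python
from statistics import mean


def reality_gap(sim_scores, real_scores):
    """Average amount by which simulation overestimates real performance,
    computed over the robots that have been tested in both settings."""
    tested = [name for name in real_scores if name in sim_scores]
    if not tested:
        return 0.0
    return mean(sim_scores[name] - real_scores[name] for name in tested)


def combined_fitness(sim_scores, real_scores):
    """Use the real score when available; otherwise discount the simulated one."""
    gap = reality_gap(sim_scores, real_scores)
    return {
        name: real_scores.get(name, sim - gap)
        for name, sim in sim_scores.items()
    }


# Hypothetical scores: three designs simulated, only two built and tested.
sim = {"walker": 0.9, "roller": 0.8, "crawler": 0.6}
real = {"walker": 0.5, "roller": 0.7}

scores = combined_fitness(sim, real)
ranking = sorted(scores, key=scores.get, reverse=True)

if __name__ == "__main__":
    print(ranking)
```

In this made-up example the simulation favors the walker, but once the physical results are fed back the roller comes out on top, which is the kind of reordering a hardware-in-the-loop process is meant to catch.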

The risk of inadvertent replicators

“The big hope is that sometime during the next 12 months or so, we’ll be able to press the start button and see this entire process running automatically,” Winfield said.

This won’t be in space, however. Initially, applications for this research are more likely to focus on inhospitable scenarios on Earth, such as helping to decommission nuclear power plants. Hale said that the ultimate goal of a “fully autonomous system for evolving robots doing a real-world task is several decades away,” although in the meantime, aspects of this project (such as the use of genetic algorithms to, in Winfield’s words, “evolve a heterogeneous population” of robots) will make useful advances closer to home.

As part of the project, the team plans to release its work as open source, so others can build EvoSpheres if they want. “Imagine this as a kind of equivalent of a particle accelerator, except that, instead of studying elementary particles, we’re studying brain-body coevolution and all of the aspects of that,” Winfield said.

As for that timeline of self-replicating robots in space, it’s likely to be long after he retires. Does he foresee a time at which we’ll have colonies of self-replicating space robots? Yes, with caveats. “The fact that you’re sending this system to a planet with a limited supply of electronics, a limited supply of sensors, a limited supply of motors means that the thing cannot run away because those are finite resources,” he said. “Those resources will diminish because parts will fail over time, so in a sense, you’ve got a built-in time limit because of the fact that those components will eventually all fail — including the RoboFabs themselves.”

He was keen to make clear this “safety aspect” of the project, which will, presumably, exist for as long as it’s not possible for robots to harvest materials from their surroundings and use these to 3D-print critical organ components.

“The reason that we prefer the approach that has a centralized bit of hardware is that it’s easy to stop the process, it’s easy to kill the process,” he said. “What we don’t want to end up with is inadvertently creating von Neumann replicators. That would be a very bad idea.”
