Not too long ago, Apple was rumored to be internally working on something called Apple GPT, a chatbot based on the company’s own AI model with the goal of emulating what OpenAI’s ChatGPT does. In the months since, we have seen generative AI products appear everywhere, from Google Pixels and Samsung’s Galaxy S24 phones to upstart Nothing’s Phone 2a.

Meanwhile, all we got from Apple were vague but bold claims. Apple CEO Tim Cook told investors that the company’s generative AI push will “break new ground” when it arrives later this year. However, it appears that Apple may get a helping hand from Google in realizing those ambitions. Or OpenAI might even come to the rescue.

According to Bloomberg, Apple is in talks with Google to license its Gemini AI models for iPhones. That sounds like the same strategy Samsung followed with the Galaxy S24 series, which can run Google’s Gemini Nano model on-device while offering more powerful versions via the cloud.

The terms of the deal haven’t been finalized, and discussions are said to be very much in flux. Apple is also reportedly in talks with OpenAI. For context, OpenAI’s foundation tech, such as the GPT-4 model and Dall-E, is currently available across Microsoft’s suite of products and through standalone apps and services like ChatGPT Plus. It’s an interesting development in Apple’s AI ambitions, and one that has me equally excited and worried.

Apple’s AI journey (so far)

In December 2023, without much fanfare, Apple released MLX, a machine learning framework (along with a set of example models) designed to run on its own silicon. The move could help bring generative AI capabilities to the Mac lineup, much like Qualcomm’s efforts with its Snapdragon X Elite platform.

At the beginning of this year, Apple’s research division published a paper on a generative AI tool named Keyframer, which lets users turn still images into animated content using text prompts. It is built on OpenAI’s GPT-4 model and works with still images supplied as vector graphics.

Apple researchers also published a paper describing an AI tool that edits images based on simple plain-language instructions. The feature resembles the voice-assisted media editing toolkit that Qualcomm touts for its latest flagship Snapdragon chips.

A subsequent Bloomberg report said that Apple has been expanding the team dedicated to exploring generative AI features, with the aim of making these tools available to developers by 2024.

Apple’s first batch of generative AI features is rumored to debut with iOS 18, which is slated for a June reveal. But according to Bloomberg’s latest report, those features are designed to run natively on the device rather than as the cloud-connected generative AI services offered by the likes of ChatGPT, Gemini, or Perplexity.

Last September, The Information reported that Apple has been developing “foundation models” aimed at improving Siri, an effort said to mirror how Google is using Gemini to upgrade Google Assistant.

What could Gemini do on iPhones?

Gemini brings a ton of capabilities to a phone, both when running locally and when connected to the internet. On-device, as on the Google Pixel 8 Pro, it can summarize conversations in the Recorder app even when the phone is offline.

For folks using the Gboard keyboard app, Gemini Nano brings Smart Reply to the table, starting with apps like WhatsApp. In a nutshell, it reads your conversation and suggests context-aware replies. On-device AI also enables offline translation, a feature already shipping on Samsung’s Gemini-ready Galaxy S24 series phones.

With the Gemini app installed, a phone can currently accomplish the following tasks:

- Just like Google Assistant or ChatGPT, you can engage in natural language conversations with Gemini and get help with writing, coming up with ideas, and more.

- Quickly summarize the information in your emails or files after activating the Workspace extension. Information can be summarized in formats like lists, charts, and tables.

- Generate images from text prompts, à la OpenAI’s Dall-E engine.

- Get help using your camera in new ways. In the Gemini app, point the camera at a scene and ask the AI for information about objects in the frame.

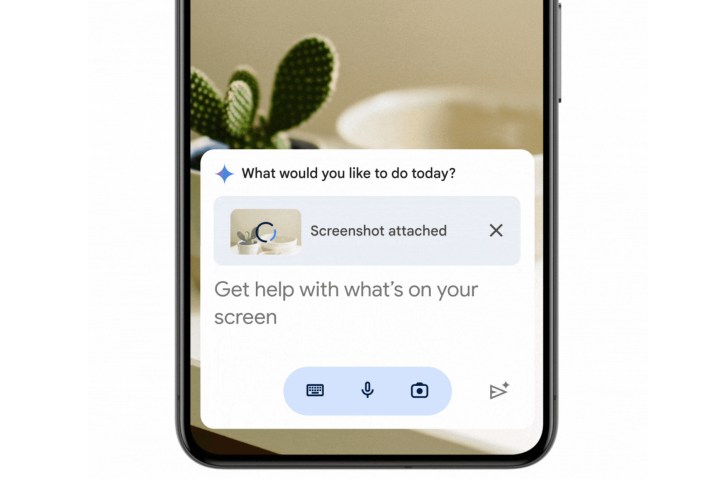

- Understand what’s on your screen. Summon Gemini with a “Hey Google” command to get the job done. For example, it can summarize the article you are currently reading.

- Use Google Maps and Google Flights to plan trips and even create customized routines.

How an Apple-Google AI deal might play out

As mentioned above, Samsung worked closely with Google to get the Gemini Nano AI model running on its flagship phones. But Gemini is not limited to flagships. In February this year, MediaTek announced that its mid-range Dimensity 8300 chip is now optimized for Google Gemini, alongside the flagship Dimensity 9300.

Doing something similar for iPhones shouldn’t be much of a hassle. If an on-device licensing deal doesn’t pan out, Apple could always take the app route. For now, it’s unclear which strategy Apple will pursue, assuming a deal goes through in the first place.

The bigger question is whether a Gemini licensing deal will meaningfully change how users interact with their iPhones. And more importantly, will Gemini bring any change to Siri? To put it bluntly, Siri still has a lot of ground to cover before it can catch up with Google Assistant.

But then, even Google hasn’t quite figured out whether Gemini should coexist with Google Assistant or replace it entirely. Right now, when you install Gemini on an Android phone, it replaces Google Assistant. Or, at least, it tries to.

Your phone still relies on Google Assistant for a wide range of mundane yet meaningful tasks, like making a call, setting an alarm, sending a message, controlling smart home devices, and creating calendar entries. Similarly, for navigation, voice typing in Gboard, and Android Auto, Google Assistant is still the trusty AI, not Gemini.

Moreover, Google Assistant is still the go-to AI companion on smart displays and Wear OS smartwatches. Considering how tightly Apple interweaves its software across its hardware ecosystem, especially between iPhones and the Apple Watch, a piecemeal approach in which Gemini, Google Assistant, and/or Siri each handle only a share of the responsibilities would create a lot of confusion for the average user.

Alternatively, Apple could work closely with Google and create exclusive integrations, tying Gemini to Apple ecosystem features such as Siri, Mail, Notes, Safari, Calendar, Health, and more. However, given Gemini’s current data storage policies, we are not holding our breath for such a tight system-level integration.

The risks of Gemini for Apple

Of course, Apple missed the first wave of generative AI on smartphones, and if reports are to be believed, the company is frantically working to catch up. But a Gemini licensing deal could also mean we never see Apple’s own generative AI work, developed under the project codenamed “Ajax.” Or we might only see it in a diluted form while Gemini does the AI heavy lifting on iPhones.

But Gemini is not without its faults. In fact, it has fumbled more spectacularly than any other mainstream generative AI tool. A few weeks ago, users noticed that Gemini was producing grossly inaccurate images, mishandling skin tone and ethnicity and getting historical details wrong.

The controversy escalated to the point that Google paused Gemini’s text-to-image generation. “To be clear, that’s completely unacceptable, and we got it wrong,” Google CEO Sundar Pichai wrote in an internal memo reported by NPR.

Meanwhile, in India, Gemini became mired in another controversy when its remarks about Prime Minister Narendra Modi were deemed derogatory and went viral on social media. “To simply then say ‘… sorry, it was untested’ is not consistent with our expectations of compliance with the law,” India’s Union Minister Rajeev Chandrasekhar warned (via NDTV).

The controversy once again thrust AI regulation into the spotlight, and the Indian government issued an advisory requiring major AI companies to get explicit approval before releasing tools like Gemini to the public.

For a company as cautious about government regulation as Apple, licensing Gemini for hundreds of millions of devices worldwide is no small risk, especially when Google itself warns that “Gemini will make mistakes” and says you should always double-check for inaccuracies.
