“We believe there’s a real opportunity to develop and use a spectral imager in a smartphone. Despite all the progress which has been made with different cameras and the computing power of a smartphone, none can really identify the true color of a picture.”

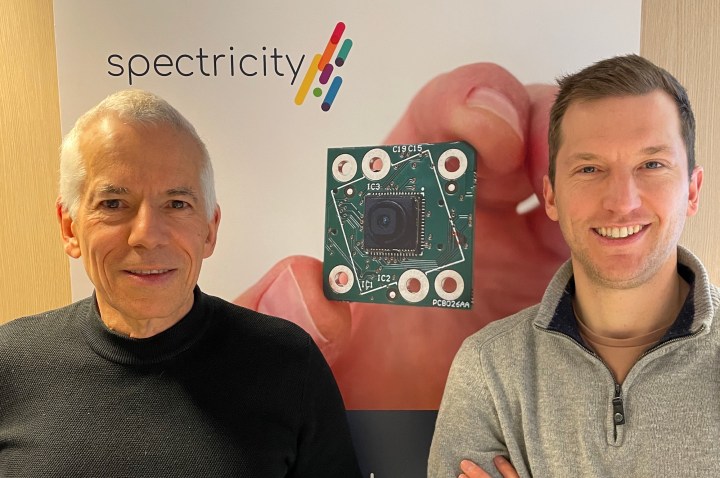

This is how Spectricity CEO Vincent Mouret described the company’s mission to Digital Trends in a recent interview, as well as the reason why it’s making a miniaturized spectral image sensor that’s ready for use in a smartphone. But what exactly is a spectral sensor, and how does it work? It turns out, it can do a whole lot more than just capture pretty colors.

Have you ever seen this color?

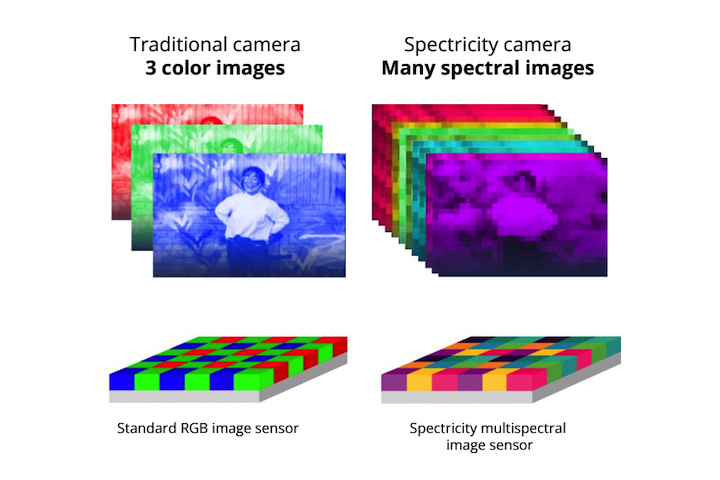

Before we get to all that, let’s talk about what Spectricity’s S1 multispectral image sensor does for cameras. Most phone cameras use three-color RGB (red, green, and blue) sensors, but the Spectricity S1 sensor captures many more bands of light across the visible and near-infrared spectrum to reproduce more natural, more consistent colors, along with considerably improved white balance.

“A standard camera integrated into a smartphone has an RGB sensor that sees red, green, and blue,” Mouret said. “We add filters to create up to 16 different images with different colors, different wavelengths of light, light coming from different sources, and the reflected light coming from the object of the scene. You can identify many different properties thanks to these different images compared to a standard RGB.”

What this means is that, regardless of the lighting, images taken by a phone with the S1 sensor should have more consistent colors, as you can see in the example image below. During our conversation, I saw a live demonstration that replicated the color reproduction and consistency seen in the example, but it’s worth noting the sensor was controlled by a PC, not a smartphone.

“You will see with Xiaomi, Samsung, Apple, and Huawei cameras the colors are very different in different lighting conditions,” Mouret said. “In the same scene taken with our spectral imager, there’s slight differences, but minor ones. The colors out of images are the colors seen by our naked eyes. You can see all the smartphones produce very different colors.”

This color accuracy will also improve the ability of smartphone cameras to better reproduce different skin tones. In another example image, the S1 sensor appeared to show considerable improvements here too.

“You can see the skin tones are totally different depending on the lighting condition,” Mouret pointed out. “The solution is to use a spectral imager to analyze the lighting conditions, to really give the right tone. This is the only way. You can put a lot of AI behind it, but it’s not enough. You need to have some additional hardware.”
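Mouret’s argument is that software alone has to guess the lighting, while extra hardware can measure it. Spectricity hasn’t published how the S1 feeds its data into the camera pipeline, so the Python sketch below only illustrates the general idea with a textbook technique, von Kries-style white balance, which scales each color channel by the inverse of the light source’s color. It compares an illuminant guessed from the RGB image alone (the classic “gray-world” assumption) with a hypothetical measured value standing in for what a spectral sensor might report. The function names and all numbers are made up for illustration.

```python
# Illustrative sketch only: textbook von Kries white balance, NOT Spectricity's pipeline.
# Pixels are linear (R, G, B) tuples; all values below are hypothetical.

def gray_world_illuminant(pixels):
    """Guess the illuminant as the average color of the scene (gray-world assumption)."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def von_kries_correct(pixels, illuminant):
    """Scale each channel so the given illuminant maps to a neutral gray."""
    r, g, b = illuminant
    # Gains are normalized to green, so the green channel is left unchanged.
    gains = (g / r, 1.0, g / b)
    return [tuple(p[c] * gains[c] for c in range(3)) for p in pixels]


# A white card and a large red object, both lit by warm (orange-ish) light.
warm_light = (1.2, 1.0, 0.7)      # hypothetical "measured" illuminant
white_card = warm_light           # a white surface reflects the light as-is
red_object = (0.96, 0.3, 0.14)    # reflectance (0.8, 0.3, 0.2) under warm_light
scene = [white_card, red_object, red_object, red_object]

# Software-only guess: the red object skews the average, so the estimate is off.
guessed = gray_world_illuminant(scene)
print("gray-world white card:", [round(v, 2) for v in von_kries_correct(scene, guessed)[0]])

# Measured illuminant: the white card comes out neutral.
print("measured white card:  ", [round(v, 2) for v in von_kries_correct(scene, warm_light)[0]])
```

With the measured light, the white card comes out at roughly (1, 1, 1); with the gray-world guess, it keeps a strong blue-green cast because the red object dominates the average. Modern phone software uses far more sophisticated estimators than gray-world, but the toy case shows why a direct measurement of the light source is attractive.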

Unfortunately, Mouret didn’t have a Google Pixel phone on hand to compare the S1 sensor with Google’s Real Tone computational technology, which promises to do something similar, only by using software enhancements.

Is it a sensor, or a camera?

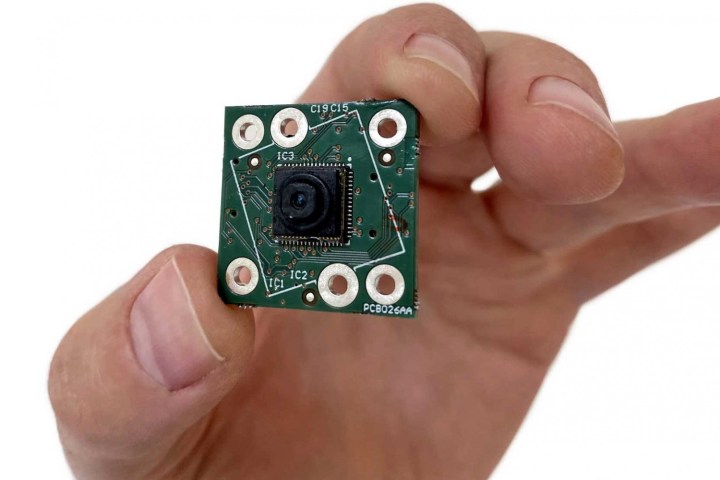

The benefits of a spectral image sensor like the S1 seem clear, and who doesn’t want more effective white balance and natural, consistent colors in their photographs? But the way Mouret described the S1’s abilities made it sound more like a camera than a sensor. Which is it? Can it really fit inside a smartphone? If so, what modifications will be needed?

Spectricity application engineer Michael Jacobs, who ran the demo I watched, explained what the S1 actually is.

“It’s really a companion sensor, very similar to a depth sensor or a 3D sensor used with an RGB camera,” he said.

However, it does technically take photos, just not ones you’d want to use individually, as Mouret explained:

“Our image sensor has a VGA resolution, 800 x 600 pixels. You need to combine this smaller spectral image with an RGB image. The module itself is really very small. It has been designed to be integrated in a smartphone. So it’s 5mm by 5mm by 6mm,” Mouret told me, before saying more about how the sensor is built. “It’s not easy, but it’s nothing very sophisticated, I would say,” he explained, saying the module is built using the same methods as regular CMOS sensors. “In terms of overall cost, the complete module will be the same cost as a high-volume standard camera.”
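Mouret says the S1’s low-resolution spectral image has to be combined with the phone’s main RGB image. Spectricity hasn’t detailed how that fusion works, so what follows is only a hypothetical Python sketch of one simple approach: derive per-region white-balance gains from the spectral data (represented here by a ready-made grid of gains), stretch that grid to the RGB image’s resolution with nearest-neighbor upsampling, and multiply each pixel by its gains. The function names and the toy 2 x 2 and 4 x 4 sizes are illustrative assumptions.

```python
# Hypothetical sketch: apply a coarse grid of per-region white-balance gains
# (e.g. derived from a low-resolution spectral image) to a higher-resolution RGB image.
# Not Spectricity's method; names and sizes are illustrative.

def upsample_nearest(grid, out_h, out_w):
    """Nearest-neighbor resize of a 2D grid (list of rows) to out_h x out_w."""
    in_h, in_w = len(grid), len(grid[0])
    return [
        [grid[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def apply_gain_map(rgb, gains):
    """Multiply each RGB pixel by the (R, G, B) gains of the region it falls in."""
    h, w = len(rgb), len(rgb[0])
    up = upsample_nearest(gains, h, w)
    return [
        [tuple(px[c] * up[y][x][c] for c in range(3)) for x, px in enumerate(row)]
        for y, row in enumerate(rgb)
    ]


# Toy data: a 2 x 2 gain grid (one entry per region) and a 4 x 4 mid-gray image.
gains = [
    [(1.0, 1.0, 1.0), (2.0, 1.0, 0.5)],  # top-left neutral, top-right boosts red, cuts blue
    [(0.5, 1.0, 2.0), (1.0, 1.0, 1.0)],  # bottom-left cuts red, boosts blue; bottom-right neutral
]
image = [[(0.5, 0.5, 0.5)] * 4 for _ in range(4)]

corrected = apply_gain_map(image, gains)
print(corrected[0][3])  # top-right region -> (1.0, 0.5, 0.25)
```

A real pipeline would also need to align the two cameras’ slightly different viewpoints and would likely interpolate the gains smoothly rather than in blocks; this sketch skips both.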

As it stands, the sensor integrates with the standard Image Signal Processor (ISP) found in smartphone processors from Qualcomm and MediaTek, but it requires additional software to operate.

The S1 multispectral image sensor isn’t a one-trick pony. It has an intriguing second function: it can recognize and analyze skin biomarkers, which means it can tell whether it’s “seeing” a real person. That could be used for security (for example, detecting when someone is wearing a mask to conceal their identity), for skin care, and, when combined with AI and other software, even for health applications. It could also be used to enhance Portrait Mode photos.

When will it be used in a phone?

Manufacturers have been working to improve color reproduction on phones for a while, going back to the LG G5 in 2016, which used a dedicated color spectrum sensor alongside its laser autofocus sensor to improve color performance. Huawei took a different approach and dropped the standard RGB sensor on the P30 Pro in favor of an RYYB sensor, in an effort to better reproduce colors and improve low-light performance. Olympus and Imec have also experimented with near-infrared RGB sensors for different applications.

The Spectricity S1 sensor is a world first and takes yet another direction from these examples. Based on the demo and example images, it’s showing considerable promise. But when should we expect to see it in a phone?

“In the beginning, it will be introduced on high-end phones,” Mouret said. “It will be small volume in 2024, a higher volume in 2025, and then starting in 2026, it will be more widespread.”

Mouret also expects the first phones with the S1 to come from Chinese manufacturers rather than from companies like Samsung and Apple, though he believes that will change. In the CES 2023 press release for the S1 sensor, he didn’t hold back on his expectations, saying:

“We expect the first smartphone models with the S1 to be released in 2024, and we expect all smartphones to include our technology within the coming years.”

Yes, all smartphones. It’s a big target, but if the performance we saw in the demo is what we will eventually see from a phone camera with the S1 alongside it, few manufacturers will want to be left behind.
