The aggressiveness with which artificial intelligence (AI) moved from the realm of theoretical power into real-world consumer-ready products is astonishing. For several years now, and up until a couple of months ago when OpenAI’s ChatGPT broke onto the scene, companies from the titans of Microsoft and Google down to myriad startups espoused the benefits of AI with little practical application of the tech to back it up. Everyone knew AI was a thing, but most didn’t actually utilize it.

Just a handful of weeks after announcing an investment in OpenAI, Microsoft launched a publicly-accessible beta version of its Bing search engine and Edge browser powered by the same technology that has made ChatGPT the talk of the town. ChatGPT itself has been a fun thing to play with, but launching something far more powerful and fully integrated into consumer products like Bing and Edge is an entirely new level of exposure for this tech. The significance of this step cannot be overstated.

ChatGPT felt like a toy; having the same AI power applied to a constantly-updated search database changes the game.

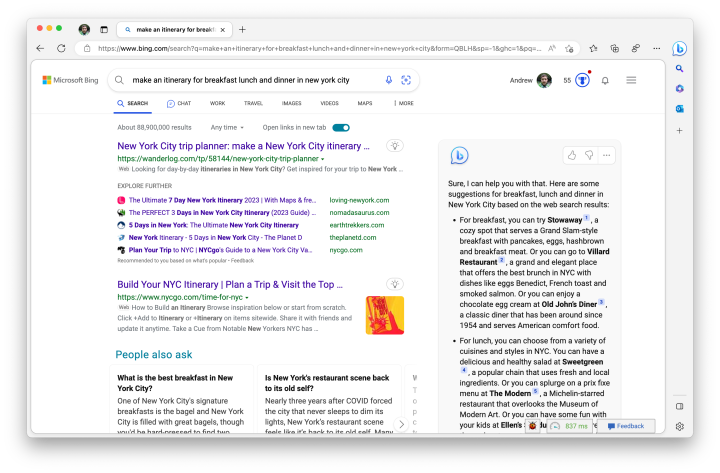

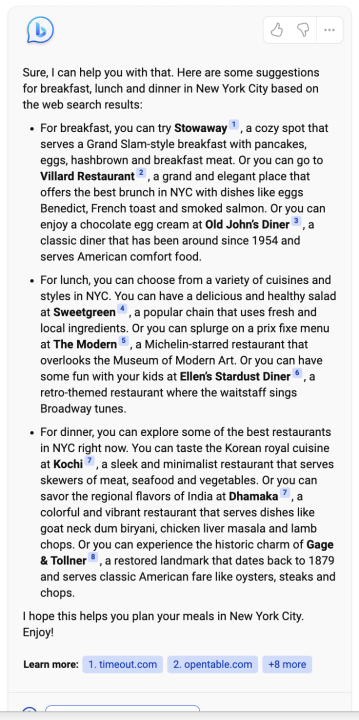

Microsoft was kind enough to provide me with complete access to the new AI “copilot” in Bing. It only takes a few minutes of real-world use to understand why Microsoft (and seemingly every other tech company) is excited about AI. Asking the new Bing open-ended questions about planning a vacation, setting up a week of meal plans, or starting research into buying a new TV, and having the AI guide you to something useful, is powerful. Anytime you have a question that would normally require pulling information from multiple sources, you’ll immediately streamline the process and save time using the new Bing.

Let AI do the work for you

Not everyone wants to show up to Google or Bing ready to roll up their sleeves and get into a multi-hour research session with lots of open tabs, bookmarks, and copious amounts of reading. Sometimes you just want to explore a bit, and have the information delivered to you — AI handles that beautifully. Ask one multifaceted question and it pulls the information from across the internet, aggregates it, and serves it to you in one text box. If it’s not quite right, you can ask follow-up questions contextually and have it generate more finely-tuned results.

The biggest thing Microsoft brings to the table that was unobtainable through ChatGPT is recent information — and that differentiator truly changes the game. Whereas ChatGPT draws on a fixed data set and references a snapshot of the internet that is a couple of years old, Bing is pulling in data constantly and always has up-to-date information. It’s a search engine that’s crawling the entire internet, after all. I can get restaurant recommendations for a new place that opened last week, information about the new OnePlus 11 that launched yesterday, and up-to-date pricing on flights and hotels. This alone takes this experience from “fun toy” to “truly useful.”

Not only does that make the answers you get out of Bing’s AI copilot more relevant, but it also gives the system vastly more information to work with and build off of. When AI is constantly being served new data, it can (theoretically) improve at a faster rate. And that gets even better as it learns how people interact with it.

But at this point, conversational AI is far from applicable to every kind of search. Or frankly, most searches. And when it doesn’t work, it really falls flat.

This beats ChatGPT … but still has its weaknesses

Shortcomings we all experience when using ChatGPT aren’t so easily dismissed or forgiven when a company the size of Microsoft puts its name behind it and says it’s ready for everyone. And it took but a few minutes of testing the new AI-powered Bing (admittedly, an early beta version) to find similar shortcomings.

It’s counterintuitive, but the Bing copilot works better the less specific your questions are.

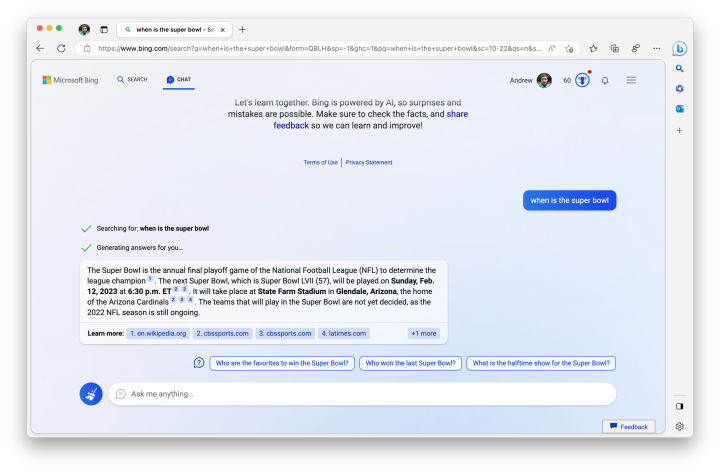

Interestingly, the more specific you are with your search, or the more certain you are about the answer you expect, the worse the copilot’s response. Counterintuitively, it is more capable of serving you a useful response when you give it less information to work with and leave the question open-ended for the AI to fill in the gaps.

When you ask a very specific question, you often get a very flowery answer with tons of unnecessary context and background. The answer you want is typically available within those few paragraphs and/or several bullet points, and in some cases, it’s set in bold, but it’s easy to see when the AI is trying to cover for its lack of “knowledge” by writing out a verbose response. That extra fluff is often not accurate, either, as the AI pulls from a wider set of sources that are more likely to be out of date or unrelated.

By Microsoft’s own admission, over half of web search queries are “navigational” or “informational” — meaning I have a specific question about how to get from A to B or a knowledge-base query that’s available in a structured data format. When I ask what hours a restaurant is open, or how many square miles the island of Manhattan is, I don’t need a history lesson attached to what is likely a couple-word answer. I just want the answer and to move on.

Knowing when to use AI and when to keep it simple

For this half (or more) of searches, the new AI power offers little added value right now. And in many cases, it’s actually a hindrance. Speaking with Jordi Ribas, VP of Bing, I got some much-needed context on how Microsoft is thinking about this integration of AI with its “traditional” search product, and how they are managing surfacing powerful new technology to customers when they know it isn’t applicable in every scenario and has room for improvement.

Sometimes I just want a simple answer, and the AI wants to tell me a story instead.

This debate was strong within the Bing team, Ribas said; there was a strong contingent of people who felt that moving the entire Bing interface into a “chat” window was the only way to go, while others were bearish and preferred that it be a smaller part of the experience. The result, in a very Microsoft approach, is somewhere in the middle.

Getting my hands on a dev build of Edge with the copilot built in to use outside of a demo environment, I started to understand what they’re going for. The standard Bing interface starts off the same, outside of the fact that you now have a bigger text box that can take up to 1,000 characters. If the AI can generate a detailed response to your query, you get a panel on the right side with that response. It doesn’t take over the experience; it’s complementary to the standard search results on the left. If you want to dive in with more questions, you can switch to a full-screen all-chat experience and have a conversation of sorts with the AI. It’s simple to scroll up to the chat and back down to the regular results interchangeably.

And in some cases, at least in this early version, you may not get any AI responses at all — just a standard set of blue links. Microsoft is honest about the fact that these AI responses are very computationally intensive and expensive to return (servers aren’t free!) compared to a “regular” search result, so when Bing is confident it can give a satisfactory response using the regular search model rather than the new “Prometheus” (AI) model, it’ll do that. Though in my experience it doesn’t fall back to this standard model nearly enough, as I noted above.

It’s clear that what Microsoft has been able to accomplish here is beyond just sprinkling a little AI on top of Bing. But it’s not that far beyond it, and it doesn’t take long to figure out that with this current level of technical sophistication, we don’t want AI in every aspect of our search experience.

The core issue with AI-powered Bing is that the queries that demo very well aren’t typically what you’re doing most of the time you sit down and use a search engine. For a majority of my searches I won’t be utilizing AI, and at this point, the “intelligence” gets in my way just as often as it helps. That takes some of the edge off of the amazement of this announcement.

But I am still incredibly excited about these developments in AI, and even when you recognize that you aren’t going to be using the AI for each search query, the times you do use it can be an extraordinary experience.

This is the future of web search, there’s no doubt about it. It just isn’t necessarily the near future.
