Quantum computing holds great promise for advancing fields such as artificial intelligence, climate forecasting, and more. For now, though, quantum computing remains in its infancy, with a great deal of research but few real-world applications. Most major technology companies are working to advance quantum computing, and Intel, one of the leaders, hopes to use “spin qubits” to help usher the technology into the mainstream.

In its most basic form, a quantum bit (qubit) is the quantum counterpart of the binary bit used in traditional computing. Qubits can be built in several ways; in some systems, for example, information is encoded in the polarization of a photon. In standard computing, bits are always in one of two states, zero or one. Qubits, however, can exist in a combination of both states at the same time. Without digging too much into the details, this phenomenon theoretically allows a quantum computer to perform huge numbers of calculations in parallel and to run much faster than traditional computers at certain tasks.

Most of the industry, Intel included, is working on one specific type of qubit known as the superconducting qubit, but Intel is also exploring an alternative known as the “spin qubit.” Superconducting qubits, as the name implies, are based on superconducting electronic circuits. Spin qubits instead work in silicon and, according to Intel, overcome some of the barriers that have been holding quantum computing back.

This alternative approach takes advantage of the spin of single electrons on a silicon device, which is controlled using microwave pulses. When an electron spins up, it represents a binary value of 1; when it spins down, it represents a binary value of 0. Because these electrons can also exist in a “superposition” state, in which they essentially act as if they are both up and down at the same time, they allow for parallel processing that can churn through more data than a traditional computer.
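To make the idea of superposition a little more concrete, here is a minimal classical toy model in Python. It is an illustration under simplifying assumptions, not a description of how Intel's hardware works: a single spin qubit is represented by two amplitudes (following the convention above, spin down encodes 0 and spin up encodes 1), and measuring it yields 0 or 1 with probabilities given by the squared magnitudes of those amplitudes. The function names are hypothetical. The last helper shows why simulating qubits classically gets hard quickly: the number of amplitudes doubles with every added qubit, which is one simplified intuition behind the “parallel processing” claim.

```python
import math
import random


# Toy model (hypothetical helpers): a spin qubit as two amplitudes,
# where "spin down" encodes 0 and "spin up" encodes 1.
def measurement_probabilities(amp_down: complex, amp_up: complex) -> tuple[float, float]:
    """Return (P(0), P(1)) for a normalized single-qubit state."""
    p0 = abs(amp_down) ** 2
    p1 = abs(amp_up) ** 2
    if not math.isclose(p0 + p1, 1.0, rel_tol=1e-9):
        raise ValueError("amplitudes must be normalized: |a|^2 + |b|^2 = 1")
    return p0, p1


def measure(amp_down: complex, amp_up: complex, rng: random.Random) -> int:
    """Simulate one measurement: the superposition collapses to 0 or 1."""
    p0, _ = measurement_probabilities(amp_down, amp_up)
    return 0 if rng.random() < p0 else 1


def amplitudes_needed(n_qubits: int) -> int:
    """A classical simulation of n qubits must track 2**n amplitudes."""
    return 2 ** n_qubits


if __name__ == "__main__":
    h = 1 / math.sqrt(2)  # equal superposition of spin down and spin up
    print(measurement_probabilities(h, h))  # roughly 50/50

    rng = random.Random(42)
    counts = [0, 0]
    for _ in range(10_000):
        counts[measure(h, h, rng)] += 1
    print(counts)  # close to an even split between 0 and 1

    print(amplitudes_needed(50))  # amplitudes for a 50-qubit state
```

In a real quantum computer, nobody stores these amplitudes in memory; the physical system holds the state itself, which is exactly what makes the technology interesting.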

Spin qubits hold a number of advantages over the superconducting qubit technology that drives most contemporary quantum computing research. Qubits are fragile things, easily disrupted by noise or even unintended observation, and the nature of superconducting qubits means they require larger physical structures and must be kept at extremely cold temperatures.

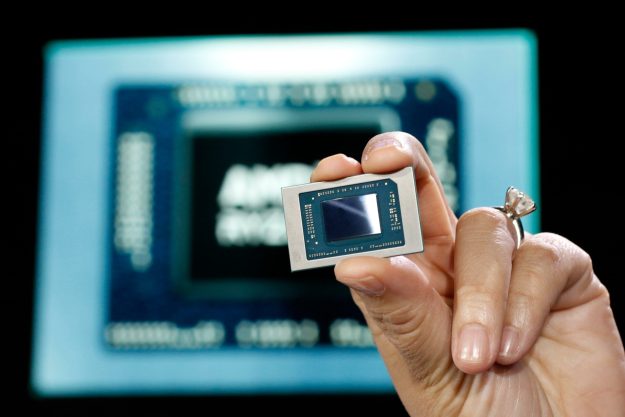

Because they’re based in silicon, though, spin qubits are physically smaller and can be expected to hold their quantum state for longer periods of time. They can also operate at higher temperatures and so don’t require the same level of complexity in system design. And, of course, Intel has tremendous experience in designing and manufacturing silicon devices.

Like quantum computing as a whole, spin qubit technology is in its nascent stages. If Intel can work out the kinks, however, spin qubits could help bring quantum computing to actual commercial applications much sooner than currently anticipated. The company already plans to use its existing fabrication facilities to produce “many wafers per week” of spin qubit test chips, with production expected to begin over the next several months.
