Newegg, the online retailer primarily known for selling PC components, has pushed AI into nearly every part of its platform. The latest area to get the AI treatment? Customer reviews.

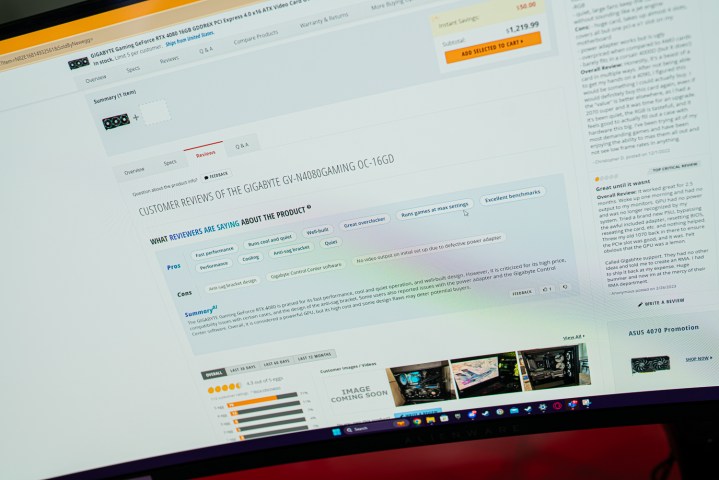

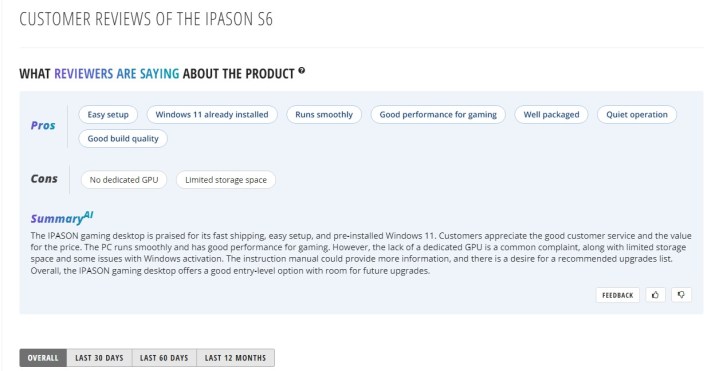

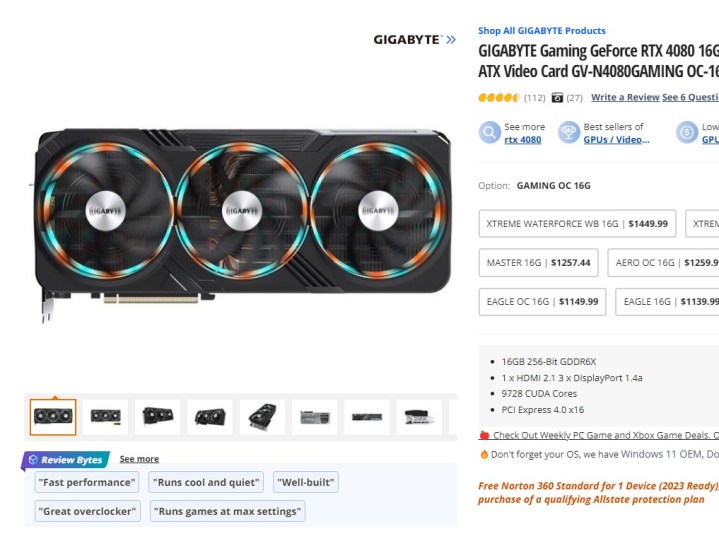

On select products, Newegg is now showing an AI summary of customer reviews. The tool sifts through the pile, including the text of each review and any listed pros and cons, and uses that to generate its own list of pros and cons, along with its own summary. Currently, Newegg is testing the feature on three products: the Gigabyte RTX 4080 Gaming OC, MSI Katana laptop, and Ipason gaming desktop.

I’ve previously covered Newegg’s mishaps with AI via its ChatGPT-driven PC builder. The company confirmed it’s using ChatGPT once again to generate these product review summaries, and they are filled with issues.

Smart tech, dumb summaries

For instance, the MSI Katana summary contradicts itself. It says the laptop is both “recommended for beginners” and for “those seeking a high-performance laptop.” It also lists an “effective cooling system” early on, but “loud fan noise” and “hot running temperatures” later.

Elsewhere, with the Ipason desktop, the summary does a decent job listing the pros and cons of customer reviews. However, it fails to even mention that the 1TB hard drive in the desktop is formatted as three separate hard drives, which was a common complaint among the real customer reviews. Instead, it just says the machine has “limited storage space,” likely because the AI was confused about the formatting woes.

In addition to the summary, Newegg is now showing “review bytes” under the product photo. These are small quotes from the AI-generated review, and critically, the display doesn’t show they were generated by AI.

If you actually follow these links and go to the AI-generated section, there’s a disclaimer you can click on that reads: “Efforts have been made to ensure accuracy, but individual experiences, options, and interpretations may vary and influence the generated content.”

Problems brewing

The AI isn’t writing its own review out of thin air, but it is serving as a replacement for reading customer reviews that may have more nuance. The individual experiences shouldn’t be discounted, either. In the case of Ipason, the AI-generated review is for a product that isn’t sold by Newegg. Things like customer service and support are important for marketplace items, and the AI largely glosses over that area.

Digital Trends asked Newegg whether this feature will roll out broadly across its website or target specific items first, and hasn’t yet received a response. For products like the Gigabyte RTX 4080 Gaming OC, the summary feature works (even if it isn’t as helpful as a full RTX 4080 review). There are still some quirks. For instance, the RTX 4080 summary lists “no video output on initial setup due to defective power adapter” as a con. Care to elaborate? Because that con alone overshadows the overwhelming number of pros these AI-generated summaries list.

My main concern is for marketplace items where the potential for fake reviews is higher. It’s always tough to know if customer reviews are fake or real, even with verified badges. We don’t have any data for Newegg, but recent research by the UK government suggests that upwards of 15% of reviews on Amazon aren’t real. Maybe that’s why Amazon hasn’t released its AI-driven summary tool yet.

Ultimately, customer reviews on the internet aren’t the most reliable way to make buying decisions, and using them to generate an AI summary makes them even less reliable.
