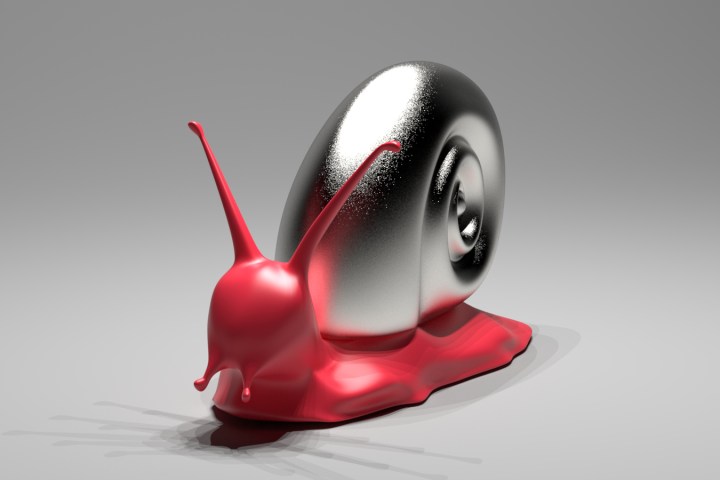

In short, they’ve figured out how to improve the way graphics software can render light as it interacts with extremely small details on the surface of materials. That means you could finally see the metallic glitter in Captain America’s shield, the tiny sparkles in the Batmobile’s paint job, and super-realistic water animations.

As a brief explainer, the tiny flashes of light reflected by a material’s small surface details are called “glints,” and until now, graphics software could only render glints in stills. But according to Professor Ravi Ramamoorthi at the University of California San Diego, his team of researchers has improved the rendering method, enabling software to create those glints 100 times faster than the current method. This will allow glints to be used in actual animations, not just in stills.

Ramamoorthi and his colleagues plan to reveal their rendering method at SIGGRAPH 2016 in Anaheim, California, later this month. He indicated that what drove him and his team to create a new rendering technique was today’s super-high display resolutions. Right now, graphics software assumes that the material surface is smooth, and artists fake metal finishes and metallic car paints by using flat textures. The result can be grainy and noisy.

“There is currently no algorithm that can efficiently render the rough appearance of real specular surfaces,” Ramamoorthi said in a press release. “This is highly unusual in modern computer graphics, where almost any other scene can be rendered given enough computing power.”

The new rendering solution reportedly doesn’t require a massive amount of computational power. The method takes an uneven, detailed surface and breaks each of its pixels down into pieces that are covered in thousands of microfacets, which are light-reflecting points that are smaller than pixels. For each microfacet, a vector perpendicular to the surface of the material at that point is then computed. This vector is known as the point’s “normal,” and it is used to figure out how light actually reflects off the material.
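To make the idea of a per-point normal concrete, here is a minimal Python sketch. It assumes the surface detail is stored as a grid of heights, which is a common way to describe bumpy surfaces but is an assumption here, not something the press release states. The function name `heightfield_normals` and its `spacing` parameter are hypothetical.

```python
import math

def heightfield_normals(height, spacing=1.0):
    """Return a unit normal for every cell of a 2D grid of heights.

    Assumption: the surface is a height field z = h(x, y). The normal is
    then proportional to (-dh/dx, -dh/dy, 1), with slopes estimated by
    finite differences between neighboring cells.
    """
    rows, cols = len(height), len(height[0])
    normals = []
    for y in range(rows):
        row = []
        for x in range(cols):
            # Clamp neighbor indices so border cells use one-sided differences.
            x0, x1 = max(x - 1, 0), min(x + 1, cols - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, rows - 1)
            dhdx = (height[y][x1] - height[y][x0]) / ((x1 - x0) * spacing) if x1 > x0 else 0.0
            dhdy = (height[y1][x] - height[y0][x]) / ((y1 - y0) * spacing) if y1 > y0 else 0.0
            length = math.sqrt(dhdx * dhdx + dhdy * dhdy + 1.0)
            row.append((-dhdx / length, -dhdy / length, 1.0 / length))
        normals.append(row)
    return normals
```

A perfectly flat grid yields normals that all point straight up, while a scratch or bump tilts the normals around it, and those tilted normals are what produce glints.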

According to Ramamoorthi, a microfacet will reflect light back to the virtual camera only if its normal resides “exactly halfway” between the ray projected from the light source and the ray that bounces off the material’s surface toward the camera. The distribution of the collective normals within each patch of microfacets is calculated, and then used to figure out which of the normals are actually in the halfway position.
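The “exactly halfway” condition can be sketched in a few lines of Python. This is an illustration of the standard mirror-reflection rule, not code from the researchers. The function names and the `tolerance_deg` parameter are hypothetical, and a small angular tolerance stands in for “exactly,” since floating-point directions rarely match perfectly.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def half_vector(to_light, to_camera):
    """Direction halfway between the light and camera directions.

    Both inputs point away from the surface. Assumes they are not exactly
    opposite each other, in which case no halfway direction exists.
    """
    l = normalize(to_light)
    c = normalize(to_camera)
    return normalize(tuple(a + b for a, b in zip(l, c)))

def reflects_to_camera(normal, to_light, to_camera, tolerance_deg=1.0):
    """True if a mirror-like microfacet with this normal sends light to the camera."""
    h = half_vector(to_light, to_camera)
    n = normalize(normal)
    # Clamp the dot product so rounding error cannot push acos out of range.
    cos_angle = max(-1.0, min(1.0, sum(a * b for a, b in zip(n, h))))
    return math.degrees(math.acos(cos_angle)) <= tolerance_deg
```

With the light up and to the right at `(1, 0, 1)` and the camera up and to the left at `(-1, 0, 1)`, the halfway direction points straight up, so only microfacets whose normals are very nearly `(0, 0, 1)` would glint in that setup.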

Ultimately, what makes this method faster than the current rendering algorithm is that it works with this distribution instead of calculating how light interacts with each individual microfacet. Ramamoorthi said that it’s able to approximate the normal distribution at each surface location and then compute the amount of net reflected light easily and quickly. In other words, expect to see highly realistic metallic, wooden, and liquid surfaces in more movies and TV shows in the near future.
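The difference between checking every microfacet and querying a distribution can be illustrated with a toy Python sketch. Everything in it is an assumption made for illustration: normals are reduced to 2D slope pairs, a single isotropic Gaussian stands in for whatever model the paper actually fits, and all function names are hypothetical.

```python
import math

def brute_force_fraction(slopes, target, radius):
    """Visit every microfacet and count those whose slope is near the target."""
    tx, ty = target
    hits = sum(1 for sx, sy in slopes
               if (sx - tx) ** 2 + (sy - ty) ** 2 <= radius ** 2)
    return hits / len(slopes)

def fit_isotropic_gaussian(slopes):
    """Summarize a patch of slopes by a mean and one standard deviation.

    Done once per patch; the result can be reused for every light and
    camera direction afterward.
    """
    n = len(slopes)
    mx = sum(s[0] for s in slopes) / n
    my = sum(s[1] for s in slopes) / n
    var = sum((s[0] - mx) ** 2 + (s[1] - my) ** 2 for s in slopes) / (2 * n)
    return (mx, my), math.sqrt(var)

def gaussian_fraction(mean, sigma, target, radius):
    """Estimate the same fraction from the fitted distribution alone.

    Uses density at the target times the area of the small disc around it,
    so the cost does not depend on how many microfacets the patch holds.
    """
    dx = target[0] - mean[0]
    dy = target[1] - mean[1]
    density = math.exp(-(dx * dx + dy * dy) / (2 * sigma ** 2)) / (2 * math.pi * sigma ** 2)
    return density * math.pi * radius ** 2
```

In this sketch, `target` would be the slope of the halfway direction for the current light and camera. The brute-force version rescans every microfacet for each query, while the distribution version does a fixed handful of arithmetic operations, which is the general kind of saving the article describes.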

To check out the full paper, “Position-Normal Distributions for Efficient Rendering of Specular Microstructure,” grab the PDF file here. The other researchers listed on Ramamoorthi’s team are Ling-Qi Yan, from the University of California, Berkeley; Milos Hasan from Autodesk; and Steve Marschner from Cornell University. The images provided with the report were supplied to the press by the Jacobs School of Engineering at the University of California San Diego.

Is that water render just awesome, or what?
