Artificial intelligence is a discipline that, historically, has rewarded big thinkers. James Marshall, professor of computer science at the U.K.’s University of Sheffield, thinks small.

That’s not intended as a slight so much as an accurate description of his work. His startup, Opteran Technologies, has just received $2.8 million to continue pursuing that work. Where others are focused on building A.I. with human-level intelligence, pushing even further into the realms of “artificial general intelligence,” Marshall has his sights set on something a whole lot smaller than the human brain. He wants to build an artificial honeybee brain.

The brain of a honeybee is orders of magnitude smaller and structurally simpler than a human brain. A human brain has, as far as we are aware, on the order of 86 billion neurons and a volume of about 1,274 cubic centimeters. A honeybee brain has roughly 1 million neurons and is about the size of a pinhead.

Reverse engineering a honeybee brain in silicon should be a lot simpler than building an artificial human brain. In fact, the biggest neural networks now have considerably more artificial neurons than the honeybee has real ones. If a large enough count of artificial neurons were all it took to match the intelligence of a real animal, we would already have A.I. with far more general intelligence than a frog. Needless to say, we don’t.

Marshall told Digital Trends that his research interest was originally sparked by hearing about large-scale projects aiming to build a complete computer simulation of the human brain. “My initial response to that was, ‘if you’re going to start building a model of any brain on the planet, why on earth would you start with the most complicated one?’” he said.

Building smarter navigation systems

Honeybees might seem simpler — and, in a very real sense, they are — but reverse engineering a bee brain isn’t about low-hanging fruit with no practical application. Marshall said that bees are “consummate visual navigators, [adept at] long-distance navigation, with very sophisticated learning abilities. They’re much more than the simple kind of reactive automata that people often think insects are. Individually, they’re very clever.”

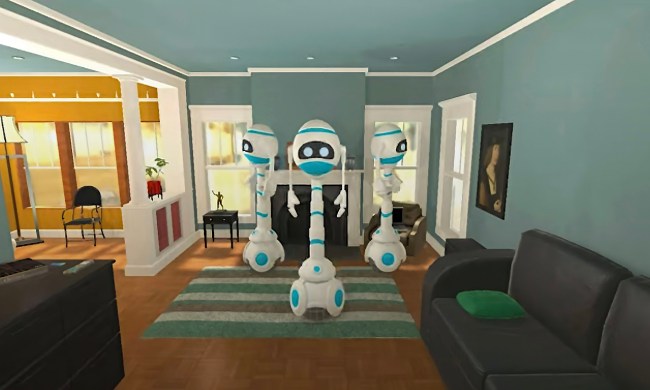

Previous research has suggested that honeybees are able to solve challenges such as the traveling salesman problem (in their case, finding the shortest route between flowers discovered in a random order) in a fraction of the time that it would take the world’s top supercomputers. Building a honeybee brain in silicon could therefore help develop sophisticated navigation tools that, according to David Rajan, CEO of Opteran, could be “lightweight, ultra-low-powered, and orders of magnitude more efficient than the deep learning approaches.” The company’s technology could power future drones, autonomous vehicles, and various robots.
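To see why this kind of route-finding gets hard so quickly, here is a minimal brute-force sketch in Python. It is our own illustration, not Opteran’s method or a model of how bees actually do it: it tries every possible order of visiting a handful of flowers and keeps the shortest round trip from the hive. The `shortest_route` helper and the flower coordinates are hypothetical.

```python
from itertools import permutations
from math import dist


def shortest_route(hive, flowers):
    """Brute-force traveling salesman: check every visiting order and
    return the shortest closed loop that starts and ends at the hive.
    (Hypothetical helper, for illustration only.)"""
    best_order, best_length = None, float("inf")
    for order in permutations(flowers):
        stops = [hive, *order, hive]
        # Total straight-line distance of this loop.
        length = sum(dist(a, b) for a, b in zip(stops, stops[1:]))
        if length < best_length:
            best_order, best_length = order, length
    return best_order, best_length


# Three hypothetical flowers at the corners of a unit square, hive at the fourth.
order, length = shortest_route((0, 0), [(0, 1), (1, 1), (1, 0)])
print(order, length)
```

The catch is the number of orders to check, which grows factorially with the number of flowers: three flowers mean just 6 orders, but ten already mean 3,628,800. That explosion is why real navigation systems, artificial or biological, cannot rely on exhaustive search.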

Current deep-learning methods are inspired by an abstraction of the brain’s visual cortex, its visual recognition center. Opteran’s bee-inspired algorithms, meanwhile, more fully reflect the way the brain actually works. “When you look at a complete brain, it is highly structured,” Marshall said. “You have different brain regions that do different things, that are internally structured in different ways, with well-defined connections between them.”

Rajan, who described the company’s biomimicry-inspired approach to brain algorithms as fundamentally different from current methods, said he doesn’t call it artificial intelligence, but rather “natural intelligence.”

“Having a million neurons and however many synapses isn’t the end of the story; it’s how you connect them together,” said Marshall. “It’s also about the kind of information processing that’s done at the neuron level, because there’s more than one kind of neuron in the real brain, although there’s often only one neuron type in a deep net.”

Causing a buzz

Opteran’s approach to brain technology has several promising elements. According to the company, its high-performance algorithm will use significantly less power than the heavy computer systems behind today’s deep-learning tools. Crucially, its creators promise that no training will be required, making it significantly easier to deploy out of the box, and that it will be better at handling rare, black-swan edge cases. The company also says it is predictable, with transparent rules that give it an edge over the opaque and hard-to-verify approaches A.I. researchers currently use.

Opteran plans to launch its first commercial tools within the next 18 months, including technology for obstacle avoidance, reactive navigation, and autonomous decision-making, as well as Opteran See, a 360-degree camera.

Until then, the claim that this is a more robust approach to building autonomous sensing technologies remains open to question. However, early signs are promising. A recent trial used Opteran’s technology to pilot a small, sub-250-gram drone with complete onboard autonomy, using fewer than 10,000 pixels from a single low-resolution panoramic camera. A drone that thinks like a honeybee? That’s certainly something to keep an eye on.

But how do you know when you’ve created the brain of a honeybee in silicon? After all, as leading neuroscientists are keen to point out, there’s plenty we still don’t know about the brain and therefore cannot yet hope to reverse engineer. Are there milestones in honeybee biomimicry that would show whether an A.I. modeled on a honeybee is doing what its creators claim?

“What we really care about commercially is behavior, the competency of the system,” Marshall said. “As a business, we’re not fixated on saying we’re confident we’ve reproduced the way the honeybee works. [Instead, we want to say] we are confident that we have reproduced a system which is behaviorally robust, and which seems to us to behave as if it was a honeybee acting like a honeybee. This goes back to Alan Turing’s definition of an A.I. test. How do you know when you created A.I.? You can’t really look inside and say, ‘yes, that’s A.I.’ It has to be a behavioral test. That’s what the Imitation Game is; when can you fool a human observer that they’re talking to another human rather than an A.I.?”

A Turing test for bee bots, then? The next couple of years are sounding more interesting all the time. When tomorrow’s robots are powered by a honeybee-inspired algorithm, remember where you heard it first, and why, when it comes to A.I., thinking small isn’t so bad after all.