The most challenging aspects of creating smarter, safer cities can be witnessed at any street corner. That's where, to coin a phrase, the rubber meets the road, and it's also where roughly 20 percent of traffic fatalities occur every year, according to the National Highway Traffic Safety Administration. And if you want to create a smart city, the best way to start is by saving lives.

Cities across the globe are installing technology to gather data in the hopes of saving money, becoming cleaner, reducing traffic, and improving urban life. In Digital Trends’ Smart Cities series, we’ll examine how smart cities deal with everything from energy management, to disaster preparedness, to public safety, and what it all means for you.

Out in Marysville, Ohio, the town is working with the Ohio Department of Transportation and Honda, which has manufacturing and research facilities in Marysville, to figure out exactly how to make intersections safer by making them smarter. The town of 23,912 people just debuted its first smart intersection this month, and the project involves more than just wiring a street corner for pictures and sound: wireless communication systems, computer vision recognition systems, infrastructure control systems, and in-vehicle telematics and alert systems all have to be coordinated.

“Our vision of a smart intersection is one that can have non-connected road users — pedestrians, cyclists — work with those that are connected, like connected cars,” Ted Klaus, vice president of strategic research at Honda R&D Americas, explained in an interview with Digital Trends.

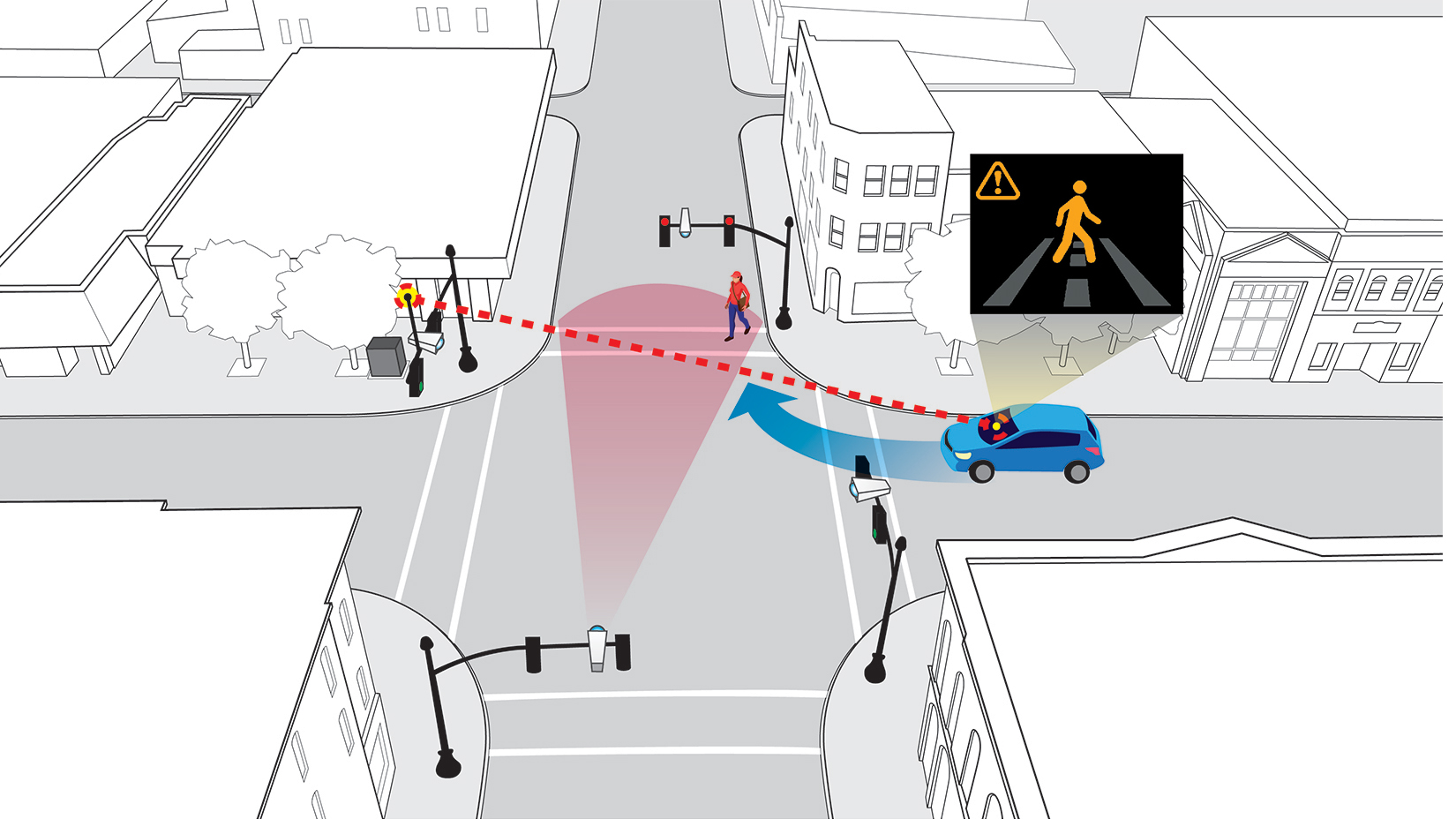

That means tracking those "non-connected" users and using technology to control the elements that can be controlled, such as cars, traffic lights, and signaling systems, in order to protect the ones that can't, like people walking across the street.

From small-town Marysville to big-city Columbus

The Marysville project currently consists of a single intersection, outfitted with four high-resolution video cameras that take in a 300-foot bird's-eye view, along with Honda's object-recognition software. That software is perhaps the most sophisticated part of the system: computer systems can easily detect objects, but detection alone isn't enough for the system to work.

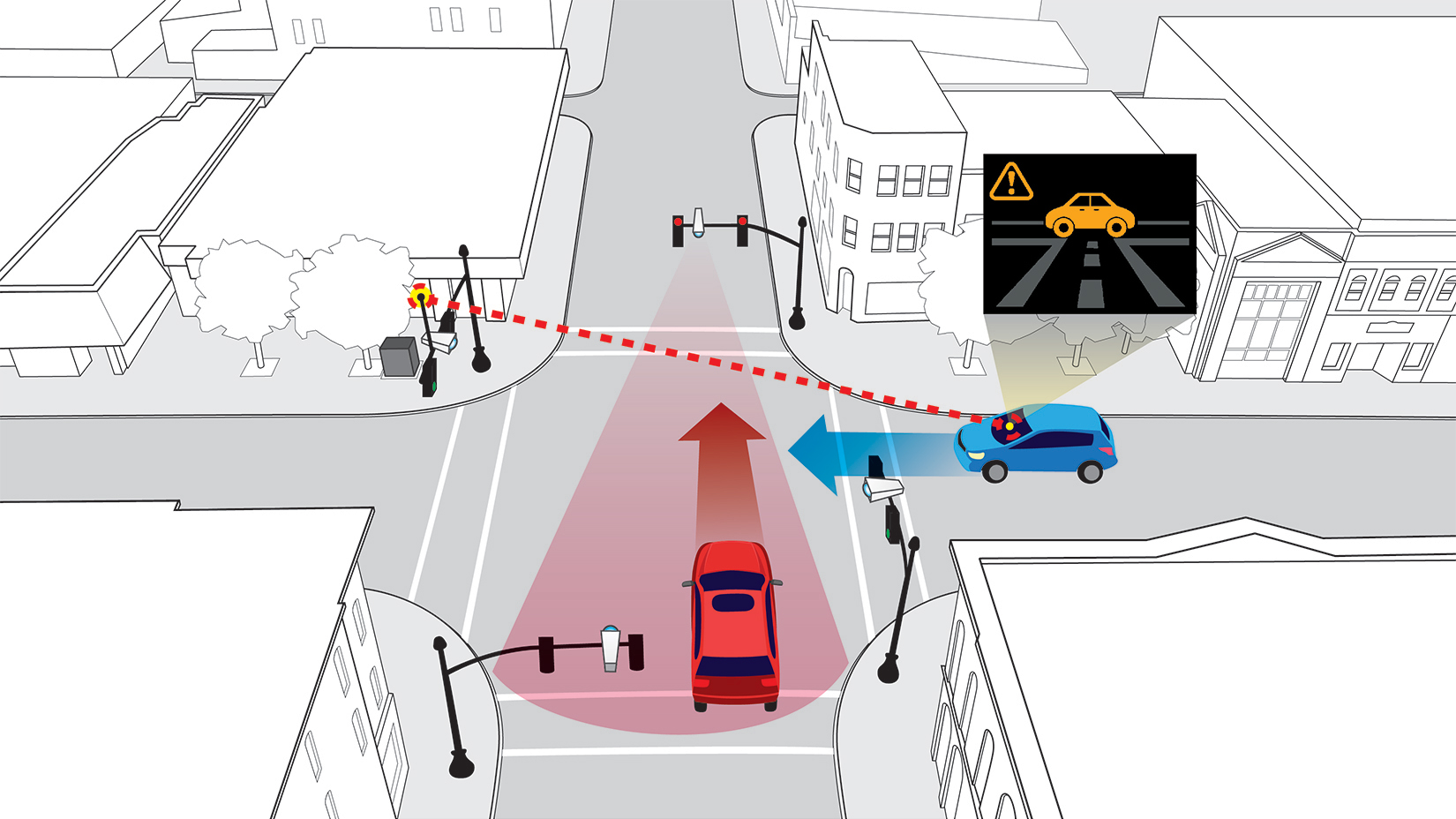

Each object moving in and around the intersection has to be not only seen but also recognized as, say, a pedestrian, dog, cyclist, or car. After this step, known as classification, the system then has to predict the object's behavior: Is that a person who is about to run across the street, or a cyclist who is going to stop for the red light?
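For readers curious how the classify-then-predict step might look in software, here is a minimal Python sketch. Honda hasn't published how its object-recognition software works, so everything here is an assumption: the hypothetical `TrackedObject` and `Zone` types, the feet-based coordinate frame centered on the intersection, the list of vulnerable classes, and the simple constant-velocity model used to guess where each classified object will be a few seconds from now.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical schema for one tracked object after classification.
# Positions are in feet, velocities in feet per second, in an assumed
# coordinate frame centered on the intersection.
@dataclass
class TrackedObject:
    label: str  # e.g. "pedestrian", "cyclist", "dog", "car"
    x: float
    y: float
    vx: float
    vy: float

@dataclass
class Zone:
    """Axis-aligned rectangle, such as a crosswalk, in the same frame."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max

# Classes treated as vulnerable, non-connected road users (assumed list).
VULNERABLE = {"pedestrian", "cyclist", "dog"}

def predict_position(obj: TrackedObject, t: float) -> Tuple[float, float]:
    """Constant-velocity guess of where the object will be after t seconds."""
    return obj.x + obj.vx * t, obj.y + obj.vy * t

def will_enter(obj: TrackedObject, zone: Zone,
               horizon: float = 3.0, step: float = 0.1) -> bool:
    """True if the predicted path enters the zone within `horizon` seconds."""
    for i in range(round(horizon / step) + 1):
        if zone.contains(*predict_position(obj, i * step)):
            return True
    return False

def vulnerable_users_entering(objects: List[TrackedObject],
                              zone: Zone) -> List[TrackedObject]:
    """Filter by class first (classification), then by predicted behavior."""
    return [o for o in objects if o.label in VULNERABLE and will_enter(o, zone)]

crosswalk = Zone(x_min=-5, x_max=5, y_min=0, y_max=12)
scene = [
    TrackedObject("pedestrian", 0, -10, 0, 4.5),  # walking toward the crosswalk
    TrackedObject("cyclist", 0, -10, 0, 0),       # stopped at the curb
    TrackedObject("car", 0, -30, 0, 40),          # not a vulnerable class here
]
print([o.label for o in vulnerable_users_entering(scene, crosswalk)])  # ['pedestrian']
```

Filtering by class before predicting follows the order described above: the stopped cyclist and the car are both visible to the cameras, but only the pedestrian is predicted to step into the crosswalk. A production system would likely rely on far richer motion models than straight-line extrapolation.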

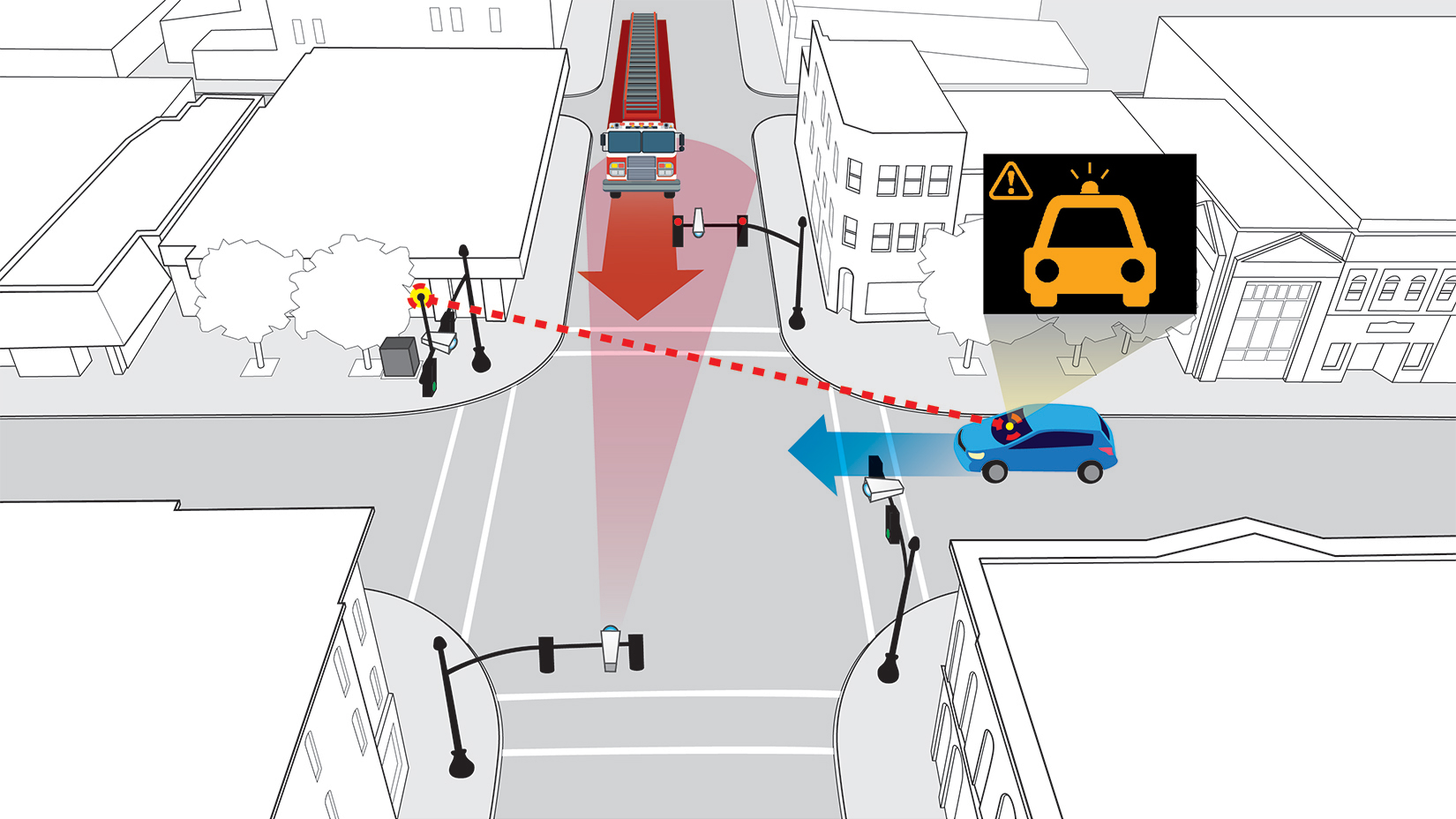

When the system detects, say, a car that is about to run a red light, it transmits a warning to other drivers approaching the intersection, allowing them to virtually see around corners or other obstructions and brake to avoid a collision. The current system uses DSRC (dedicated short-range communications) to communicate with the cars, a form of what's known as vehicle-to-everything, or V2X, communication. Each vehicle needs a special system that recognizes DSRC messages and then displays warnings on the car's head-up display (HUD).
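One simple way to flag a likely red-light runner is basic physics: if a car facing a red signal is closer to the stop line than the distance it needs to brake, it probably won't stop in time. The Python sketch below illustrates that idea under stated assumptions. The braking rate, the hypothetical `ApproachingVehicle` fields, and the warning dictionaries are all illustrative; they are not DSRC's actual message format or the logic used in Marysville.

```python
from dataclasses import dataclass
from typing import Dict, List

# Assumed braking capability in feet per second squared (illustrative only).
MAX_DECEL_FPS2 = 10.0

@dataclass
class ApproachingVehicle:
    vehicle_id: str
    approach: str               # intersection leg, e.g. "north" (hypothetical labels)
    speed_fps: float            # current speed in feet per second
    dist_to_stop_line_ft: float
    facing_red: bool            # signal state for this vehicle's approach

def stopping_distance_ft(speed_fps: float, decel_fps2: float = MAX_DECEL_FPS2) -> float:
    """Braking distance from kinematics: v^2 = 2*a*d, so d = v^2 / (2*a)."""
    return speed_fps ** 2 / (2 * decel_fps2)

def likely_red_light_runner(v: ApproachingVehicle) -> bool:
    """Facing red and still short of the stop line, but unable to stop before it."""
    if not v.facing_red or v.dist_to_stop_line_ft <= 0:
        return False
    return stopping_distance_ft(v.speed_fps) > v.dist_to_stop_line_ft

def build_warnings(vehicles: List[ApproachingVehicle]) -> List[Dict[str, str]]:
    """Hypothetical warnings for drivers on the other approaches.

    These dicts are placeholders, not a real DSRC message format.
    """
    warnings = []
    for runner in filter(likely_red_light_runner, vehicles):
        for other in vehicles:
            # Skip the runner itself and anyone behind it on the same leg.
            if other.approach != runner.approach:
                warnings.append({"to": other.vehicle_id,
                                 "type": "red_light_runner",
                                 "from": runner.approach})
    return warnings

traffic = [
    ApproachingVehicle("A", "north", 44.0, 60.0, True),   # 30 mph, 60 ft out, red
    ApproachingVehicle("B", "east", 30.0, 200.0, False),  # cross traffic with green
    ApproachingVehicle("C", "north", 20.0, 150.0, True),  # can stop comfortably
]
print(build_warnings(traffic))
# [{'to': 'B', 'type': 'red_light_runner', 'from': 'north'}]
```

A real system would also factor in driver reaction time and whether the car is already slowing down; this check only shows the core comparison between stopping distance and distance to the stop line.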


Klaus said 20 vehicles were initially outfitted with the systems, mainly ones driven by Honda employees who work at nearby research and development facilities. He said up to 200 cars will eventually take part in the test, including municipal vehicles, especially those used by first responders.

“You need to control the lights for emergency vehicles,” Klaus said, and warn other nearby drivers of, say, an approaching ambulance. He pointed out that unlike red-light camera systems, these smart intersections are meant not to give out tickets but to actually control the traffic.

The Marysville intersection was chosen for the pilot program because researchers were looking for "a representative intersection where there were visual obstructions and enough chaos to distract drivers," Klaus explained. At the Marysville location, for example, you cannot see around the corners. By choosing such a challenging spot, the researchers hope to apply the lessons learned in the smaller town to bigger cities like nearby Columbus, which has an extensive smart city program underway.

DSRC or 5G V2X? It won’t matter

While there is some debate about which wireless technology will ultimately support active safety systems and future autonomous cars, Honda chose to work with DSRC because it has been more thoroughly tested and is more widespread, Klaus told Digital Trends. NHTSA initially indicated that DSRC would be the standard, and brands like Cadillac and Audi began putting it in some models. However, the Trump administration has since backtracked on the issue, creating some confusion in the marketplace as some vendors push 5G instead.

The competing 5G V2X approach, however, hasn't been rolled out or tested to the same degree as DSRC. And DSRC is a close relative of Wi-Fi, while 5G V2X builds on cellular network technology.

“It’s about quality and coverage,” Klaus said. “DSRC is proven, but for the next step we could simulate 5G. We’re agnostic.”

As for the test drivers, who are all volunteers, the researchers are focusing on warnings for three critical situations: red-light runners that could cause a fatal T-bone accident, pedestrians in the intersection who could be injured, and emergency vehicles that need the right of way. Warnings appear as flashing icons in the head-up display, along with an audible alert.

The ultimate goal, of course, is to have active safety systems in cars that automatically brake when they receive a warning about, say, a jaywalker or car running a red light. But Klaus also noted that one wouldn’t necessarily have to buy a new car to take advantage of the smarter intersections; older cars and trucks could be retrofitted with compatible warning systems.

Which kinds of warnings work best in these smart intersections of the future remains a critical part of the research. Do yellow or red icons that pop up on the windshield get a quicker response from drivers than audible bells and alarms?

“The timing of the warning is also important,” Klaus said. “Not every situation is highly dynamic like a red light runner. Sometimes it’s just a warning about traffic congestion ahead.”

He pointed out that there will be a lot to learn from the drivers and the data they generate, with implications for everything from safety and traffic flow to infrastructure changes that could help make cities smarter.

“We’re really just scratching the surface right now,” said Klaus.