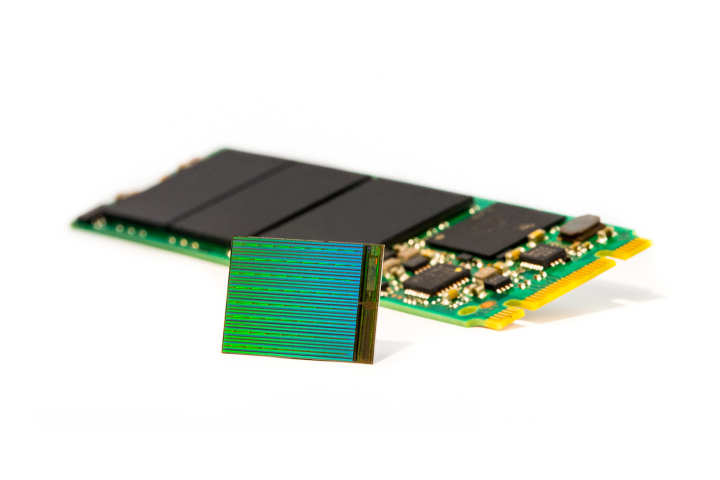

What’s most impressive about Google’s data is just how clean it is. The flash chips themselves are standard off-the-shelf parts from four different manufacturers (the same chips you’d find in almost any commercial SSD) and include MLC, SLC, and eMLC, the three most common varieties. The other half of the SSD is the controller and code, but Google takes that variable out of the equation by using only custom PCIe controllers. That means any errors will either be consistent across all devices, or highlight the differences between manufacturers and flash types.

Read errors are more common than write errors

Schroeder classifies the errors into two different categories: transparent and non-transparent. Errors that the drive corrects and moves past without alerting the user are transparent, and those that cause a user-facing error are non-transparent.

Transparent errors are those corrected by the drive’s internal error correction code, resolved by retrying the operation, or caused by the drive failing to erase a block. These aren’t critical errors, and the drive can work around them. The real issue is non-transparent errors.

These errors occur when the error is larger than the drive’s error correction code can handle, or when simply attempting the operation again doesn’t do the trick. The term also applies to errors when accessing the drive’s metadata, or when an operation times out after three seconds.
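The two categories can be sketched as a small classifier. This is only an illustration of the taxonomy described above, not code from the study: the `Operation` record and its field names are hypothetical, and only the three-second timeout comes from the article.

```python
from dataclasses import dataclass

TIMEOUT_SECONDS = 3.0  # timeout threshold quoted in the article


@dataclass
class Operation:
    """Hypothetical record of one drive operation (field names are assumptions)."""
    corrected_by_ecc: bool = False      # error fixed by the error correction code
    succeeded_on_retry: bool = False    # operation worked after being retried
    erase_failed: bool = False          # drive failed to erase a block
    uncorrectable: bool = False         # error too large for the correction code
    failed_after_retries: bool = False  # retrying did not help
    metadata_error: bool = False        # error while accessing drive metadata
    duration_seconds: float = 0.0       # how long the operation took


def classify(op: Operation) -> str:
    """Return 'non-transparent', 'transparent', or 'ok' for one operation."""
    # Anything the drive cannot hide from the user is non-transparent.
    if (op.uncorrectable or op.failed_after_retries or op.metadata_error
            or op.duration_seconds > TIMEOUT_SECONDS):
        return "non-transparent"
    # Errors the drive handles on its own are transparent.
    if op.corrected_by_ecc or op.succeeded_on_retry or op.erase_failed:
        return "transparent"
    return "ok"


if __name__ == "__main__":
    print(classify(Operation(corrected_by_ecc=True)))
    print(classify(Operation(duration_seconds=3.5)))
```

Checking the non-transparent conditions first means an operation that was retried and still failed is never mislabeled as transparent.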

Among these non-transparent errors, the most common is the final read error. This occurs when a drive attempts to read data and the operation fails even after multiple attempts. By Schroeder’s measurement, these appear in anywhere from 20 to 63 percent of drives. There’s also a strong correlation between final read errors and errors the drive can’t correct, suggesting that final read errors are largely caused by more corrupted bits than the error correction code can fix.

Non-transparent write errors, on the other hand, are quite rare, only popping up in 1.5 to 2.5 percent of drives. Part of the reason is that when a write operation fails in one part of a drive, the drive can retry it in another location. As Schroeder puts it, “A failed read might be caused by only a few unreliable cells on the page to be read, while a final write error indicates a larger scale hardware problem.”

But what’s the practical value of all that data about things going wrong? To put it in context, we need to think about errors in terms of the raw bit error rate, or RBER. This rate, “defined as the number of corrupted bits per number of total bits read,” is the most common metric for measuring drive reliability, and allows Schroeder to evaluate drive failure rates across several factors: wear-out from erase cycles, physical age, workload, and previous error rate.
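The quoted definition is a simple ratio. A minimal sketch, using made-up numbers rather than figures from the study (the function name is also just an illustration):

```python
def raw_bit_error_rate(corrupted_bits: int, total_bits_read: int) -> float:
    """Corrupted bits divided by total bits read, per the quoted definition."""
    if total_bits_read <= 0:
        raise ValueError("total_bits_read must be positive")
    return corrupted_bits / total_bits_read


if __name__ == "__main__":
    # Hypothetical example: 5 corrupted bits observed in one billion bits read.
    print(raw_bit_error_rate(5, 10**9))
```

Because the rate is normalized by the amount of data read, it allows drives with very different workloads to be compared on the same scale.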

Drive age has a more profound correlation with failure than usage.

While the study finds that all of these elements factor into a drive’s failure rate, with the exception of previous errors, some of them are more detrimental than others. Importantly, physical age has a much more profound correlation with RBER than erase cycles, which suggests other non-workload factors are at play. It also means that tests that artificially increase read and write cycles to determine failure rates may not be generating accurate results.

SSDs are more reliable than mechanical disks

Uncorrectable errors are one thing, but how often does a drive actually fail completely? Schroeder tracks this by measuring how often a drive was pulled from use to be repaired. The worst drives had to be repaired every few thousand drive days, while the best went 15,000 drive days without needing maintenance. Only between five and ten percent of drives were permanently pulled within four years of entering service, a much lower rate than mechanical disk drives.
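A “drive day” is one drive in service for one day, so the repair interval is total drive days divided by the number of repairs. A rough sketch of that arithmetic, with a hypothetical fleet whose numbers are invented for illustration and do not come from the study:

```python
def drive_days_per_repair(drives: int, days_in_service: int, repairs: int) -> float:
    """Average drive days between repairs across a fleet."""
    if repairs <= 0:
        raise ValueError("repairs must be positive")
    total_drive_days = drives * days_in_service  # one drive for one day = 1 drive day
    return total_drive_days / repairs


if __name__ == "__main__":
    # Hypothetical fleet: 1,000 drives, each in service for 365 days, 25 repairs.
    print(drive_days_per_repair(1000, 365, 25))
```

A fleet like this one would land near the best case the article describes, while a model needing a repair every few thousand drive days would log several times as many repairs over the same period.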

Which brings us to the final section of the study, which contextualizes the data in a more tangible way. It starts by downplaying the importance of RBER, pointing out that while it can be a more relevant indicator of hardware problems than other metrics, it’s not good at predicting when failures will occur.

That doesn’t mean we can’t draw some conclusions from it. For one, SLC chips, which are generally more expensive than MLC chips, aren’t actually more reliable than cheaper options. It is true that SLC chips are less error-prone, but the additional errors on MLC chips do not necessarily translate to a higher rate of non-transparent errors, or a higher rate of repair. It seems that firmware error correction does a good job of working around these problems, thus obscuring the difference in actual use.

Drives based on eMLC do seem to have an edge, though. They were less likely to have a fault, and were the least likely to need replacement.

Flash drives also don’t have to be replaced nearly as often as mechanical disk drives, but the error rate of SSDs tends to be higher. Fortunately, the firmware does a good job of preventing errors from becoming a problem.

Some drives are better than others

The study identifies a few key areas where more research is needed. Previous errors on a drive are a good indicator of future uncorrectable errors, a point which Schroeder says is already being investigated to see if bad drives can be identified early in their life.

This is reinforced by the fact that most drives are either quite prone to failure, or solid for the majority of their lifespan. In other words, a bad drive is likely to show signs of being bad early on. If manufacturers know what to look for, they may be able to weed these drives out during quality control. Similarly, owners might be able to identify a problem drive by examining its early performance.

Google did look at drives from a number of companies, but it declined to name any names in this study, as has happened in past examinations of mechanical drives. So while we now know that SSDs are more reliable than mechanical drives, and that the memory type used doesn’t have a huge impact on longevity, it’s still not clear which brand is the best for reliability.