Nvidia has just announced the AI Workbench, which promises to make creating generative AI models a lot easier and more manageable. The workspace will allow developers to build and deploy these models on various Nvidia AI platforms, including PCs and workstations. Are we about to be flooded with even more AI content? Perhaps not, but it certainly sounds like the AI Workbench will make the whole process significantly more approachable.

In the announcement, Nvidia notes that there are hundreds of thousands of pretrained models currently available; however, customizing them takes time and effort. This is where the Workbench comes in, simplifying the process. Developers will now be able to customize and run generative AI with minimal effort, pulling together whichever enterprise-grade models they need. The Workbench tool supports various frameworks, libraries, and SDKs from Nvidia’s own AI platform, as well as models and code hosted on repositories like GitHub and Hugging Face.

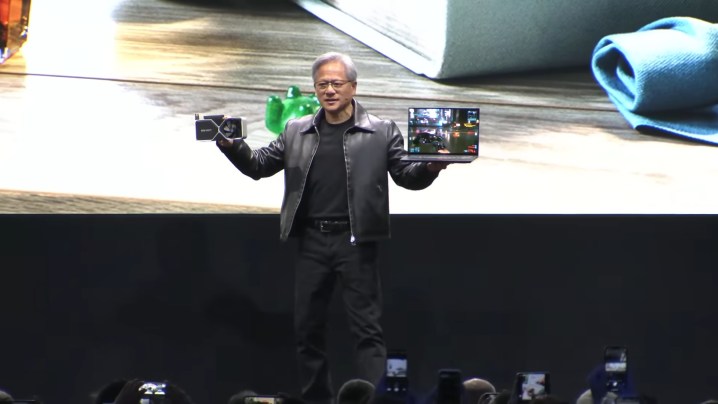

Once customized, the models can be shared across multiple platforms with ease. Devs running a PC or workstation with an Nvidia RTX graphics card will be able to work with these generative models on their local systems and scale up to data center and cloud computing resources when necessary.

“Nvidia AI Workbench provides a simplified path for cross-organizational teams to create the AI-based applications that are increasingly becoming essential in modern business,” said Manuvir Das, Nvidia’s vice president of enterprise computing.

Nvidia has also announced the fourth iteration of its Nvidia AI Enterprise software platform, which is aimed at offering the tools required to adopt and customize generative AI. The platform is made up of multiple tools, including Nvidia NeMo, a cloud-native framework that lets users build and deploy large language models (LLMs) like the ones that power ChatGPT or Google Bard.

Nvidia is tapping into the AI market more and more at just the right time, and not just with the Workbench, but also with tools like Nvidia ACE for games. With generative AI models like ChatGPT being all the rage right now, it’s safe to assume that many developers will be interested in Nvidia’s easy, one-stop-shop solution. Whether that’s a good thing for the rest of us remains to be seen, as some people use generative AI for questionable purposes.

Let’s not forget that AI can get pretty unhinged all on its own, like in the early days of Bing Chat, and the more people who start creating and training these various models, the more instances of problematic or crazy behavior we’re going to see out in the wild. But assuming everything goes well, Nvidia’s AI Workbench could certainly simplify the process of deploying new generative AI for a lot of companies.
