AMD looks ready to fight. At its Advancing AI event, the company finally launched its Instinct MI300X AI GPU, which we first heard about a few months ago. The exciting development is the performance AMD is claiming compared to the green AI elephant in the room: Nvidia.

Spec-for-spec, AMD claims the MI300X beats Nvidia’s H100 AI GPU in memory capacity and memory bandwidth, and it’s capable of 1.3 times the theoretical performance of the H100 in FP8 and FP16 operations. AMD showed this off with two large language models (LLMs), using a medium and a large kernel. In those tests, the MI300X showed an improvement of between 1.1x and 1.2x over the H100.

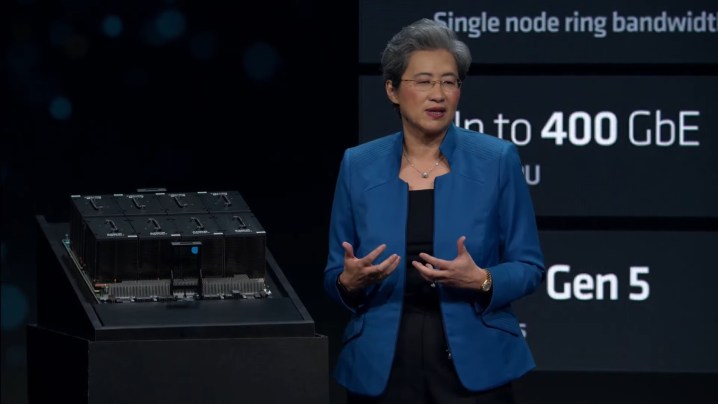

In a moment that looks ripped straight out of an Nvidia conference, AMD’s CEO Lisa Su introduced the MI300X Platform, which combines eight of the GPUs on a single board. AMD says these boards offer the same training performance as Nvidia’s H100 HGX and 1.6 times the inference performance. In addition, AMD says you’re able to run two LLMs per system, while H100 HGX can only handle one.

To illustrate how big of a deal this is, AMD brought out Microsoft’s Chief Technology Officer Kevin Scott to talk about how AMD and Microsoft are working together on AI. It’s important to highlight that the wildly popular ChatGPT runs on Microsoft servers, and it was originally trained on thousands of Nvidia GPUs.

In addition to the MI300X, AMD announced the MI300A, which is the first APU for AI, according to AMD. This combines AMD’s CDNA 3 AI GPU architecture with 24 Zen 4 CPU cores, along with 128GB of HBM3 memory. AMD says this is a balance between AI performance and high-performance computing, offering the CPU prowess that we recently saw on Threadripper 7000 to data centers.

Despite jumping on the AI bandwagon with the rest of the tech world, AMD has taken a back seat to Nvidia up to this point. Nvidia started investing big in AI years ago, giving it a massive head start over AMD and Intel. That early investment has catapulted Nvidia into becoming a trillion-dollar company this year.

With a GPU cluster that’s positioned to challenge Nvidia’s H100, as well as partnerships with Meta and Microsoft, AMD is finally entering the ring. AMD may exit bruised and bloodied, but it still looks like AMD is ready to put up a fight.