Following the introduction of Copilot, its latest smart assistant for Windows 11, Microsoft is yet again advancing the integration of generative AI with Windows. At the ongoing Ignite 2023 developer conference in Seattle, the company announced a partnership with Nvidia on TensorRT-LLM that promises to elevate user experiences on Windows desktops and laptops with RTX GPUs.

The new TensorRT-LLM release is set to introduce support for new large language models, making demanding AI workloads more accessible. Particularly noteworthy is its compatibility with OpenAI’s Chat API, which lets applications built on that API run locally, rather than in the cloud, on PCs and workstations with RTX GPUs that have at least 8GB of VRAM.

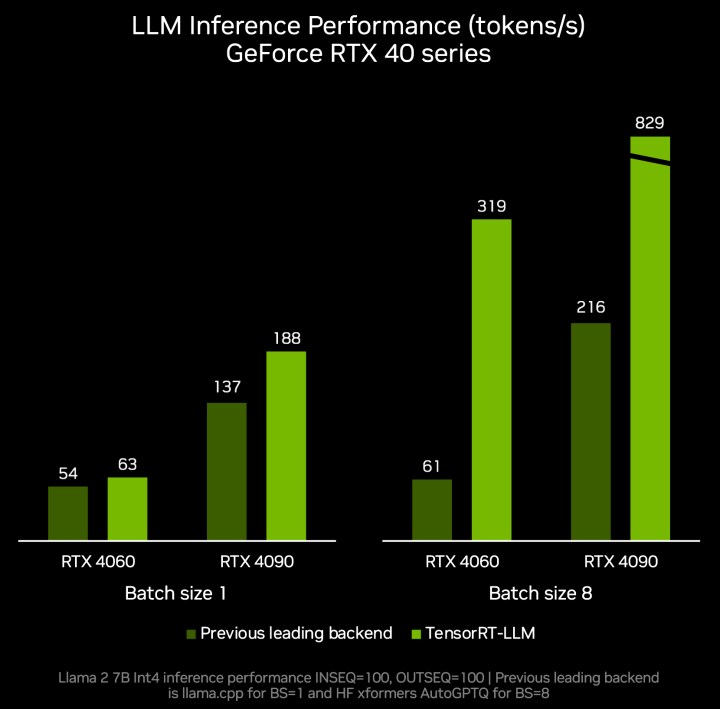

Nvidia’s TensorRT-LLM library was released just last month and is said to help improve the performance of large language models (LLMs) using the Tensor Cores on RTX graphics cards. It provides developers with a Python API to define LLMs and build TensorRT engines faster without deep knowledge of C++ or CUDA.

With the release of TensorRT-LLM v0.6.0, navigating the complexities of custom generative AI projects will be simplified thanks to the introduction of AI Workbench. This is a unified toolkit facilitating the quick creation, testing, and customization of pretrained generative AI models and LLMs. The platform is also expected to enable developers to streamline collaboration and deployment, ensuring efficient and scalable model development.

Recognizing the importance of supporting AI developers, Nvidia and Microsoft are also releasing DirectML enhancements. These optimizations accelerate foundational AI models like Llama 2 and Stable Diffusion, providing developers with increased options for cross-vendor deployment and setting new standards for performance.

The TensorRT-LLM update also promises a substantial improvement in inference performance, with speeds up to five times faster. It adds support for more popular LLMs, including Mistral 7B and Nemotron-3 8B, and extends fast and accurate local LLMs to a broader range of portable Windows devices.

The integration of TensorRT-LLM for Windows with OpenAI’s Chat API through a new wrapper will allow hundreds of AI-powered projects and applications to run locally on RTX-equipped PCs. This will potentially eliminate the need to rely on cloud services and ensure the security of private and proprietary data on Windows 11 PCs.
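To make the idea concrete, here is a minimal sketch of what "running locally" could look like from an application's point of view. It assumes the wrapper exposes the same request shape as OpenAI's Chat Completions endpoint on a local address. The local URL, port, and model name below are hypothetical placeholders, not values confirmed by Nvidia or Microsoft. The only change between cloud and local use would be the base URL.

```python
import json
import urllib.request

# OpenAI's hosted API base URL.
CLOUD_BASE_URL = "https://api.openai.com/v1"
# Assumption: a local, OpenAI-compatible server is listening here.
# The host, port, and path are hypothetical placeholders.
LOCAL_BASE_URL = "http://localhost:8000/v1"


def build_chat_request(base_url, model, user_message, api_key="unused-locally"):
    """Build a Chat Completions style POST request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_reply(response_body):
    """Pull the assistant's text out of a Chat Completions style response."""
    return json.loads(response_body)["choices"][0]["message"]["content"]


def chat(base_url, model, user_message):
    """Send the request and return the reply (needs a server at base_url)."""
    request = build_chat_request(base_url, model, user_message)
    with urllib.request.urlopen(request) as response:
        return extract_reply(response.read())


if __name__ == "__main__":
    # "local-llama-2" is a hypothetical model name for illustration only.
    request = build_chat_request(LOCAL_BASE_URL, "local-llama-2", "Hello!")
    print(request.full_url)
```

Because the request and response shapes stay the same, an existing app would in principle only need to swap `CLOUD_BASE_URL` for the local address, and prompts and data would never leave the machine.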

AI on Windows 11 PCs still has a long way to go. With AI models becoming increasingly available and developers continuing to innovate, harnessing the power of Nvidia’s RTX GPUs could be a game-changer. However, it is too early to say whether this will be the final piece of the puzzle that Microsoft desperately needs to fully unlock the capabilities of AI on Windows PCs.
