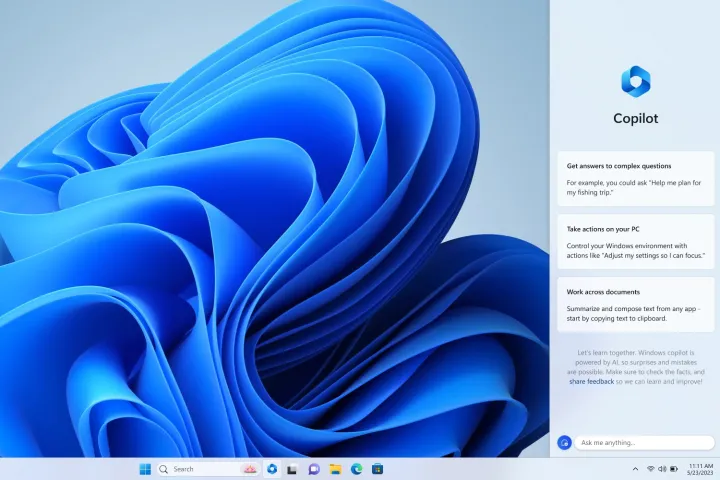

At Microsoft Build 2023, Microsoft announced that Windows will get its own dedicated AI “copilot.” It can be docked in a side panel that stays open while you use other applications and parts of the operating system.

Microsoft has invested heavily in AI in recent months, and it was only a matter of time before that push reached Windows. The time is now, and it’s coming in a big way.

This “copilot” approach is the same one being built into specific Microsoft apps, such as Edge, as well as Word and the rest of the Office 365 suite. In Windows, the copilot will be able to provide personalized answers, help you take actions within Windows, and, most importantly, interact with open apps contextually.

The AI behind it is, of course, Microsoft’s own Bing Chat, which is based on OpenAI’s GPT-4 large language model. Beyond that, the copilot also has access to the plugins available for Bing Chat, which Microsoft says can help users be more productive, bring ideas to life, collaborate, and “complete complex projects.”

Microsoft also calls Windows the “first PC platform to announce centralized AI assistance for customers,” setting it apart from alternatives like macOS and ChromeOS. Right now, you have to install the latest version of the Edge browser to access Bing Chat; the Windows Copilot would, in theory, build generative AI into every Windows 11 computer.

Microsoft says the Windows Copilot will start to become available as a preview for Windows 11 sometime in June.

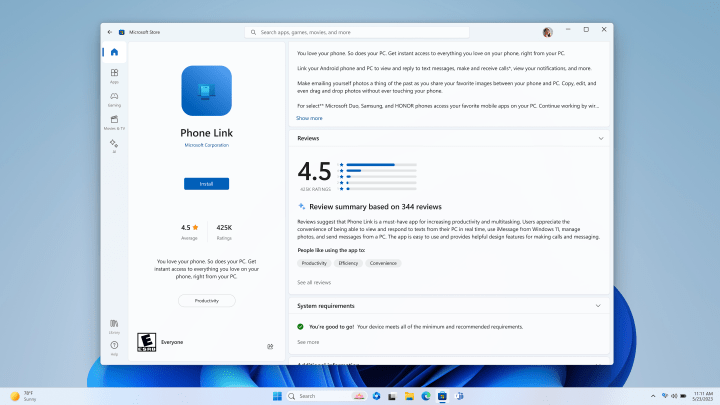

Microsoft is also creating a permanent spot for AI-driven apps in the Microsoft Store called “AI Hub.” Coming to the Microsoft Store soon, it will be a one-stop shop highlighting AI apps and experiences built both by Microsoft and by third-party developers. The store will even offer AI-generated review summaries, which condense an app’s reviews into a single summary.
