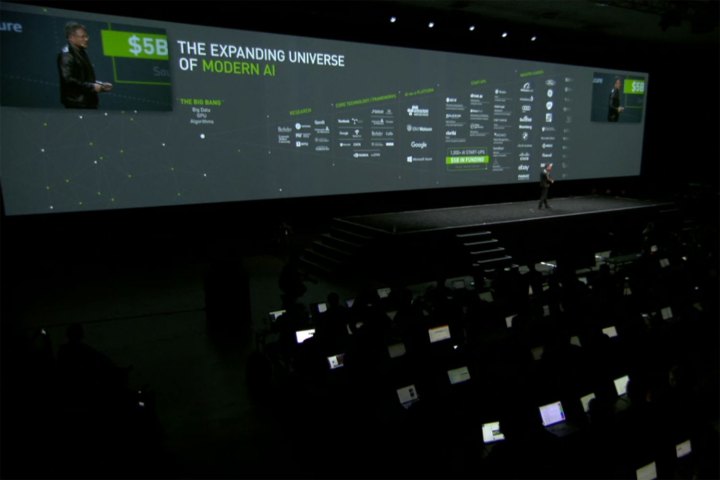

Nvidia is aware of the changing face of GPU development. Its opening keynote focused exclusively on non-gaming applications, some of which have serious implications for everything from self-driving cars to autonomous drones.

Pascal rolls out for research, first

Nvidia’s latest GPU architecture, Pascal, has been long anticipated by gamers, but its introduction at GTC 2016 instead focused on extreme high-end computing. We’ve covered the announcement in depth. While the hardware shown so far isn’t designed to handle gaming, we’ll probably see a new video card line based on Pascal sometime this summer.

Nvidia opens its toolbox

Nvidia CEO and co-founder Jen-Hsun Huang took the stage at the GTC keynote and jumped right in by talking about the Nvidia SDK. This comprehensive package of tools, some of which, like PhysX, have long been in use, is part of a move by the green team to expand the use of GPUs beyond the gaming space.

CUDA cores are key to that approach. The distributed network of thousands of cores in a GPU is perfect for quickly managing large data sets. That means new libraries like Graph Analytics can run through thousands or millions of factors and compare them quickly, creating a neural map of the cross-points of those different datasets.

These tools also extend to other non-gaming uses. Nvidia’s IndeX is an SDK built specifically for dealing with environmental and geographic data. These datasets can easily zoom past terabytes of storage, and the systems that manage them typically require massive amounts of memory. IndeX should help researchers use GPU-accelerated computing to manage and draw conclusions from this data much faster than they ever could before.

Becoming artificially intelligent

Over the last five years, AI has morphed from a sci-fi pipe dream to a reality. Deep learning techniques have moved from simple image recognition to autonomous robots, speech understanding, and even playing Go.

But it doesn’t stop there.

Nvidia is moving towards AI as a platform, where everything from image recognition to sorting and teaching will be handled by deep learning networks. These networks don’t just run commands; they interpret new ones, teach themselves to solve problems, and apply themselves to new jobs.

And at the heart of that effort are GPUs. With vast amounts of onboard memory and a powerful set of thousands of CUDA cores working together, the chips are perfectly suited for AI and deep learning. That’s largely thanks to Nvidia’s Tesla M40 and M4 cards.

“I don’t think deep learning is going to be in one industry, I think it’s going to be in every industry,” explained Huang.

In a particularly striking demo during the keynote, an unsupervised Facebook AI system was fed 20,000 images from a particular art period. Instead of being told what’s important and what isn’t, the AI learned the style and eventually managed to create its own, derivative art style.

Then, the team can feed back “landscape” or “forest” and the AI will use what it’s learned to “paint” a new, original picture. It can even manage multiple factors, like cutting the clouds from a sunset on the beach.

The same algorithm, when faced with videos of people playing sports and games, is able to differentiate between them with staggering accuracy. In the demo, the system was able to tell the difference between rafting and kayaking, and correctly identified a mountain unicyclist, something even most people might second-guess if they saw it.

Recently, an AI system built by Google’s DeepMind program was able to beat one of the best Go players in the world. The massively complicated game has long been a fantasy of programmers, and the machine’s 4-1 victory only validates the idea that AI is finally here in a meaningful way.

Photo-realistic virtual reality

One of Nvidia’s most impressive DesignWorks tools, IRay, creates a more accurate, lifelike light map than computers are able to render in real time. It does so by slowly calculating the light rays from different points to create a perfect lighting map, but it takes a while to run, which makes it useful only for work such as rendering films or producing an architectural presentation.

Now, Nvidia is bringing that technology to VR to help create photorealistic lighting and textures for someone wearing a headset. It works by generating 100 light probes, each with a unique view of the way light reflects and shines around the room. These 4K renders take about an hour each to run on a DGX-1, so a single experience needs roughly 100 hours of rendering, or a little over four days if the probes are rendered one after another.

When the headset is donned, the graphics card, which doesn’t have to be terribly powerful, compares the viewpoint to the light probes and accurately renders a result. The demo was appropriately spectacular, showing a picture-perfect recreation of lighting and reflections. Don’t expect to see this in games soon, though; it only works from a fixed position, and the scene cannot change in real time.
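
Nvidia didn’t explain how the headset’s graphics card compares the viewpoint to the probes, so the following is only a rough way to picture the idea, not the company’s method. It assumes each pre-rendered probe has a known position, that the viewer makes small head movements around one spot, and that the nearest few probes are blended by inverse distance. The function name `blend_weights`, the parameter `k`, and the probe layout are all hypothetical.

```python
import math

def blend_weights(viewpoint, probe_positions, k=3):
    """Return {probe_index: weight} for the k probes nearest to the viewpoint.

    Illustrative only: inverse-distance weighting is an assumption,
    not something described in the keynote.
    """
    nearest = sorted(
        (math.dist(viewpoint, pos), i) for i, pos in enumerate(probe_positions)
    )[:k]
    # A viewpoint sitting exactly on a probe uses that probe alone.
    if nearest[0][0] == 0.0:
        return {nearest[0][1]: 1.0}
    inverse = {i: 1.0 / d for d, i in nearest}
    total = sum(inverse.values())
    return {i: w / total for i, w in inverse.items()}

# Hypothetical layout: four probes at the corners of a 2 m square, at head height.
probes = [(0.0, 1.6, 0.0), (2.0, 1.6, 0.0), (0.0, 1.6, 2.0), (2.0, 1.6, 2.0)]
weights = blend_weights((0.5, 1.6, 0.5), probes)
```

The expensive part, rendering each probe, happens ahead of time. At view time the work is a lookup and a weighted mix, which fits the claim that the headset’s graphics card doesn’t need to be terribly powerful.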

There’s also a lighter version of IRay VR that’s designed to run on Android devices and simple VR headsets such as Google Cardboard. It can take a scene from 3ds Max or another modeling tool and generate a photo-sphere from a single point. It’s a great way to show off the technology on readily available hardware.

AI, you can drive my car

While the automotive section goes into greater detail on Nvidia’s Drive program, it’s definitely worth mentioning that developers are doing a lot of work in this space. To help support that effort, Nvidia is rolling out a massive set of SDKs just for self-driving cars, as well as a special chip designed specifically for them, with support for up to 12 high-definition cameras.

What about gaming?

Nvidia is no longer focused solely on producing video cards for gamers. The green team has found a new niche for its products, and Nvidia’s plans from the keynote cover a wide range of industries that have nothing to do with gaming. Whether that’s deep learning networks, AI research, or expanding our imaginations in virtual worlds, it’s starting to look like there’s nothing a fast GPU can’t do.
