When Microsoft announced Copilot+ PCs a few weeks back, one question reigned supreme: Why can’t I just run these AI applications on my GPU? At Computex 2024, Nvidia finally provided an answer.

Nvidia and Microsoft are working together on an Application Programming Interface (API) that will allow developers to run their AI-accelerated apps on RTX graphics cards. This includes the various Small Language Models (SLMs) that are part of the Copilot runtime, which are used as the basis for features like Recall and Live Captions.

With the API, developers can allow apps to run locally on your GPU instead of the NPU. This opens the door not only to more powerful AI applications, as GPUs generally have higher AI capabilities than NPUs, but also to AI features on PCs that don’t currently fall under the Copilot+ umbrella.

It’s a great move. Copilot+ PCs currently require a Neural Processing Unit (NPU) that’s capable of at least 40 Tera Operations Per Second (TOPS). At the moment, only the Snapdragon X Elite satisfies that criterion. GPUs, however, have much higher AI processing capabilities, with even low-end models reaching 100 TOPS and higher-end options scaling well beyond that.

In addition to running on the GPU, the new API adds retrieval-augmented generation (RAG) capabilities to the Copilot runtime. RAG gives the AI model access to specific information locally, allowing it to provide more helpful solutions. We saw RAG on full display with Nvidia’s Chat with RTX earlier this year.
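To make the idea concrete, here is a minimal sketch of the retrieval step behind RAG. It is not Nvidia's or Microsoft's API, and every name in it (`retrieve`, `build_prompt`, `LOCAL_NOTES`) is hypothetical. It assumes a toy word-count similarity where a real system would most likely use learned embeddings, and it stops at building the prompt rather than calling a model.

```python
import math
import re
from collections import Counter

# Hypothetical local documents standing in for files on your PC.
LOCAL_NOTES = [
    "The quarterly budget review is scheduled for Friday at 10 am.",
    "The office printer is on the third floor next to the kitchen.",
    "Vacation requests must be submitted two weeks in advance.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Prepend the retrieved local context to the user's question."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("Where is the office printer?", LOCAL_NOTES))
```

The prompt that comes out of `build_prompt` is what would be handed to the language model, which is how the model ends up answering from your local information instead of only its training data.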

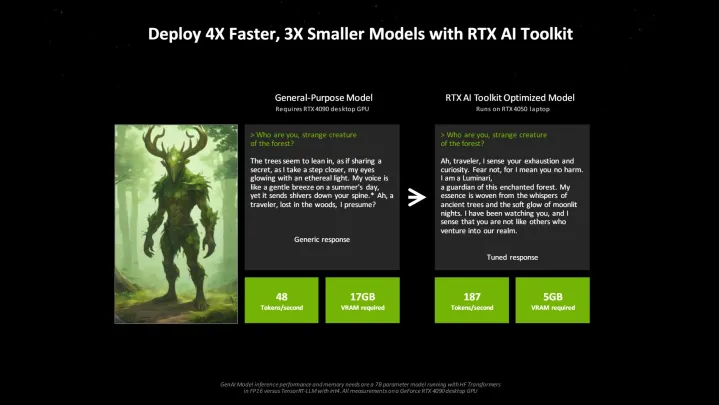

Outside of the API, Nvidia announced the RTX AI Toolkit at Computex. This developer suite, arriving in June, combines various tools and SDKs that allow developers to tune AI models for specific applications. Nvidia says that by using the RTX AI Toolkit, developers can make models four times faster and three times smaller compared to using open-source solutions.

We’re seeing a wave of tools that enable developers to build specific AI applications for end users. Some of that is already showing up in Copilot+ PCs, but I suspect we’ll see far more AI applications at this point next year. We have the hardware to run these apps, after all; now we just need the software.