Whether we like it or not, the metaverse is coming, and companies are trying to make it as realistic as possible. To that end, researchers at Carnegie Mellon University have developed haptics that mimic sensations around the mouth.

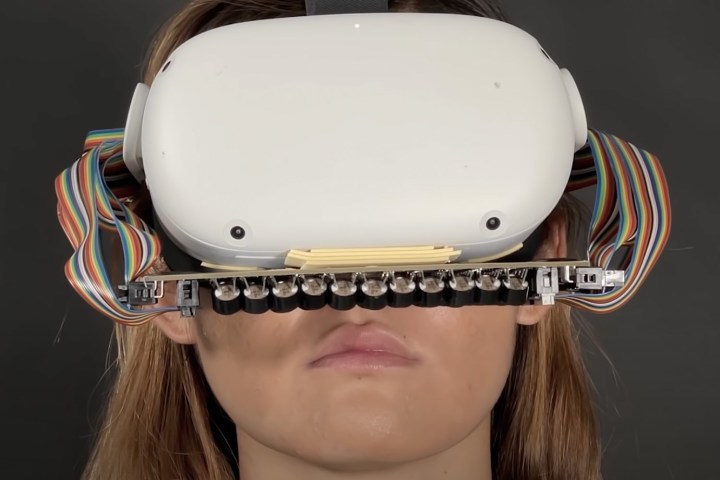

The Future Interfaces Group at CMU created a haptic device that attaches to a VR headset. The device contains a grid of ultrasonic transducers that emit sound at frequencies too high for humans to hear. When those ultrasonic waves are focused tightly enough onto a point, they can create pressure sensations on the skin.
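For readers curious how a grid of transducers can "focus" sound, here is a minimal Python sketch of the general phased-array idea. It is not the CMU team's code. Each transducer is delayed so that every wave reaches a chosen focal point at the same instant, where the waves add up to a small spot of higher pressure. The grid size, the 10 mm spacing, the 40 kHz carrier, and all function names are hypothetical assumptions; the article does not give the device's specifications.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # speed of sound in air at roughly 20 °C
CARRIER_HZ = 40_000.0       # hypothetical carrier frequency (not stated in the article)
PITCH_M = 0.01              # hypothetical 10 mm spacing between transducers


def grid_positions(rows, cols, pitch=PITCH_M):
    """Place a rows x cols transducer grid in the z = 0 plane, centred on the origin."""
    return [
        ((c - (cols - 1) / 2) * pitch, (r - (rows - 1) / 2) * pitch, 0.0)
        for r in range(rows)
        for c in range(cols)
    ]


def focus_delays(positions, focus, c=SPEED_OF_SOUND_M_S):
    """Firing delay (seconds) per transducer so all waves reach `focus` together.

    The transducer farthest from the focus fires first (delay 0); nearer ones
    wait exactly as long as the extra travel time of the farthest one.
    """
    distances = [math.dist(p, focus) for p in positions]
    farthest = max(distances)
    return [(farthest - d) / c for d in distances]


def delays_to_phases(delays, freq=CARRIER_HZ):
    """Convert each delay into the equivalent phase lag in [0, 2*pi) of a continuous carrier."""
    return [(2 * math.pi * freq * t) % (2 * math.pi) for t in delays]


if __name__ == "__main__":
    array = grid_positions(8, 8)
    # Hypothetical focal point 5 cm in front of the centre of the array.
    delays = focus_delays(array, focus=(0.0, 0.0, 0.05))
    phases = delays_to_phases(delays)
    print(f"max delay: {max(delays) * 1e6:.2f} microseconds")
```

Moving a sensation across the lips would then amount to recomputing the delays for a focal point that changes over time, which is one plausible way to build a "motion" out of a sequence of pressure points.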

The mouth was chosen as a test bed because its nerves are so sensitive. The researchers combined pressure sensations to simulate different motions and compiled these combinations into a basic library of haptic commands for motions across the mouth.

Vivian Shen, a doctoral student and one of the paper's authors, explained that taps and vibrations are much easier to produce by changing the timing and frequency modulation.
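The article does not detail how the device produces taps and vibrations, so the following is only a minimal Python sketch of one approach widely used in mid-air ultrasonic haptics. Skin cannot feel a 40 kHz carrier directly, so the carrier's amplitude is modulated at a low rate that touch receptors can detect, and the output is switched on in short bursts to make taps. Note that this sketch uses amplitude modulation, which may differ from the frequency modulation Shen describes; the 40 kHz carrier, the 200 Hz modulation rate, the 20 ms tap length, and all function names are hypothetical.

```python
import math

CARRIER_HZ = 40_000.0  # hypothetical ultrasonic carrier frequency
SAMPLE_RATE = 200_000  # samples per second, comfortably above 2 x the carrier


def am_sample(t, mod_hz, carrier_hz=CARRIER_HZ):
    """Carrier whose amplitude rises and falls at mod_hz (envelope in [0, 1])."""
    envelope = 0.5 * (1 - math.cos(2 * math.pi * mod_hz * t))
    return envelope * math.sin(2 * math.pi * carrier_hz * t)


def tap_gate(t, tap_starts, tap_len=0.02):
    """Return 1.0 inside any tap window [start, start + tap_len), otherwise 0.0."""
    return 1.0 if any(s <= t < s + tap_len for s in tap_starts) else 0.0


def render(duration_s, mod_hz=200.0, tap_starts=None, sample_rate=SAMPLE_RATE):
    """Build a drive signal: a continuous vibration, or taps if tap_starts is given."""
    n = round(duration_s * sample_rate)
    samples = []
    for i in range(n):
        t = i / sample_rate
        # Timing: gate the output on and off to turn a vibration into taps.
        gate = 1.0 if tap_starts is None else tap_gate(t, tap_starts)
        samples.append(gate * am_sample(t, mod_hz))
    return samples
```

Calling `render(0.1)` would give a steady vibration, while `render(0.5, tap_starts=[0.0, 0.2, 0.4])` would give three short taps. In this sketch, `mod_hz` changes how the vibration feels and `tap_starts` changes its rhythm.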

As a proof of concept, the team tested the device on a small group of volunteers. The volunteers strapped on VR goggles (along with the mouth haptics) and went through a series of virtual worlds, such as a racing game and a haunted forest.

In these worlds, the volunteers could feel virtual objects, such as spiders crawling across their mouths or water from a drinking fountain. Shen noted that some volunteers instinctively hit their faces when they felt a spider "crawling" across their mouths.

The researchers' goal is to make the mouth haptics easy and seamless for software engineers to implement.

“We want it to be drag-and-drop haptics. How it works in [user interface design] right now is you can drag and drop color on objects, drag and drop materials and textures and change the scene through very simple UI commands,” says Shen. “We made an animation library that’s a drag-and-drop haptic node, so you can literally drag this haptic node onto things in scenes, like a water fountain stream or a bug that jumps onto your face.”

Unfortunately, the demo was not perfect: some users didn't feel anything at all. Shen noted that because everyone's facial structure is different, it can be difficult to calibrate the haptics for each face. The transducers have to accurately translate the haptic commands into skin sensations for the effect to be convincing.

Regardless, this is a very interesting (if slightly creepy) application of haptics in a virtual environment, and it could go a long way toward making object interaction more realistic.

Researchers at the University of Chicago are also delving into haptics, but using chemicals instead of sound waves. They were able to simulate sensations such as warmth, coolness, and even stinging.

A startup named Actronika showed off a futuristic haptic vest at CES in January. The vest uses "vibrotactile voice-coil motors" to simulate a wide range of vibrations, allowing the wearer to "feel" anything from water droplets to bullets.

As you can see, there are plenty of efforts to make VR immersion a reality. The more new ways we discover to interact with virtual worlds, the closer we get to Mark Zuckerberg's vision of the metaverse.