Researchers at the AI company Vicarious may have just made CAPTCHAs obsolete, however, by creating a machine-learning algorithm that mimics the human brain. To simulate the human capacity for what is often described as “common sense,” the scientists built a computer vision model dubbed the Recursive Cortical Network.

“For common sense to be effective it needs to be amenable to answer a variety of hypotheticals — a faculty that we call imagination,” they noted in a post at their blog.

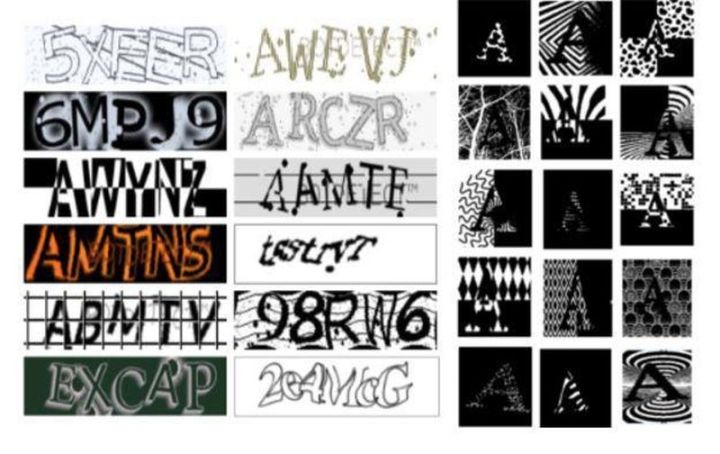

The ability to decipher CAPTCHAs has become something of a benchmark for artificial intelligence research. The new Vicarious model, published in the journal Science, cracks the fundamentals of the CAPTCHA code by parsing the text using techniques derived from human reasoning. People can easily recognize the letter A, for example, even if it’s partly obscured or turned upside down.

As Dileep George, the company’s co-founder, explained to NPR, the RCN takes far less training and repetition to learn to recognize characters, which it does by building its own version of a neural network. “So if you expose it to As and Bs and different characters, it will build its own internal model of what those characters are supposed to look like,” he said. “So it would say, these are the contours of the letter, this is the interior of the letter, this is the background, etc.”

These various features are put into groups, creating a hierarchical “tree” of related features. After several passes, the data is given a score for evaluation, and CAPTCHAs can be identified with a high degree of accuracy. The RCN was able to crack the BotDetect system with 57 percent accuracy, and with far less training than conventional “deep learning” algorithms, which rely more on brute force and require tens of thousands of images before they can understand CAPTCHAs with any degree of accuracy.
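To make the idea of a scored feature “tree” concrete, here is a minimal toy sketch in Python. It is not the RCN algorithm and is not taken from the Vicarious paper: the `FeatureNode` class, the feature names, the averaging rule, and the evidence values are all hypothetical, chosen only to show how grouped features such as contour, interior, and background could roll up into a single score for a letter.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class FeatureNode:
    """One node in a toy feature hierarchy (hypothetical, not the RCN's structure)."""

    name: str
    children: list[FeatureNode] = field(default_factory=list)

    def score(self, evidence: dict[str, float]) -> float:
        # Leaf: look up how strongly this feature was detected (0.0 if absent).
        if not self.children:
            return evidence.get(self.name, 0.0)
        # Internal node: average the scores of its grouped sub-features.
        # Averaging is an assumption made for illustration only.
        total = sum(child.score(evidence) for child in self.children)
        return total / len(self.children)


# A made-up hierarchy for the letter "A".
letter_a = FeatureNode("A", [
    FeatureNode("contour", [
        FeatureNode("left_stroke"),
        FeatureNode("right_stroke"),
        FeatureNode("crossbar"),
    ]),
    FeatureNode("interior"),
    FeatureNode("background"),
])

# Invented detection strengths for a partly obscured "A" (crossbar hidden).
evidence = {
    "left_stroke": 1.0,
    "right_stroke": 1.0,
    "crossbar": 0.0,
    "interior": 0.5,
    "background": 1.0,
}

letter_score = letter_a.score(evidence)
print(f"score for 'A': {letter_score:.3f}")
```

Even with the crossbar missing, the letter still earns a fairly high score in this toy setup because the other grouped features match, which loosely mirrors how a partly obscured character can remain recognizable.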

Solving CAPTCHAs is not the goal of the research, but it provides insight into how our brains work and how computers can replicate that process, NYU’s Brenden Lake told Axios. “It’s an application that not everybody needs,” he said. “Whereas object recognition is something that our minds do every second of every day.”

“Biology has put a scaffolding in our brain that is suitable for working with this world. It makes the brain learn quickly,” George said. “So we copy those insights from nature and put it in our model. Similar things can be done in neural networks.”