Every year, Apple touts the iPhone as having an incredible camera system, and, yes, the hardware is certainly impressive. The iPhone 14 Pro has the latest camera upgrades Apple offers, including a huge jump to a 48MP main camera with pixel-binning technology (four sub-pixels combine to make up one larger pixel), a telephoto lens with 3x optical zoom, faster night mode, and more. Again, on the hardware front, the iPhone 14 Pro camera looks impressive. And it is!

But what good is great camera hardware when the software continues to ruin the images you take? Ever since the iPhone 13 lineup, it seems that any images taken on an iPhone, unless they’re shot in ProRAW format, just look bad compared to those taken on older iPhones and the best competing Android phones. That’s because Apple has turned the dial way up on the computational photography and post-processing applied each time you capture a photo. It’s ruining my images, and Apple needs to take a chill pill and take it down a notch.

These ‘smart’ features aren’t as smart as they claim

To take photos, we need sensors that help capture light and detail to create those images. However, since smartphones are much more compact than a full DSLR, the sensors are pretty limited in what they can do. That’s why Apple, as well as other smartphone manufacturers, may rely on software to improve the image quality of pictures you capture on a phone.

Apple introduced Smart HDR on the iPhone in 2018, and this software feature is now in its fourth iteration with the iPhone 14 lineup. With Smart HDR 4, the device will snap multiple photos with different settings and then combine the “best” elements of each image into a single photo. There’s a lot of computational photography going on with this process, and though the intention is to make the images look good, it actually does the opposite.
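To make the idea of merging several exposures concrete, here is a minimal sketch of a generic exposure-fusion step. This is not Apple's Smart HDR pipeline, which isn't public. The function names, the `sigma` value, and the tiny four-pixel "frames" are all assumptions made up for illustration. Each pixel is weighted by how close it sits to mid-gray, so blown-out highlights and crushed shadows contribute less to the final blend.

```python
import math


def well_exposedness(value, sigma=0.2):
    """Weight a pixel (0.0 to 1.0) by how close it is to mid-gray (0.5).

    sigma is an illustrative choice, not a value taken from any real camera.
    """
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))


def fuse_exposures(frames):
    """Blend same-sized frames pixel by pixel using the weights above."""
    fused = []
    for pixels in zip(*frames):
        weights = [well_exposedness(p) for p in pixels]
        total = sum(weights)  # always positive, since exp() is never zero
        fused.append(sum(w * p for w, p in zip(weights, pixels)) / total)
    return fused


# Three hypothetical captures of the same four-pixel scene (made-up values).
under = [0.02, 0.10, 0.30, 0.45]   # dark frame: highlights keep their detail
normal = [0.10, 0.40, 0.70, 0.95]  # standard exposure
over = [0.45, 0.80, 0.98, 1.00]    # bright frame: shadows keep their detail

print([round(v, 2) for v in fuse_exposures([under, normal, over])])
```

Notice that every fused pixel gets pulled toward the middle of the brightness range. Push that kind of weighting too hard and you can see how shadows end up lifted and highlights flattened, which is roughly the "too bright" look described in this article.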

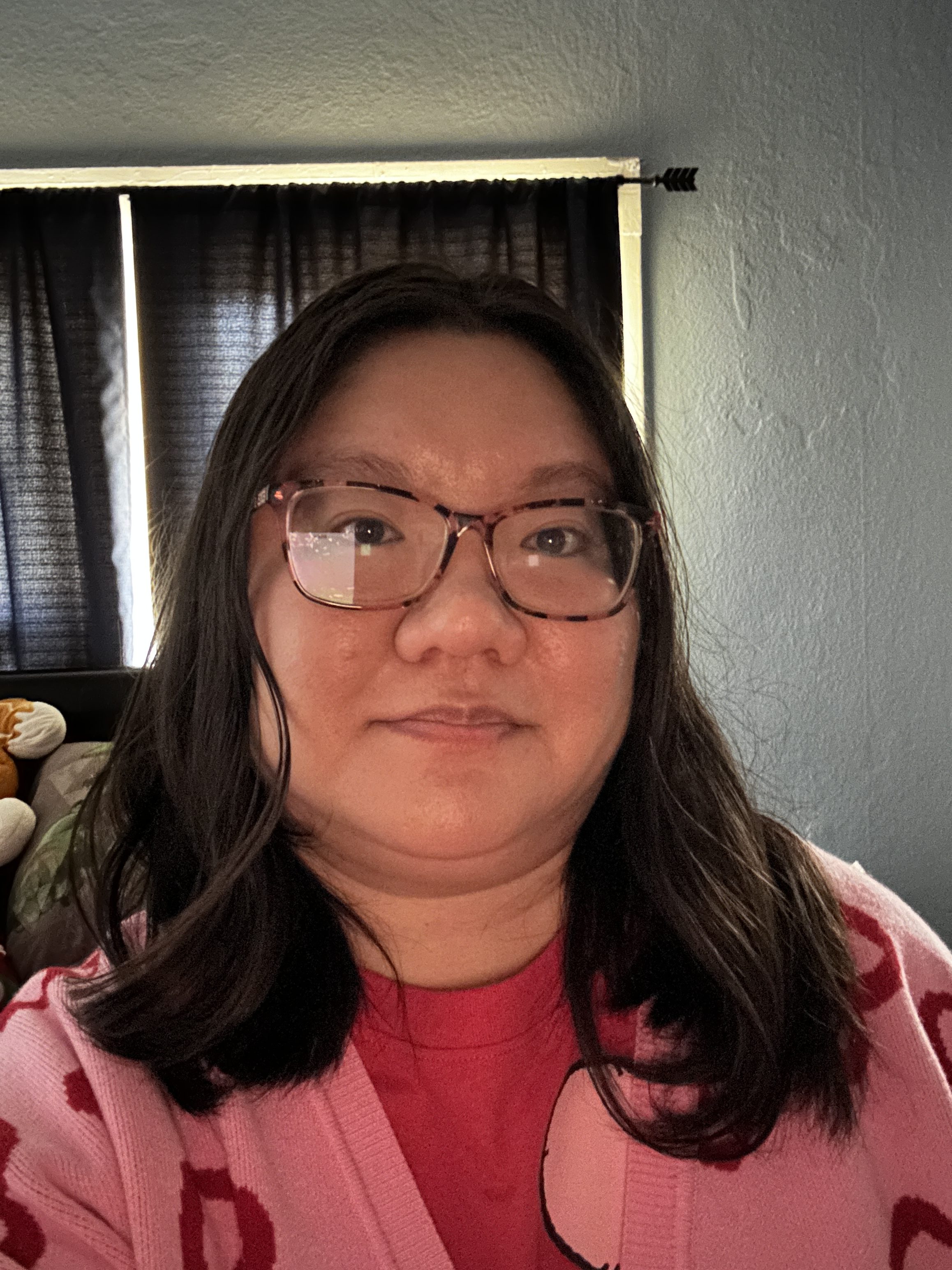

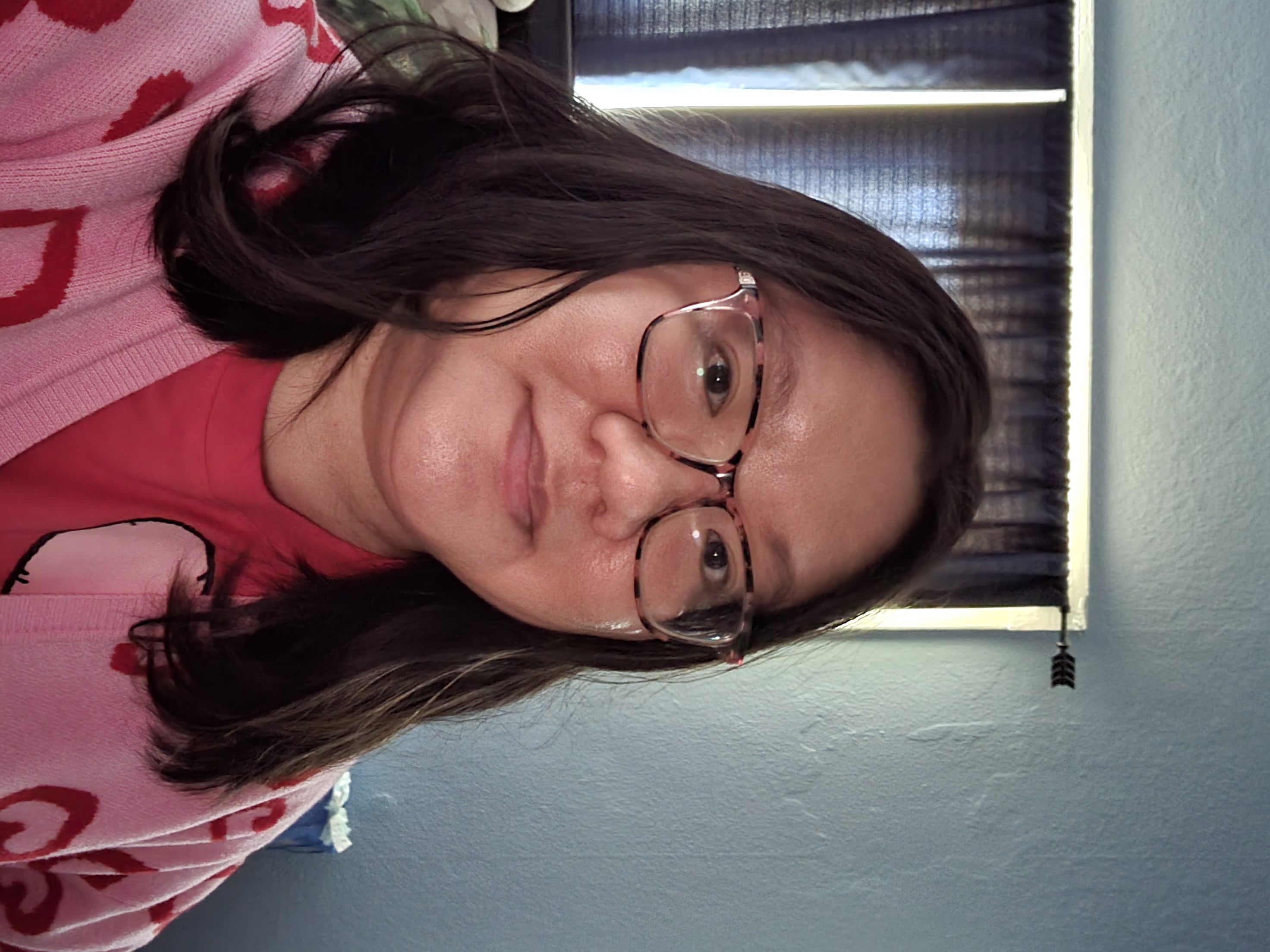

You may have noticed this when you snap a photo on your iPhone 14 (or iPhone 13) and immediately tap the thumbnail in the corner to view the images you just took. The image will look like what you captured for a quick second, but then it looks overly sharpened — with colors appearing more artificial, maybe washed out in certain areas and overly saturated in others. Even skin tone may look a little different than what you see in real life. While I loved night mode when it first debuted on the iPhone 11 series, compared to the competition now, night mode makes photos look too “bright” from overprocessing, and a lot of night photos don’t even look like they’re taken at night.

And have you ever tried to take a selfie in lowlight conditions? Sure, the iPhone has night mode for the front-facing camera too, but I haven’t found it to be of much help. Lowlight selfies look horrendous no matter what you do because iOS adds a lot of digital artifacts to the image to try and “save” it. But instead of saving the photo, it ends up looking like a bad and messy watercolor painting. In fact, I try to not take selfies on my iPhone 14 Pro when I’m in a dim environment because they almost always end up looking bad.

I’m not sure when photos taken with an iPhone began to look this way, but I definitely feel like it became more prominent starting with the iPhone 13 series. I could be wrong, but I don’t particularly remember my iPhone 12 Pro images looking this overexaggerated, and especially not my iPhone 11 Pro photos. The iPhone used to take pictures that looked realistic and natural, but all this Smart HDR and computational photography postprocessing has gotten way out of hand, making images look worse than they should be.

Apple, let us turn this stuff off

A few years ago, it was possible to not use Smart HDR at all. It was an optional setting that you could toggle on or off as you saw fit, though only on the iPhone XS, iPhone XR, iPhone 11 series, iPhone SE 2, and iPhone 12 models. On the iPhone 13 and later, the option to turn Smart HDR off has been removed.

Taking away this option was a shortsighted move, and I hope Apple brings it back at some point. Despite my iPhone 14 Pro being my personal and primary device, especially for photography, sometimes I need to edit an image before I consider it suitable for sharing with others. I get what Apple is trying to do with Smart HDR, but the resulting image usually just looks way too artificial compared to what you see in reality.

For example, when I take pictures outside in sunny conditions, the photo may have an overly saturated blue sky, making it look fake. I also think that, sometimes, the color altering makes my skin tone look a tad off — something that YouTuber Marques Brownlee (MKBHD) even talks about in a recent video.

Android competition does it better

Again, Apple has put some great camera hardware in the iPhone 14 Pro lineup, but it’s tainted by the overprocessing of the software. As I’ve picked up a few different Android devices in my time here at Digital Trends, I’ve come to enjoy how some competing Android phones handle photography over the iPhone.

For example, the Google Pixel 7 takes great photos with the main camera with no extra effort required. Just point and shoot, and the results are accurate to what your eyes see, if not better. Google still uses a lot of its own image processing, but it doesn’t make photos look unnatural the way Apple does. The only problem with it, from what I’ve noticed, is selfies, where it doesn’t quite get the skin tone right. Otherwise, the main camera is great. Colors are balanced, not washed out, and the software doesn’t try too hard to make a photo “look good,” because it already does. And with fun tools like Magic Eraser, I prefer to use my Google Pixel 7 for editing shots.

I also tested out the older Samsung Galaxy S21 for a few of these photo comparisons, and while I don’t think it handles skin tone and color that well, it does well with lowlight selfies, which surprised me. It also handled night mode pictures quite well, making them appear natural and realistic instead of blown out with the colors off, like those from the iPhone 14 Pro.

So while Apple can say it has the “best” camera hardware, iOS and its overreliance on computational photography are ruining how images look. Sure, some people may like this aesthetic, but I personally can’t stand how unnatural a lot of my pictures end up looking. Apple needs to tone down the Smart HDR stuff, or at least bring back the option to turn it off if we please. Because, right now, things aren’t looking great.
