Over the past few weeks, Twitter has been stepping up its efforts to fight misinformation and prevent it from spreading on the platform. This initiative made headlines when Twitter decided to fact-check a tweet from the President of the United States — a move that sparked both praise and outrage, and ultimately led to an executive order from the White House.

But while Twitter has earned plaudits for calling out Trump’s verifiably false statement about mail-in ballots and voter fraud, there is no dancing around the fact that Twitter’s misinformation problem runs much deeper than a few high-profile tweets. The most prolific and problematic peddler of misinformation on the platform is the massive army of bots that pose as average users.

Lots and lots of bots

There is no definitive number for the total quantity of automated bot accounts active on Twitter. Some studies have pegged the share at around 15% of all accounts. With more than 330 million users, that equates to a whole lot of non-human actors: roughly 49.5 million. That’s about the combined population of California and Michigan.
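For readers who want to check the math, the estimate is a single multiplication. The short Python sketch below uses only the two approximate figures quoted above, so the result is a ballpark figure rather than a measured count.

```python
# Figures quoted in the article; both are approximations, not exact counts.
TOTAL_USERS = 330_000_000   # "more than 330 million users"
BOT_SHARE_PERCENT = 15      # "around 15%"

# Integer arithmetic avoids floating-point rounding noise.
estimated_bots = TOTAL_USERS * BOT_SHARE_PERCENT // 100

print(f"{estimated_bots:,} estimated bot accounts")
```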

Bots are software applications of varying complexity that are programmed to perform certain tasks, whether legitimate or malicious. Not all of them are bad. Wikipedia, for example, employs bots on its platform to clean up pages, identify vandals and malicious page edits, make suggestions, and greet newcomers. When people talk about bots in the context of Twitter, however, they are not referring to helpful bots. They mean bots that pose as ordinary users, often (although not always) sporting the generic egg-shaped avatar Twitter assigns to users as a default, and that are known for helping to spread fake news and peddle conspiracy theories.

Twitter bots have been blamed for everything from the outcome of the 2016 Presidential election to Brexit in the U.K. In those two cases, bots heavily favored the Trump and “Leave” sides, respectively. According to Jamie Susskind in the book Future Politics, pro-Trump bots, using hashtags such as #LockHerUp, outgunned the Clinton campaign’s own bots by a ratio of 5:1. Meanwhile, one-third of Twitter traffic prior to the European Union Brexit referendum was generated by bots.

Recent research from Carnegie Mellon University found more than half of the accounts tweeting about reopening America after the COVID-19 economic shutdown were bots, though some have questioned the accuracy of the report.

Twitter has said CMU didn’t request access to its data and that the research appeared to be based on publicly viewable accounts. Twitter added that much of its bot removal effort happens before the accounts are ever seen by the public.

“We always support and encourage researchers to study the public conversation on Twitter – their work is an important part of helping the world understand what’s happening,” according to a Twitter spokesperson. “But research reports that only analyze a limited quantity of public data and are not peer-reviewed are misleading and inaccurate.”

Misinformation and disinformation

“Misinformation and disinformation is a significant problem on social media platforms,” Christopher Bouzy, creator of Bot Sentinel, told Digital Trends. “Inauthentic accounts are sharing and amplifying this stuff 24/7, and millions of legitimate users are exposed to this stuff. First, nefarious individuals usually buy old accounts on the black market. These accounts are mostly abandoned hacked accounts, or accounts created years ago for some other purpose and are no longer needed. Then these accounts are made to look like something they are not. It can be a supporter of a candidate, a BLM activist, or any other countless personas.”

Bouzy refers to these as the “top-tier inauthentic accounts.” These top-tier accounts can then have their signal boosted through the use of other, newly created accounts which follow them to give the impression that these accounts are popular.

“Other legitimate users follow the top-tier inauthentic accounts because they feel these accounts are several years old and they have a significant number of followers,” Bouzy said. “Then, after weeks or months of gaining the trust of their followers, they begin tweeting misinformation and disinformation intended to be shared quickly without questioning the authenticity of the content. One inauthentic account can expose thousands of legitimate users to disinformation — and it doesn’t take a lot of resources to accomplish this.”

Bot Sentinel uses machine learning to categorize and weed out automated accounts that tweet and retweet certain content. It was trained to classify Twitter accounts by feeding thousands of accounts and millions of tweets into a machine learning model. Its creators claim it can correctly classify accounts tweeting primarily in English with an accuracy level of 95%.
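The article does not describe Bot Sentinel’s model, features, or training data, so the following is only a toy illustration of the general idea of supervised classification: learn from labeled accounts, then label a new one. It swaps in a simple nearest-centroid classifier, and the two features, the 500-tweets-per-day cap, and every number in the training set are invented assumptions.

```python
from statistics import mean

# Hypothetical features per account: (tweets_per_day, retweet_ratio).
# All values are invented for illustration; this is not Bot Sentinel data.
TRAINING = [
    ((250.0, 0.95), "inauthentic"),
    ((180.0, 0.90), "inauthentic"),
    ((300.0, 0.85), "inauthentic"),
    ((8.0, 0.30), "authentic"),
    ((15.0, 0.45), "authentic"),
    ((3.0, 0.10), "authentic"),
]

MAX_TWEETS_PER_DAY = 500.0  # assumed cap used to scale the first feature


def scale(features):
    """Put both features on a comparable 0-1 scale."""
    tweets_per_day, retweet_ratio = features
    return (min(tweets_per_day, MAX_TWEETS_PER_DAY) / MAX_TWEETS_PER_DAY,
            retweet_ratio)


def fit_centroids(examples):
    """Training step: average the scaled feature vectors of each label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(scale(features))
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }


def classify(centroids, features):
    """Return the label whose centroid is nearest (squared Euclidean distance)."""
    point = scale(features)

    def distance(label):
        return sum((a - b) ** 2 for a, b in zip(point, centroids[label]))

    return min(centroids, key=distance)


CENTROIDS = fit_centroids(TRAINING)
print(classify(CENTROIDS, (200.0, 0.92)))  # a high-volume, retweet-heavy account
print(classify(CENTROIDS, (5.0, 0.20)))    # a low-volume account
```

A production system would presumably rely on far richer signals and far more data. The sketch only shows the train-then-predict shape of the approach.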

Cracking down on bots

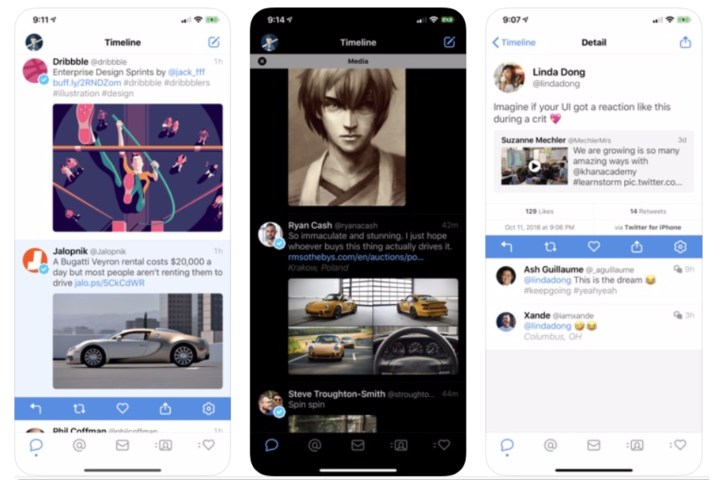

To be fair to social media companies like Twitter, they have made sustained efforts to crack down on bots throughout their history. Even though these companies’ livelihood depends on their ability to grow a user base exponentially, there has been a serious attempt to deal with the bot dilemma, even when this means deleting millions of user accounts. In 2018, for instance, Twitter began suspending suspected bot accounts at a rate of more than 1 million per day.

That year alone it reportedly eliminated more than 70 million fake accounts. More recently, it has explored a new checkmark-style system to make it more apparent which users are bots and which are real people. Last month, Twitter updated its Developer API policy to note that developers must clearly indicate in their user bio or profile “if they are operating a bot account, what the account is, and who the person behind it is, so it’s easier for everyone on Twitter to know what’s a bot — and what’s not.” It also added a new page, designed to help users sort bots from non-bots.

Bot or not?

Just because an account doesn’t appear as advanced at using Twitter or disagrees with you, doesn’t mean the account is a bot. But we hear this a lot, so let’s break it down. https://t.co/mOkqJXs3eF

— Twitter Comms (@TwitterComms) May 22, 2020

But still, as Bouzy points out, bots persist on the platform. “For example, anyone can search Twitter for #WWG1WGA and #QANON, and they can see the countless inauthentic accounts spreading misinformation and disinformation regarding those hashtags,” he said. “And yet the accounts and content remain on the platform until someone reports it. If we can identify inauthentic accounts with public information, then Twitter can also identify these accounts.”

Provided that bots can be distinguished from human users, either by electively declaring themselves to be bots or through a Blade Runner-style investigative system to unearth robots in hiding, rules could be put in place to stop them from interfering.

I’m just a bill (to kill bots)

“One measure would be to prohibit bots taking part in certain forms of political speech,” Susskind told Digital Trends. “The Bot Disclosure and Accountability Bill offers a step in this direction in that it would prohibit candidates and political parties from using any bots intended to impersonate or replicate human activity for public communication. It would also prevent PACs, corporations and labor organizations from using bots for ‘electioneering communications.’”

Susskind said that it is also important that we think about more “dynamic” rules that could govern how bots operate on internet platforms. “Perhaps … bots should make up only a certain proportion of online contributions per day, or [be limited to] a number of responses to a particular person,” he continued. “Bots spreading what appears to be misinformation could be required by other bots to provide backup for their claims, and removed if they can’t. We need to be imaginative.”
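To make the first two of those “dynamic” rules concrete, here is a minimal Python sketch of how a platform might enforce them. It is an illustration only: the class name, the 10% share cap, and the three-reply limit are hypothetical choices, not figures proposed by Susskind or used by any platform.

```python
from collections import Counter

# Assumed thresholds, chosen only for illustration.
MAX_BOT_SHARE_PERCENT = 10    # bots may author at most 10% of a day's posts
MAX_REPLIES_PER_TARGET = 3    # one bot may reply to one person 3 times a day


class DailyBotPolicy:
    """Tracks one day's posts and decides whether a bot post is allowed."""

    def __init__(self):
        self.total_posts = 0
        self.bot_posts = 0
        self.replies = Counter()  # (bot_id, target_id) -> replies so far today

    def record_human_post(self):
        self.total_posts += 1

    def allow_bot_post(self, bot_id, reply_to=None):
        # Rule 1: counting this post, bots must stay within the share cap.
        # Integer math keeps the comparison exact.
        if (self.bot_posts + 1) * 100 > MAX_BOT_SHARE_PERCENT * (self.total_posts + 1):
            return False
        # Rule 2: limit how often one bot can respond to the same person.
        if reply_to is not None and self.replies[(bot_id, reply_to)] >= MAX_REPLIES_PER_TARGET:
            return False
        # The post is allowed, so update the day's counters.
        self.total_posts += 1
        self.bot_posts += 1
        if reply_to is not None:
            self.replies[(bot_id, reply_to)] += 1
        return True


policy = DailyBotPolicy()
for _ in range(9):
    policy.record_human_post()
print(policy.allow_bot_post("bot_a"))  # allowed: 1 bot post out of 10
print(policy.allow_bot_post("bot_a"))  # refused: 2 out of 11 exceeds 10%
```

The harder part, as the rest of the article makes clear, is knowing which accounts are bots in the first place. The sketch assumes that question has already been answered.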

For now, though, this remains a complex issue — regardless of whether we assume that regulations to control bots should come from within companies or be imposed by external forces. Lili Levi, a professor of law at the University of Miami School of Law, has previously written on the topic of bots and fake news. Levi raised just a few of the potential issues that will be faced by attempts to control the prevalence of bots on social media platforms.

“It strikes me that trying to answer that question requires thinking about a variety of priors,” Levi told Digital Trends. “Should the solutions be voluntary or mandatory? If mandatory, there are always legal questions — [such as] constitutionality of regulations, depending on their character. If voluntary, what arguments would lead the social media platforms to solutions? Would Facebook adopting some solutions necessarily lead the others to go along? Should the solutions be technical or disclosure-based? What are the kinds of technical solutions available to the platforms? Are they the same across social media platforms?”

One piece of the social media problem

Ultimately, the bot problem is just one small part of the issue faced by social networks. As Yuval Noah Harari writes in 21 Lessons for the 21st Century, humans have always been something of a post-truth species. That is to say that, in Harari’s words, “We are the only mammals that can cooperate with numerous strangers because only we can invent fictional stories, spread them around, and convince millions of others to believe them.”

Believing the same fictions is an essential part of human cooperation, ensuring that, for instance, the majority of us obey the same laws or societal norms. However, it also explains the near-limitless number of historical examples in which large numbers of people were whipped up to commit terrible acts based entirely on untruths, and with nary a hashtag in sight.

What has changed in the age of social media is the speed with which these fictions can travel, along with the shorter period our overworked brains have to parse fact from fake. Even if fact-checking bots were implemented as Susskind suggests, they would need to work quickly enough that fake news could be spotted and removed before it became a problem. In a nuanced world where alternative facts are not always the same as outright fake news, that could pose a major problem.

“In our experience, bots are certainly one of the main means for activating the spread of fake news,” Francesco Marcelloni, professor of data processing systems and business intelligence at the University of Pisa, who last year published a paper titled “A Survey on Fake News and Rumour Detection Techniques” with Ph.D. student Alessandro Bondielli, told Digital Trends. “In the end, however, it is always people who make fake news viral. Thus, limiting the action of bots can slow the spread — but it might not prevent fake news from [spreading widely online].”

Free speech advocate Alexander Meiklejohn once famously observed that, “What is essential is not that everyone shall speak, but that everything worth saying shall be said.” Social media platforms are, by design, a different kind of free speech: one where everyone gets a voice to speak as loudly as possible, even when this means drowning out opposing voices. This is, at the end of the day, why bots are viewed as so problematic. It is not simply that they are espousing views that may be opposed to our own; it is the scale on which they operate as tools for disseminating potentially false material.

Until these problems are addressed, misinformation will remain a huge problem on social media platforms like Twitter. Regardless of how many presidential tweets get flagged by fact-checkers.
