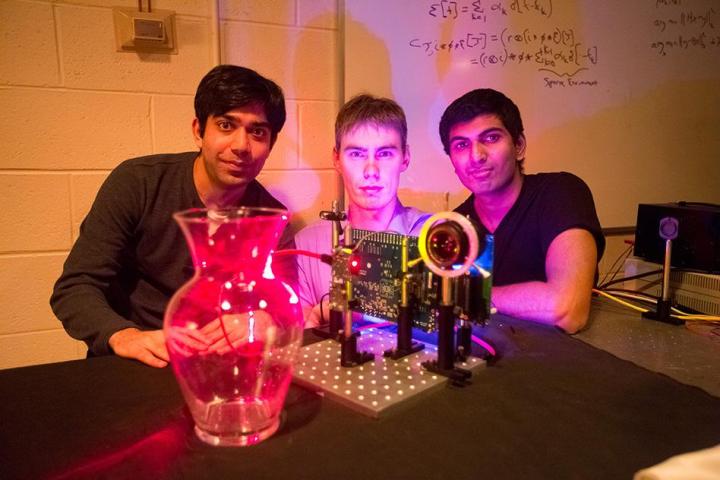

Researchers at the Massachusetts Institute of Technology have developed a low-cost 3D “nano-camera” that can operate at the speed of light, improving on the technology used in cameras like the one in the second-generation Xbox Kinect. The $500 camera, which was demonstrated last week at the Siggraph Asia conference in Hong Kong, could be used in medical imaging and vehicle collision-avoidance detection, and could improve the accuracy of motion-tracking and gesture-recognition devices in gaming. The development team dubbed the technology “nanophotography.”

The nano-camera is based on a technology called “Time of Flight,” which determines the location of an object by calculating how long it takes a light signal to reflect off the object and return to the sensor. Because the speed of light is known, that round-trip time gives the distance. The problem with the current technology is that it doesn’t function well with translucent objects, rain, or fog, which makes it difficult to get an accurate measurement of distance.
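
As a rough illustration of the Time of Flight arithmetic (a minimal sketch, not code from the MIT project), the distance is the speed of light multiplied by the round-trip time, divided by two because the light travels out and back. The function name and the 10-nanosecond example value are assumptions made for this sketch.

```python
# Speed of light in a vacuum, in metres per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def distance_from_round_trip(round_trip_seconds):
    """Convert a round-trip light travel time into a one-way distance in metres."""
    # Halve the product: the light covers the distance twice (out and back).
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


# Hypothetical example: a return that arrives 10 nanoseconds after emission.
print(distance_from_round_trip(10e-9))
```

A 10-nanosecond round trip works out to roughly 1.5 meters, which is why a camera like this has to resolve time on the scale of nanoseconds.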

“Using the current state of the art, such as the new Kinect, you cannot capture translucent objects in 3-D,” says Achuta Kadambi, a coauthor of the project. “That is because the light that bounces off the transparent object and the background smear into one pixel on the camera. Using our technique you can generate 3-D models of translucent or near-transparent objects.”

The MIT camera, on the other hand, can’t be fooled by those obstacles. To achieve its accuracy, the nano-camera uses an encoding technique for measuring signals that’s common in the telecommunications industry, operating on a scale of nanoseconds. It also uses an approach similar to techniques for removing blur from photos: the camera “unsmears” the individual optical paths to create a sharper image. Because it uses typical off-the-shelf parts, the MIT team was able to make the camera for $500.

“We use a new method that allows us to encode information in time,” says Ramesh Raskar, associate professor of media arts and sciences, and the head of the Camera Culture group at the MIT Media Lab that developed the system. “So when the data comes back, we can do calculations that are very common in the telecommunications world, to estimate different distances from the single signal.”
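
To give a feel for how a time-coded signal can yield more than one distance from a single pixel, here is a toy sketch. It is not the MIT team's algorithm: it swaps in a generic correlation trick from telecommunications, and the code length, the one-sample-per-nanosecond rate, the two delays, and the signal strengths are all assumptions chosen for illustration. Two reflections (say, a translucent surface and the background behind it) are summed into one signal, and correlating that signal against the known code pulls the two delays back apart.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458
SAMPLE_PERIOD_S = 1e-9  # hypothetical: one code sample per nanosecond


def m_sequence(length=31):
    """Return a +1/-1 maximal-length sequence from a 5-bit shift register."""
    bits = [1, 0, 0, 0, 0]
    while len(bits) < length:
        # Recurrence for the polynomial x^5 + x^2 + 1 (period 31).
        bits.append(bits[-3] ^ bits[-5])
    return [1 if b else -1 for b in bits[:length]]


def delayed(code, delay):
    """Circularly shift the code to model a return that arrives `delay` samples late."""
    n = len(code)
    return [code[(i - delay) % n] for i in range(n)]


def mixed_pixel(code, returns):
    """Sum several delayed, attenuated copies of the code into one signal."""
    signal = [0.0] * len(code)
    for delay, strength in returns:
        for i, value in enumerate(delayed(code, delay)):
            signal[i] += strength * value
    return signal


def recover_delays(signal, code, count):
    """Correlate the signal with the code at every lag and keep the strongest lags."""
    n = len(code)
    scores = [
        sum(signal[i] * code[(i - lag) % n] for i in range(n))
        for lag in range(n)
    ]
    best = sorted(range(n), key=lambda lag: scores[lag], reverse=True)[:count]
    return sorted(best)


code = m_sequence()
# Hypothetical scene: a faint return after 4 samples, a stronger one after 9.
pixel = mixed_pixel(code, [(4, 0.6), (9, 1.0)])
for lag in recover_delays(pixel, code, count=2):
    distance_m = SPEED_OF_LIGHT_M_PER_S * lag * SAMPLE_PERIOD_S / 2
    print(f"return after {lag} ns, about {distance_m:.2f} m away")
```

The sketch works because this kind of code correlates strongly with itself only when the copies line up exactly, so each reflection shows up as its own peak even though both were "smeared" into the same pixel.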

(Via Phys.org)