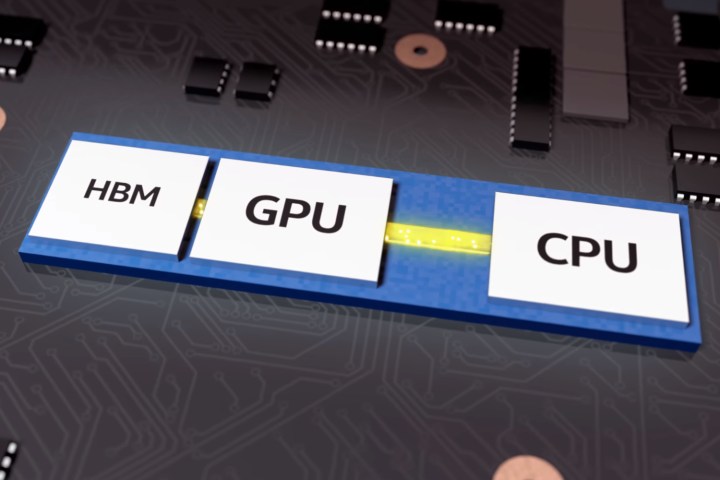

Both the photograph and Intel’s official render show the processor cores housed in one chip, and the Radeon graphics cores in another chip parked next to the HBM2 video memory. All three are mounted on a small enclosed circuit board that also contains a dedicated “highway” to quickly pass data between the three components. The module itself is mounted on a small motherboard akin to Intel’s Next Unit of Computing (NUC) all-in-one, small-form-factor PCs.

Speculation stemming from the now-removed photograph suggests the graphics component may be AMD’s Polaris 20 graphics chip, used on the Radeon RX 580 graphics card, as both appear similar in size. Meanwhile, the height of the HBM2 memory stack suggests a maximum capacity of 4GB, backing up previous benchmark leaks showing two modules sporting 4GB of HBM2 memory each.

But leaked benchmarks also reveal that the Radeon graphics component reports 24 compute units, which translates into 1,536 stream processors. The Radeon RX 580 consists of 36 compute units (2,304 stream processors), the Radeon RX 570 has 32 compute units (2,048 stream processors), and the RX 560 consists of 16 or 14 compute units, depending on the card. Because 24 compute units matches none of these cards, what we’re seeing is likely the custom Radeon graphics component confirmed in Intel’s official announcement.
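The compute unit figures above can be checked with a quick calculation. The sketch below assumes 64 stream processors per compute unit, a ratio implied by the numbers quoted here (1,536 divided by 24) rather than stated in the leak itself; the function and dictionary names are purely illustrative.

```python
# Assumption: 64 stream processors per compute unit, the ratio implied by
# the quoted figures (1,536 / 24 = 64). Names here are illustrative only.
STREAM_PROCESSORS_PER_CU = 64


def stream_processors(compute_units: int) -> int:
    """Convert a compute unit count into a stream processor count."""
    return compute_units * STREAM_PROCESSORS_PER_CU


# Compute unit counts as reported in the article.
parts = {
    "Intel module (leaked benchmark)": 24,
    "Radeon RX 580": 36,
    "Radeon RX 570": 32,
    "Radeon RX 560 (16 CU variant)": 16,
    "Radeon RX 560 (14 CU variant)": 14,
}

for name, cus in parts.items():
    print(f"{name}: {cus} compute units, {stream_processors(cus):,} stream processors")
```

By this count, the leaked part sits below the RX 570 and above either RX 560 variant, which is consistent with it being a custom design rather than an existing desktop chip.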

That said, the module’s Radeon component should hit a performance level residing between the RX 570 and the RX 560, or in the case of Nvidia, between the GeForce GTX 1050 Ti and the GTX 1060. Adding to that, the Core i7-8809G module will supposedly have a base graphics speed of 1,190MHz and a memory bus clocked at 800MHz. Meanwhile, the slower Core i7-8705G model will supposedly have a 1,011MHz base graphics speed and a memory bus clocked at 700MHz.

Outside the pictured module, the motherboard seen in the photograph appears to be similar in size to Intel’s larger NUC kits. Two memory slots reside next to Intel’s upcoming module along with an M.2 slot supporting SSDs measuring 22mm x 80mm. You can also see two SATA controllers, and one side packed full of ports. Two of these are likely “stacked” USB ports, and one possibly serves as an HDMI output.

The photograph emerges after Intel revealed that the former head of AMD’s Radeon graphics division, Raja Koduri, is now spearheading a new department at Intel dedicated to high-end graphics. The announcement arrived just after Intel revealed its collaboration with AMD to produce the modules, and Koduri’s move from AMD to Intel is likely part of that collaboration. Intel’s new “Core and Visual Computing Group” will place the company as a third player in the high-end graphics market, competing with AMD and Nvidia.

Intel’s new modules are slated to be available to PC makers in the first quarter of 2018.
