ReSpec is a bit different this week. I’ve spent the week in sunny San Francisco at the Game Developers Conference (GDC), running from meeting to meeting and trying to find a moment of time to write a few words.

Instead of a normal column, we decided to post a sampling of entries from the newly launched ReSpec newsletter covering what I saw at GDC this week. If you want this same newsletter delivered to your inbox each week, sign up now and get in on the exclusive content.

Path tracing is a lie

Maybe “lie” is too strong a word, but path tracing is tricky to implement in games. I sat in on GDC sessions covering path tracing in both Cyberpunk 2077 and Alan Wake 2, and both described a common technique for making path tracing run in real time at a playable frame rate: ReSTIR Direct Illumination.

First, how path tracing works: We take a pixel, and we trace a line from it away from the camera. It collides with something and bounces. And it keeps going, bouncing around the scene until it either goes off into the ether or ends at a light source. Developers want those paths that end at a light source, particularly for calculating shadows.

The problem in any real-time context is that this process is extremely expensive. Calculating all of those rays and all of those bounces, even though only a small number of them will actually be used, hogs a ton of resources. That’s why path tracing has been an offline technique for so long: you have to calculate many possible paths and average them.
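The cost described above can be illustrated with a toy simulation. This is a deliberately simplified sketch, not a renderer: there is no geometry, and the probabilities `p_light` and `p_escape` (and every function name) are made-up assumptions that stand in for a real scene. It only shows why most traced paths end up wasted.

```python
import random


def trace_path(rng, p_light=0.05, p_escape=0.25, max_bounces=8):
    """Follow one path and report whether it ended at a light source."""
    for _ in range(max_bounces):
        roll = rng.random()
        if roll < p_light:
            return True   # the path reached a light: this one is useful
        if roll < p_light + p_escape:
            return False  # the path left the scene: wasted work
        # otherwise the ray bounces and the loop continues
    return False          # bounce budget exhausted: also wasted


def fraction_reaching_light(num_paths, seed=0):
    """Average many random paths, as an offline path tracer would."""
    rng = random.Random(seed)
    hits = sum(trace_path(rng) for _ in range(num_paths))
    return hits / num_paths


if __name__ == "__main__":
    print(f"{fraction_reaching_light(20_000):.1%} of paths reached a light")
```

With these assumed probabilities, only a small minority of paths ever reach a light, yet every bounce of every path still has to be computed.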

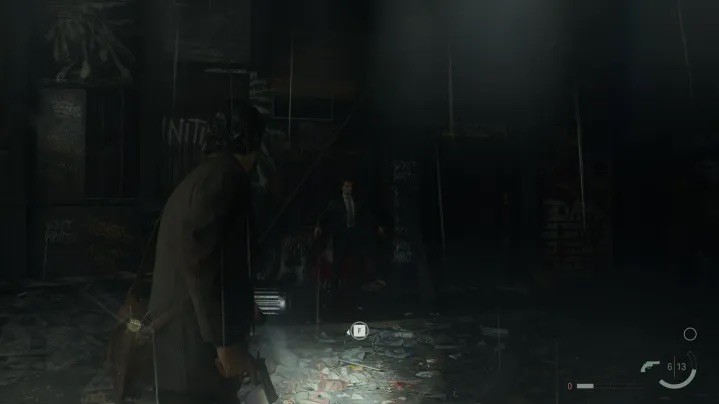

That’s not the case for Alan Wake 2 and Cyberpunk 2077. For direct lights, ReSTIR works by weighting the light sources in a scene and only sampling a selection of them. Then, these samples are shared temporally (across frames) and spatially (with nearby pixels). In the case of a game like Alan Wake 2, certain lights are weighted more heavily, such as the blue and red “cinematic” lights you see in a train station.
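The weighting and sharing described above is commonly built on weighted reservoir sampling. Here is a minimal sketch of that idea, not the code either studio uses: the light names, the weights, and the `num_candidates` value are hypothetical, and the merge step assumes both pixels score lights the same way, which a real implementation does not.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Reservoir:
    """Holds one chosen light plus the running totals needed to merge later."""
    sample: Optional[str] = None
    weight_sum: float = 0.0
    count: int = 0

    def update(self, candidate, weight, rng, count=1):
        # Keep the new candidate with probability weight / weight_sum.
        self.weight_sum += weight
        self.count += count
        if weight > 0 and rng.random() * self.weight_sum < weight:
            self.sample = candidate


def sample_light(lights, rng, num_candidates=8):
    """Look at only a few random lights and keep one, favoring heavy weights."""
    reservoir = Reservoir()
    for name, weight in rng.choices(list(lights.items()), k=num_candidates):
        reservoir.update(name, weight, rng)
    return reservoir


def merge(a, b, rng):
    """Reuse a neighboring pixel's (or the previous frame's) reservoir.

    Simplification: assumes both reservoirs scored lights with the same weights.
    """
    merged = Reservoir()
    for r in (a, b):
        if r.sample is not None:
            merged.update(r.sample, r.weight_sum, rng, count=r.count)
    return merged


# Hypothetical scene: two heavily weighted "cinematic" lights and two dim ones.
LIGHTS = {"red_cinematic": 8.0, "blue_cinematic": 8.0, "ceiling": 1.0, "sign": 1.0}
```

Merging is what makes the sharing cheap: a pixel that combines its reservoir with a neighbor's effectively benefits from twice as many candidates without sampling any new lights.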

The result is an image that comes together much faster, at least fast enough that you can play a game at a reasonable frame rate with a healthy dash of upscaling and frame generation.

It’s an interesting tidbit, and something that will hopefully become more common now that the developers behind titans like Alan Wake 2 and Cyberpunk 2077 are sharing their work.

Microsoft is putting its foot down on upscaling

At GDC, Microsoft finally talked more about DirectSR, and even managed to wrangle developers from AMD and Nvidia to sit on the same panel. Together, even! DirectSR isn’t a way to end the upscaling wars, as we originally thought, but it provides a unified framework for developers to add multiple upscaling features to their games.

A big part of that is input. When interfacing with DirectSR, there’s a standardized set of inputs that developers provide to the Application Programming Interface (API). It can then pass those inputs along to upscalers that are built-in, such as AMD’s FSR 2, or variants that require specific hardware, such as Nvidia’s DLSS.
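To make the "one set of inputs, many upscalers" idea concrete, here is a rough sketch of the pattern. None of these names come from DirectSR, which wasn't available to developers at the time of writing. `UpscaleInputs`, `UpscalerVariant`, and both functions are hypothetical, and the input fields are simply the ones temporal upscalers typically ask for.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class UpscaleInputs:
    """One standardized bundle the game fills in per frame (hypothetical fields)."""
    color_buffer: str
    depth_buffer: str
    motion_vectors: str
    render_size: Tuple[int, int]
    output_size: Tuple[int, int]


@dataclass(frozen=True)
class UpscalerVariant:
    name: str
    required_vendor: Optional[str] = None  # None means it runs on any GPU


def usable_variants(variants, gpu_vendor):
    """Filter to the variants this machine's GPU can actually run."""
    return [v for v in variants if v.required_vendor in (None, gpu_vendor)]


def run_upscaler(variant, inputs):
    """Stand-in for handing the same inputs to whichever variant was chosen."""
    return f"{variant.name}: {inputs.render_size} -> {inputs.output_size}"


VARIANTS = [
    UpscalerVariant("FSR 2"),
    UpscalerVariant("DLSS", required_vendor="nvidia"),
]
```

The point of the pattern is that the game only ever builds `UpscaleInputs` once; which upscaler consumes it becomes a selection problem rather than a separate integration.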

It’s not dissimilar to Nvidia’s own Streamline framework, which was built to accomplish something similar before AMD decided not to play ball. It seems like Microsoft, being the neutral third party in this battle, was the one that could bring everyone together.

I’m still not sure how this will actually look in games. DirectSR isn’t even available to developers yet. It’s possible nothing changes for end users, and we still see multiple upscaling options in graphics menus. Maybe Microsoft will update Windows to include a universal upscaling option depending on the hardware you have. It’s not clear, but DirectSR should still make it easier for developers to implement every flavor of upscaling in their games across DLSS, FSR, and Intel’s XeSS.

One upside that didn’t come through originally was how this system works with updates. Nvidia, AMD, and Intel constantly release new versions of their upscaling tech that make minor improvements to image quality or slightly tweak how the upscaling works. With DirectSR, developers won’t need to add all of these updates to their games — they’ll just work across the API.

This is all thumbs-up for me. Upscaling has been a major point of contention, especially for big releases like Starfield and Resident Evil 4 that only supported one upscaler at launch. The only downside is frame generation. That doesn’t appear to be in the cards for DirectSR right now, so there’s still going to be plenty of back and forth in the future for major graphics brands.

Death to your CPU? Not exactly

One of the most exciting announcements out of GDC this year was Work Graphs. I talked about this last week in the newsletter, but I got a closer look at Work Graphs during Microsoft’s DirectX State of the Union. The idea behind them is to reduce the strain on your CPU by letting your GPU direct its own work.

There’s a little more nuance to the conversation. This gives more power to your GPU to decide what to do, similar to when programmable shaders were first introduced to graphics cards. A Work Graph is composed of nodes, and those nodes can spawn further nodes for your GPU to work on rather than waiting for work from the CPU. Microsoft described it as a compute shader that can launch another compute shader.
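The node-spawns-node idea can be mimicked on the CPU with a small queue. This is a toy model only: it is not the DirectX Work Graphs API, and the `split` and `shade` nodes, the tile ranges, and the `run_graph` scheduler are all hypothetical. It just shows work producing more work after a single launch.

```python
from collections import deque

NODES = {}


def node(fn):
    """Register a function as a graph node under its own name."""
    NODES[fn.__name__] = fn
    return fn


@node
def split(tile):
    """Hypothetical node: break a range into halves until it is small enough."""
    lo, hi = tile
    if hi - lo <= 4:
        return [("shade", tile)]  # small enough: hand off to the shade node
    mid = (lo + hi) // 2
    return [("split", (lo, mid)), ("split", (mid, hi))]


@node
def shade(tile):
    """Hypothetical leaf node: does its work and spawns nothing further."""
    return []


def run_graph(entry, payload):
    """One launch from the 'CPU'; every later record comes from the nodes."""
    queue = deque([(entry, payload)])
    executed = []
    while queue:
        name, record = queue.popleft()
        executed.append((name, record))
        queue.extend(NODES[name](record))
    return executed


if __name__ == "__main__":
    for name, record in run_graph("split", (0, 16)):
        print(name, record)
```

Launching `split` on the range `(0, 16)` runs seven `split` nodes and four `shade` nodes, all from that one initial request.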

The obvious advantage, and one PC gamers picked up on quickly, was GPU utilization. Microsoft explained that the current system requires a global sync point between the GPU and CPU. That often means that the GPU, being a highly parallel device, is without work for brief amounts of time while waiting for a sync to happen.

What I didn’t expect was how it would impact memory. With current programming in DirectX 12, Microsoft explained you would use the ExecuteIndirect command, which requires you to hold several buffers. With Work Graphs, you don’t need to hold those buffers, as the GPU can start its own work and continue generating work for itself.

Robert Martin from AMD demonstrated how big a deal this is with a scene that required 3.3GB of memory. With Work Graphs, the memory usage was just 113MB, and it came with a small performance boost. As the presentation described, “memory footprint scales with the size of the GPU, not the size of a workload.”
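To put those two figures in proportion, here is the back-of-the-envelope math. The only assumption is decimal units (1GB = 1,000MB); using 1,024 instead nudges the result only slightly.

```python
# Figures quoted from the AMD demo; decimal units (1 GB = 1000 MB) are an assumption.
before_mb = 3.3 * 1000
after_mb = 113

ratio = before_mb / after_mb       # how many times smaller the footprint is
saving = 1 - after_mb / before_mb  # fraction of memory no longer needed

print(f"about {ratio:.0f}x smaller, roughly {saving:.0%} less memory")
```

That works out to a footprint about 29 times smaller, or roughly 97% less memory for the same scene.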

Work Graphs are invisible to end users, but they really are the next frontier for graphics programming, and as AMD, Nvidia, and Microsoft all said, they’re something that developers have been working toward for years. Less memory usage and better performance sound good to me. We’ll just have to wait until Work Graphs make a splash across real games.