There’s one thing that will strike fear into the heart of any PC gamer: a CPU bottleneck. It’s unthinkable that you wouldn’t get the full power of your GPU, often the most expensive component in your rig, when playing games. And although knowledge of what CPU bottlenecks are and how to avoid them is common, we’re in a new era of bottlenecks in 2024.

It’s time to reexamine the role your CPU plays in your gaming PC, not only so that you can get the most performance out of your rig but also to understand why the processor has left the gaming conversation over the last few years. It’s easy to focus all of your attention on a big graphics card, but ignore your CPU and you’ll pay a performance price.

The common knowledge

Let’s start with a high-level definition. A CPU bottleneck happens when your processor becomes the limiting factor in performance. When playing games, your CPU and GPU work together to render the final frame you see. The CPU handles some minor tasks like audio processing, but it mainly gets work ready for your GPU. Your GPU executes that work, and then it takes a new batch from your CPU. If you ever reach a point where your GPU is waiting on more work, you have a CPU bottleneck.
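To make that hand-off concrete, here is a minimal Python sketch of the CPU-to-GPU pipeline. It is an illustration under stated assumptions, not a model of any real engine: every frame has the same fixed costs (`cpu_ms` and `gpu_ms` are hypothetical inputs), the CPU prepares frames back to back, and there is no cap on how many frames can be queued (real drivers do limit this).

```python
def simulate_frames(cpu_ms: float, gpu_ms: float, frames: int) -> tuple[float, float]:
    """Return (total elapsed ms, total ms the GPU sat idle) for a simple CPU -> GPU pipeline.

    Assumptions (hypothetical, for illustration): every frame costs the same,
    the CPU prepares frames back to back, and the frame queue is unbounded.
    """
    cpu_ready = 0.0  # time at which the CPU has finished preparing the current frame
    gpu_free = 0.0   # time at which the GPU finished rendering the previous frame
    gpu_idle = 0.0   # accumulated time the GPU spent waiting for work
    for _ in range(frames):
        cpu_ready += cpu_ms
        # The GPU can start only once the frame is ready AND the previous one is done.
        start = max(cpu_ready, gpu_free)
        gpu_idle += start - gpu_free
        gpu_free = start + gpu_ms
    return gpu_free, gpu_idle


# A CPU that needs 10 ms per frame feeding a GPU that needs only 6 ms.
frames = 100
elapsed, idle = simulate_frames(cpu_ms=10.0, gpu_ms=6.0, frames=frames)
print(f"{1000 * frames / elapsed:.1f} fps, GPU idle for {idle:.0f} ms")
```

In that case the GPU waits about 4 ms on every frame after the first, which is the "waiting on more work" situation described above. Swap the two costs and the idle time all but disappears, because the GPU is now the slower side.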

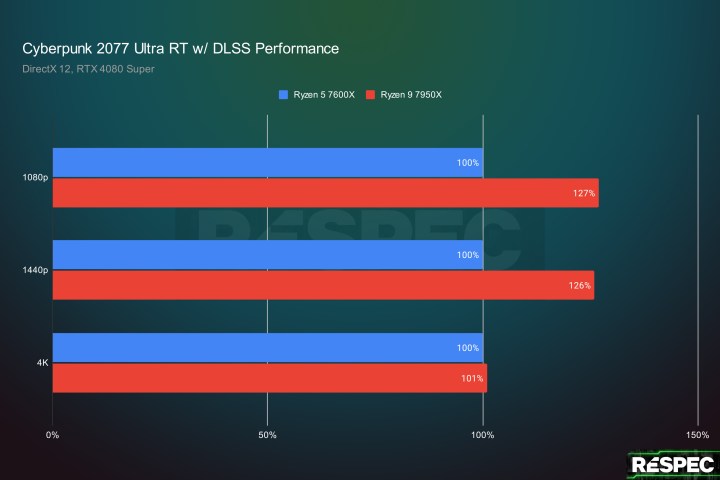

The solution is to increase the load on your GPU. Running at higher graphics settings or playing at a higher resolution means your GPU will take longer to render each frame, and in most cases, that means it won’t be waiting on your CPU for more work. You can see that in action in Cyberpunk 2077 below. The Ryzen 9 7950X is 7% faster than the Ryzen 5 7600X at 1080p, but when your GPU is forced to render the huge number of pixels required for 4K, there’s a negligible 2% difference in performance.

Note: I’m using percentages throughout the charts in this article for the sake of illustration. You can find the full performance results in a table farther down the page.
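The percentages match a simple relative uplift of the Ryzen 9 7950X over the Ryzen 5 7600X, computed from the full results table. Treat the formula as an assumption about how the charts were derived; it does reproduce the figures quoted in the text.

```python
def uplift_pct(baseline_fps: float, faster_fps: float) -> float:
    """Percentage by which faster_fps exceeds baseline_fps."""
    return (faster_fps / baseline_fps - 1.0) * 100.0


# (test, Ryzen 5 7600X fps, Ryzen 9 7950X fps), taken from the results table
results = [
    ("Cyberpunk 2077 1080p", 169, 180),
    ("Cyberpunk 2077 4K", 55, 56),
    ("Spider-Man Miles Morales 1080p", 112, 152.6),
    ("Spider-Man Miles Morales 4K", 112.7, 132.2),
]
for name, r5, r9 in results:
    print(f"{name}: {uplift_pct(r5, r9):.0f}%")
```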

That’s where the conversation usually ends, but that can lead to some misconceptions about how to apply the knowledge. You might think, for example, that you don’t need some monstrous processor if you plan on playing games at 4K.

The idea behind a CPU bottleneck is the same as it has always been, but the practical application can get messy quickly. With modern games, you’re often not rendering the game at your output resolution, and you have to contend with large, open worlds that put a lot more strain on your processor. Taking the decades-old lessons of CPU bottlenecks and applying them to modern games doesn’t hold up.

Challenging the status quo

I wanted to start with Cyberpunk 2077 because it’s very heavy on your graphics card. The REDengine that the game uses is remarkably well-optimized for CPUs, easily scaling down to six cores, like you find on the Ryzen 5 7600X, and up to 16 on the Ryzen 9 7950X. In nearly every situation, you’ll be GPU-limited in the game, which is exactly where you want to be for the highest frame rates.

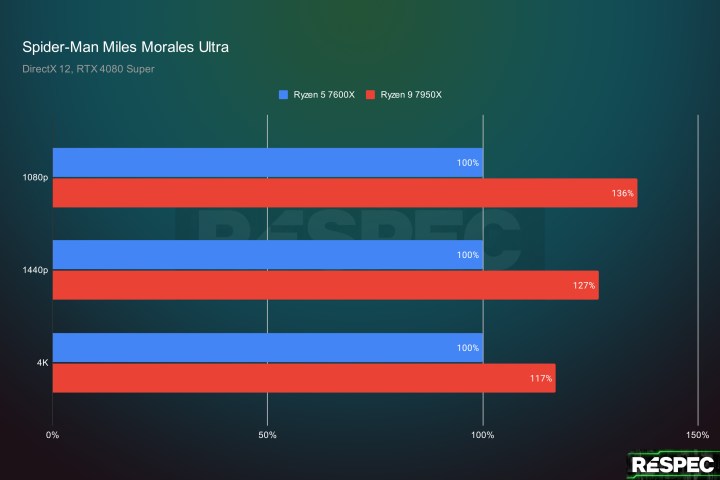

Let’s look at the flip side. Spider-Man Miles Morales is very intensive on your CPU, so it shouldn’t come as a surprise that there’s a 36% jump in performance with the Ryzen 9 7950X at 1080p. However, there’s still a jump of 17% all the way up at 4K.

It’s not just Spider-Man Miles Morales, either. In Cyberpunk 2077, if we flip on the Ultra RT preset and set DLSS to Performance mode (a way you might actually play the game), there’s a performance gap of roughly 26% to 27% at both 1080p and 1440p. That disappears at 4K.

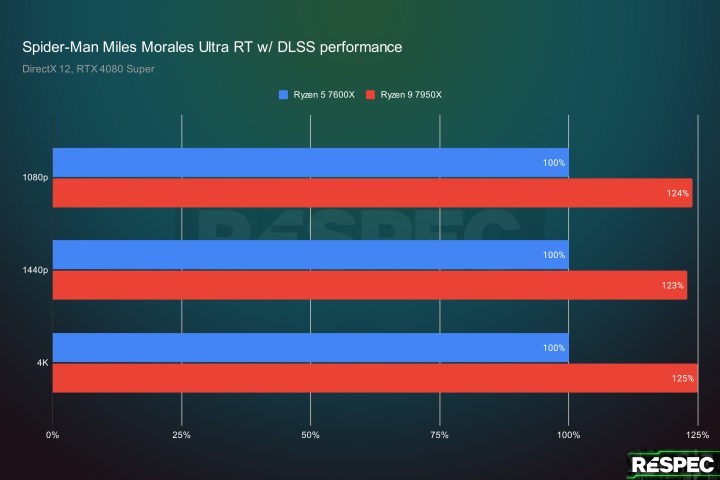

When you play a demanding game in 2024, you often won’t be playing at native resolution. You’ll turn on the highest graphics settings your GPU can muster and flip on upscaling through either Nvidia’s DLSS or AMD’s FSR. The result is that you’re generally putting more strain on your CPU. By rendering the game at a lower resolution and upscaling, your GPU finishes each frame sooner, so it’s more likely to end up waiting on your CPU.
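To put numbers on that, here is a small sketch that works out the internal render resolution when upscaling. It assumes the 50% per-axis scale that DLSS uses in Performance mode; other DLSS modes, and FSR, may use different factors, so the scale is a parameter rather than a fixed rule.

```python
# Output resolutions used in the tests in this article.
OUTPUT_RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}

# Per-axis render scale for DLSS Performance mode (50%); other modes differ.
PERFORMANCE_SCALE = 0.5


def internal_resolution(width: int, height: int, scale: float = PERFORMANCE_SCALE) -> tuple[int, int]:
    """Resolution the GPU actually renders before the upscaler reconstructs the output."""
    return round(width * scale), round(height * scale)


for label, (w, h) in OUTPUT_RESOLUTIONS.items():
    iw, ih = internal_resolution(w, h)
    share = (iw * ih) / (w * h)
    print(f"{label}: renders {iw}x{ih} ({share:.0%} of the output pixels)")
```

Because the scale applies to both axes, the GPU renders only a quarter of the output pixels in Performance mode, before accounting for the cost of the upscaler itself.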

This can lead to some very strange situations, and Cyberpunk 2077 already shows some of that behavior. The performance gap is almost identical at 1080p and 1440p, but it completely disappears at 4K.

From 1080p up to

A balancing act

The idea behind a CPU bottleneck holds up today, but tools like upscaling challenge PC gamers to think more critically about the role their CPU plays in gaming performance. Every system is different and every game is different, but you can dig deeper into your system’s performance to understand how it reacts to different games.

First, let’s look at the circle of life between your GPU and CPU. Instead of thinking about one component waiting on the other, you can think of them as individual pieces of your PC with a certain capacity for performance. You could say that your CPU and GPU are both capable of a certain frame rate, or that they both take a certain amount of time to complete their work.
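Framed that way, the frame rate you actually see is set by whichever part is slower. A minimal sketch of the idea, assuming each component's capacity can be summed up as a single frame rate (a simplification, since real frame times vary from frame to frame); the capacities in the example are hypothetical.

```python
def frame_time_ms(fps: float) -> float:
    """Convert a frame rate into the time budget for one frame."""
    return 1000.0 / fps


def delivered_fps(cpu_fps: float, gpu_fps: float) -> float:
    """The slower component sets the pace: frame rate is the minimum of the two capacities."""
    return min(cpu_fps, gpu_fps)


def limiting_part(cpu_fps: float, gpu_fps: float) -> str:
    """Name the bottleneck (ties are reported as GPU-limited)."""
    return "CPU" if cpu_fps < gpu_fps else "GPU"


# Hypothetical capacities, chosen only for illustration.
cpu_fps, gpu_fps = 100.0, 140.0
print(delivered_fps(cpu_fps, gpu_fps), limiting_part(cpu_fps, gpu_fps))
print(f"CPU needs {frame_time_ms(cpu_fps):.1f} ms per frame, GPU {frame_time_ms(gpu_fps):.1f} ms")
```

In this simple model, speeding up the part that isn't the limiter changes nothing, which is why balancing the two matters.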

| Test | Ryzen 5 7600X | Ryzen 9 7950X |
| --- | --- | --- |
| Cyberpunk 2077 1080p | 169 fps | 180 fps |
| Cyberpunk 2077 1440p | 119 fps | 122 fps |
| Cyberpunk 2077 4K | 55 fps | 56 fps |
| Cyberpunk 2077 RT 1080p w/ DLSS | 100 fps | 127 fps |
| Cyberpunk 2077 RT 1440p w/ DLSS | 100 fps | 126 fps |
| Cyberpunk 2077 RT 4K w/ DLSS | 84 fps | 85 fps |
| Spider-Man Miles Morales 1080p | 112 fps | 152.6 fps |
| Spider-Man Miles Morales 1440p | 112.6 fps | 143.2 fps |
| Spider-Man Miles Morales 4K | 112.7 fps | 132.2 fps |
| Spider-Man Miles Morales 1080p w/ DLSS | 86.1 fps | 106.7 fps |
| Spider-Man Miles Morales 1440p w/ DLSS | 84.8 fps | 104.7 fps |
| Spider-Man Miles Morales 4K w/ DLSS | 82.9 fps | 103.3 fps |

Thinking about bottlenecks this way is helpful for identifying them within your own system, and my full results above show why. In Spider-Man Miles Morales with DLSS on, you can see that the Ryzen 5 7600X is only capable of about 85 frames per second (fps), while the Ryzen 9 7950X is capable of about 105 fps. And we can confidently tie the performance to those parts because there’s virtually no change in performance from 1080p up to 4K.
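That check is easy to automate. The sketch below flags a CPU as the likely limit when its results barely move across resolutions. The 5% tolerance is an arbitrary, hypothetical threshold, not an established rule; the figures come from the results table.

```python
def spread_pct(fps_values: list[float]) -> float:
    """How far the best result sits above the worst one, in percent."""
    return (max(fps_values) / min(fps_values) - 1.0) * 100.0


def flat_across_resolutions(fps_by_res: dict[str, float], tolerance_pct: float = 5.0) -> bool:
    """True if frame rate hardly changes with resolution, a hint that the CPU is the limit.

    tolerance_pct is a hypothetical cutoff chosen for illustration.
    """
    return spread_pct(list(fps_by_res.values())) <= tolerance_pct


# Spider-Man Miles Morales with DLSS, from the results table.
ryzen5_dlss = {"1080p": 86.1, "1440p": 84.8, "4K": 82.9}
ryzen9_dlss = {"1080p": 106.7, "1440p": 104.7, "4K": 103.3}
# Spider-Man Miles Morales at native resolution on the Ryzen 9 7950X, for contrast.
ryzen9_native = {"1080p": 152.6, "1440p": 143.2, "4K": 132.2}

for label, results in [("7600X DLSS", ryzen5_dlss), ("7950X DLSS", ryzen9_dlss), ("7950X native", ryzen9_native)]:
    print(label, flat_across_resolutions(results))
```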

Cyberpunk 2077 with ray tracing and DLSS provides an even clearer example. The Ryzen 5 7600X is capable of about 100 fps, while the Ryzen 9 7950X is capable of about 127 fps. In both cases, the GPU is able to render more frames than the CPU can. It’s only at 4K that the GPU becomes the limiting factor, with both processors landing at about 85 fps.
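A complementary check compares two CPUs paired with the same GPU: where their results converge, the graphics card is probably the ceiling. Again, this is a sketch with a hypothetical 3% cutoff, applied to the Cyberpunk 2077 ray tracing and DLSS results from the table.

```python
def likely_limit(slower_cpu_fps: float, faster_cpu_fps: float, tolerance_pct: float = 3.0) -> str:
    """Guess the bottleneck from how much a faster CPU helps on the same GPU.

    If the faster CPU barely helps, the GPU is probably the limit; otherwise the
    slower CPU is holding things back. tolerance_pct is a hypothetical cutoff.
    """
    gap_pct = (faster_cpu_fps / slower_cpu_fps - 1.0) * 100.0
    return "GPU" if gap_pct <= tolerance_pct else "CPU"


# Cyberpunk 2077 RT with DLSS: (Ryzen 5 7600X fps, Ryzen 9 7950X fps), from the results table.
cyberpunk_rt_dlss = {"1080p": (100, 127), "1440p": (100, 126), "4K": (84, 85)}
for res, (r5, r9) in cyberpunk_rt_dlss.items():
    print(res, likely_limit(r5, r9))
```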

You don’t need a bunch of spare hardware and a spreadsheet to monitor performance in your own games. I like to use Special K to see how my CPU and GPU are interacting and what frame rate they’re producing. This app, which I’ve written about previously, includes a latency widget that sits on top of your games while you play. It shows the latency, or time, that your CPU and GPU are taking to render frames in real time. It even has a line showing whether you’re CPU-limited.
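Special K does this reading for you, but the underlying comparison is simple. The sketch below assumes you already have per-frame CPU and GPU times from some capture; the `FrameSample` type and the sample values are hypothetical, and this is not Special K's API or its exact logic. A frame counts as CPU-limited when the CPU took longer than the GPU.

```python
from dataclasses import dataclass


@dataclass
class FrameSample:
    """Hypothetical per-frame timings, in milliseconds."""
    cpu_ms: float  # time the CPU spent preparing the frame
    gpu_ms: float  # time the GPU spent rendering it


def cpu_limited_share(samples: list[FrameSample]) -> float:
    """Fraction of frames where the CPU, not the GPU, was the slower side."""
    if not samples:
        return 0.0
    limited = sum(1 for s in samples if s.cpu_ms > s.gpu_ms)
    return limited / len(samples)


def average_fps(samples: list[FrameSample]) -> float:
    """Assume each frame takes as long as its slower side (the simple pipelined model)."""
    if not samples:
        return 0.0
    total_ms = sum(max(s.cpu_ms, s.gpu_ms) for s in samples)
    return 1000.0 * len(samples) / total_ms


samples = [FrameSample(12.0, 8.0), FrameSample(11.0, 9.0), FrameSample(7.0, 10.0), FrameSample(13.0, 8.0)]
print(f"{cpu_limited_share(samples):.0%} of frames CPU-limited, about {average_fps(samples):.0f} fps")
```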

I like to turn on the widget whenever I’m playing a new game. I’ll turn it off eventually, but it’s remarkably helpful for understanding how the game works with the hardware I have. That knowledge can guide you a long way while you’re tweaking settings. In a game like Spider-Man Miles Morales, for example, I can immediately tell that turning on DLSS to Performance mode at

Between games that are taxing on your CPU like Spider-Man Miles Morales and Dragon’s Dogma 2, upscaling features, and broader
