Nvidia has two standout features on its RTX 30-series and RTX 40-series graphics cards: ray tracing and DLSS. The PlayStation 5 and Xbox Series X have both done a good job of introducing most people to ray tracing, but DLSS is still a little nebulous. It’s a little complex, but it lets you play a game at a virtualized higher resolution, maintaining greater detail and higher frame rates without taxing your graphics card as much. It gives you the best of all worlds by harnessing the power of machine learning, and with the introduction of DLSS 3, the technology just got even more powerful.

But there’s a little more to the story than that. Here’s everything you need to know about DLSS, how it works, and what it can do for your PC games.

What is DLSS?

DLSS stands for deep learning supersampling. The “supersampling” bit refers to an anti-aliasing method that smooths the jagged edges that show up on rendered graphics. Unlike other forms of anti-aliasing, though, SSAA (supersampling anti-aliasing) works by rendering the image at a much higher resolution and using that data to fill in the gaps at the native resolution.
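
To make the supersampling idea concrete, here is a minimal Python sketch. It assumes a tiny grayscale image stored as nested lists and a simple box filter that averages each block of high-resolution pixels into one native pixel. The function name `supersample_downscale` is made up for illustration, and real SSAA implementations may use different sample patterns and filters.

```python
def supersample_downscale(hi_res, factor):
    """Average each factor x factor block of a high-res image into one pixel."""
    out_h = len(hi_res) // factor
    out_w = len(hi_res[0]) // factor
    out = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            # Collect every high-res sample that falls inside this native pixel.
            block = [
                hi_res[y * factor + dy][x * factor + dx]
                for dy in range(factor)
                for dx in range(factor)
            ]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# A hard diagonal edge rendered at 4x4, then resolved down to 2x2.
hi_res = [
    [0, 0, 0, 255],
    [0, 0, 255, 255],
    [0, 255, 255, 255],
    [255, 255, 255, 255],
]
native = supersample_downscale(hi_res, 2)
print(native)
```

The pixels along the edge come out as in-between shades rather than pure black or white, which is the smoothing effect described above. The cost is that four times as many pixels had to be rendered first.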

The “deep learning” part is Nvidia’s secret sauce. Using the power of machine learning, Nvidia can train AI models with high-resolution scans. Then, the anti-aliasing method can use the AI model to fill in the missing information. This is important, as SSAA usually requires you to render the higher-resolution image locally. Nvidia does it offline, away from your computer, providing the benefits of supersampling without the computing overhead.

This is all possible thanks to Nvidia’s Tensor cores, which are only available in RTX GPUs (outside of data center solutions, such as the Nvidia A100). Although RTX 20-series GPUs have Tensor cores inside, the RTX 3060, 3060 Ti, 3070, 3080, and 3090 come with Nvidia’s third-generation Tensor cores, which offer greater per-core performance.

Nvidia’s newest graphics cards from the RTX 40-series lineup bring the Tensor cores up to their fourth generation. This makes the DLSS boost even more powerful. Thanks to the new 8-bit floating point tensor engine, the cores have had their throughput increased by as much as five times compared to the previous generation.

Nvidia is leading the charge in this area, though AMD’s new FidelityFX Super Resolution feature could provide some stiff competition. Even Intel has its own supersampling technology called Intel XeSS, or Intel Xe Super Sampling. More on that later.

What does DLSS actually do?

DLSS is the result of an exhaustive process of teaching Nvidia’s AI algorithm to generate better-looking games. After rendering the game at a lower resolution, DLSS infers information from its knowledge base of super-resolution image training to generate an image that still looks like it was running at a higher resolution. The idea is to make games rendered at 1440p look like they’re running at 4K, or 1080p games look like 1440p. DLSS 2.0 can upscale to four times the rendered pixel count, allowing you to render games at 1080p while outputting them at 4K.
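
The arithmetic behind those resolution jumps is easy to check. The short Python sketch below assumes the standard 16:9 pixel dimensions for 1080p, 1440p, and 4K, and compares how many pixels the GPU renders against how many end up on screen. The names `RESOLUTIONS` and `pixel_ratio` are illustrative only.

```python
# Standard 16:9 pixel dimensions (width, height).
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_ratio(render, output):
    """How many output pixels exist for every rendered pixel."""
    render_w, render_h = RESOLUTIONS[render]
    output_w, output_h = RESOLUTIONS[output]
    return (output_w * output_h) / (render_w * render_h)


ratio_1080_to_4k = pixel_ratio("1080p", "4K")
ratio_1440_to_4k = pixel_ratio("1440p", "4K")
ratio_1080_to_1440 = pixel_ratio("1080p", "1440p")

print(ratio_1080_to_4k, ratio_1440_to_4k, round(ratio_1080_to_1440, 2))
```

Going from 1080p to 4K means three out of every four pixels on screen have to be inferred rather than rendered, while 1440p to 4K is a gentler 2.25x jump.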

More traditional super-resolution techniques can lead to artifacts and bugs in the eventual picture, but DLSS is designed to work with those errors to generate an even better-looking image. In the right circumstances, it can deliver substantial performance uplifts without affecting the look and feel of a game; on the contrary, it can make the game look even better.

Where early DLSS games like Final Fantasy XV delivered modest frame rate improvements of just 5 frames per second (fps) to 15 fps, more recent releases have seen far greater improvements. With games like Deliver Us the Moon and Wolfenstein: Youngblood, Nvidia introduced a new AI engine for DLSS, which we’re told improves image quality, especially at lower resolutions like 1080p, and can increase frame rates in some cases by over 50%.

With the latest iteration of DLSS 3, the frame rate gains might be even more substantial thanks to the new frame-generation feature. Previous implementations of DLSS just had the Tensor cores make frames look better, but now frames can be rendered using just AI. We’ll discuss DLSS 3 in greater detail later.

DLSS also offers selectable quality modes. Users can pick between Performance, Balanced, and Quality, each striking a different balance between frame rate and image quality.
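
The article doesn’t give numbers for these modes, so the following Python sketch leans on an assumption: the per-axis render scales commonly cited for DLSS 2 (roughly 67% for Quality, 58% for Balanced, and 50% for Performance). Treat the values in `MODE_SCALE` as illustrative rather than official, since games can choose their own.

```python
# ASSUMPTION: commonly cited per-axis render scales for DLSS 2 modes.
# These are not stated in the article and individual games may differ.
MODE_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
}


def internal_resolution(output_w, output_h, mode):
    """Estimate the resolution the game renders at before upscaling."""
    scale = MODE_SCALE[mode]
    return round(output_w * scale), round(output_h * scale)


for mode in MODE_SCALE:
    w, h = internal_resolution(3840, 2160, mode)
    print(f"{mode}: renders about {w}x{h}, outputs 3840x2160")
```

Under these assumed values, Quality mode at 4K renders at roughly 1440p and Performance mode at 1080p, which lines up with the resolution pairs mentioned earlier.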

How does DLSS work?

DLSS forces a game to render at a lower resolution (typically 1440p) and then uses its trained AI algorithm to infer what it would look like if it were rendered at a higher one (typically 4K). It does this by utilizing some anti-aliasing effects (likely Nvidia’s own TAA) and some automated sharpening. Visual artifacts that wouldn’t be present at higher resolutions are also ironed out and even used to infer the details that should be present in an image.

As Eurogamer explains, the AI algorithm is trained to look at certain games at extremely high resolutions (supposedly 64x supersampling) and is distilled down to something just a few megabytes in size before being added to the latest Nvidia driver releases and made accessible to gamers all over the world. Originally, Nvidia had to go through this process on a game-by-game basis. In DLSS 2.0, Nvidia provides a general solution, so the AI model no longer needs to be trained for each game.

In effect, DLSS is a real-time version of Nvidia’s screenshot-enhancing Ansel technology. It renders the image at a lower resolution to provide a performance boost, then applies various effects to deliver an overall result comparable to raising the resolution.

The result can be a mixed bag, but in general, it leads to higher frame rates without a substantial loss in visual fidelity. Nvidia claims frame rates can improve by as much as 75% in Remedy Entertainment’s Control when using both DLSS and ray tracing. It’s usually less pronounced than that, and not everyone is a fan of the eventual look of a DLSS game, but the option is certainly there for those who want to beautify their games without the cost of running at a higher resolution.

In Death Stranding, we saw significant improvements at 1440p over native rendering. Performance mode lost some of the finer details on the back package, particularly in the tape. Quality mode maintained most of the detail while smoothing out some of the rough edges of the native render. Our “DLSS off” screenshot shows the quality without any anti-aliasing. Although DLSS doesn’t maintain that level of quality, it’s very effective in combating aliasing while maintaining most of the detail.

We didn’t see any over-sharpening in Death Stranding, but that’s something you might encounter while using DLSS.

Better over time

DLSS can let gamers who can’t quite reach comfortable frame rates at resolutions above 1080p get there through inference. It is certainly one of the most powerful features of the RTX GPUs. The cards themselves aren’t as powerful as we might have hoped, and the ray tracing effects are pretty but tend to have a sizable impact on performance, but DLSS gives us the best of both worlds: better-looking games that perform better, too.

Originally, it seemed like DLSS would be a niche feature for low-end graphics cards, but that’s not the case. Instead, DLSS has enabled games like Cyberpunk 2077 and Control to push visual fidelity on high-end hardware without making the games unplayable. DLSS elevates low-end hardware while providing a glimpse into the future for high-end hardware.

Nvidia has shown the RTX 3090 rendering games like Wolfenstein: Youngblood at 8K with ray tracing and DLSS turned on. Although wide adoption of 8K is still far off, 4K displays are becoming increasingly common. Instead of rendering at native 4K and hoping to stick around 50 fps to 60 fps, gamers can render at 1080p or 1440p and use DLSS to fill in the missing information. The result is higher frame rates without a noticeable loss in image quality.

DLSS is improving all the time, too, with regular updates to the AI algorithm. A recent update lets it make smarter use of motion vectors, which helps improve how objects look when they’re moving. That update also reduces ghosting, makes particle effects look clearer, and improves temporal stability. DLSS 2 is now fairly widely adopted, and 216 games support it as of September 2022.

The improvements don’t stop there, though. In fact, things are about to get a lot more interesting with the introduction of DLSS 3.

DLSS 3 reinvents the technology by rendering frames instead of pixels

On September 20, during its GTC 2022 keynote, Nvidia announced DLSS 3 — the latest iteration of the technology that will be available to the owners of RTX 40-series graphics cards. Unlike some of the previous, smaller updates, the changes to DLSS are big this time around, and they have the potential to offer a huge increase in performance with the addition of AI-generated frames, which are created using the real frames that a GPU renders. This is very different from DLSS and DLSS 2, which just touched up real frames with AI-powered upscaling.

There are currently four GPUs that support DLSS 3:

- RTX 4090

- RTX 4080

- RTX 4070 Ti

- RTX 4070

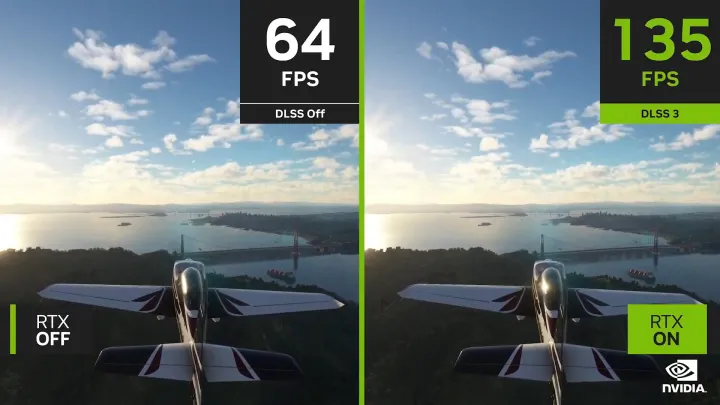

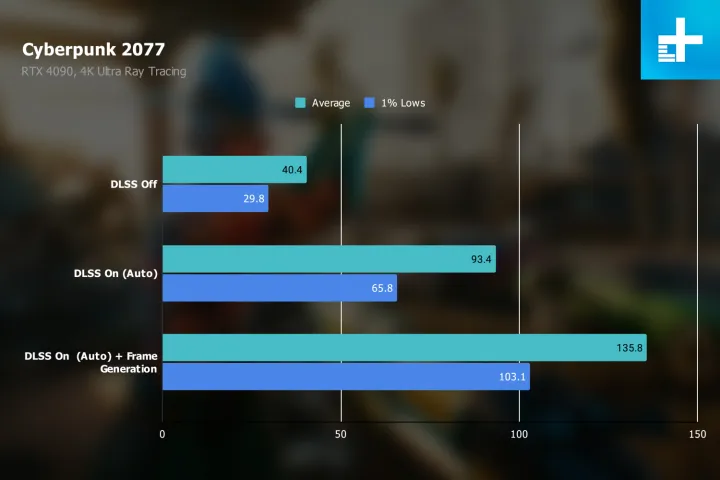

In our RTX 4090 review, we found that DLSS 3 was able to deliver significantly higher frame rates than DLSS 2. In Cyberpunk 2077 at 4K with ray tracing set to the max, turning on DLSS 3 yielded nearly 50% more frames than just using DLSS 2; compared to not using DLSS at all, DLSS 3 had over three times the frame rate. In this aspect, DLSS 3 delivers exactly as Nvidia promised.

However, there are some technical limitations with DLSS 3. It inserts an AI-generated frame in between two real frames, and that AI frame is drawn up based on the differences between the two real frames. The generated frame can’t be produced until the second real frame has been rendered, and the GPU can’t show you that second real frame until after the generated one, which is why the latency is so much higher with DLSS 3. This is the reason why Nvidia Reflex also needs to be enabled for DLSS 3 to work.
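
A toy timeline helps show where that extra latency comes from. The Python sketch below is a simplified model under stated assumptions: real frames finish at a fixed interval, generating the in-between frame costs no time, and each real frame is held back by half an interval so the output stays evenly paced. It does not model Nvidia’s actual pipeline or the effect of Reflex, and `presentation_schedule` is a hypothetical name.

```python
def presentation_schedule(render_interval_ms, real_frames):
    """Return (display_time_ms, label) pairs for interpolated presentation."""
    schedule = []
    half = render_interval_ms / 2
    for k in range(real_frames):
        ready = k * render_interval_ms  # moment real frame k finishes rendering
        if k > 0:
            # The in-between frame needs both real k-1 and real k, so the
            # earliest it can be shown is when real k is ready.
            schedule.append((ready, f"generated {k - 1}-{k}"))
        # Real frame k waits behind the generated frame (assumed half interval).
        schedule.append((ready + half, f"real {k}"))
    return schedule


# Real frames every 20 ms (50 fps rendered).
schedule = presentation_schedule(20, 3)
for time_ms, label in schedule:
    print(f"{time_ms:5.1f} ms  {label}")
```

In this model, a new image reaches the screen every 10 ms instead of every 20 ms, so the displayed frame rate doubles. Each real frame, however, appears 10 ms after it was ready, which is the kind of delay the paragraph above describes.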

The other major limitation of DLSS 3 is simply down to AI-generated frames having mistakes and weird visual bugs. In our testing, we found that there was a general quality decrease when enabling DLSS 3, which is easy to overlook when the frame rate gains are so high, but some quality issues are hard to ignore. UI or HUD elements, in particular, get garbled in the AI-generated frames, presumably because the AI is geared toward 3D environments and not 2D text that’s on top of the game itself. DLSS 2 doesn’t have this issue because the UI is rendered independently of the 3D elements, unlike DLSS 3.

Below is a screenshot from Cyberpunk 2077 comparing DLSS 3, DLSS 2, and native resolution from left to right. When it comes to the environment, both implementations of DLSS are better than the native, but you can also see that in the DLSS 3 screenshot on the left, the quest marker is distorted, and the text is unreadable. This is usually what happens in AI-generated frames in games that have DLSS 3.

On one hand, DLSS 3 pushes frames even higher and usually doesn’t have much worse visual quality than DLSS 2. But on the other hand, enabling DLSS 3 causes latency to be very high relative to the frame rate and can introduce weird visual bugs, especially on UI and HUD elements. Lowering latency and reducing visual artifacts will undoubtedly be challenging for Nvidia since these are fundamental trade-offs that come with DLSS 3. For a first-generation technology, however, it’s a good first try, and hopefully, Nvidia will be able to improve things with future iterations of DLSS 3.

DLSS 3 is slowly making its way into more games. Here are the titles that currently support DLSS 3:

- A Plague Tale: Requiem

- Atomic Heart

- Bright Memory: Infinite

- Chernobylite

- Conqueror’s Blade

- Cyberpunk 2077

- Deliver Us Mars

- Destroy All Humans 2

- Dying Light 2

- F1 22

- FIST: Forged in Shadow Torch

- Hitman 3

- Hogwarts Legacy

- Icarus

- Jurassic World Evolution 2

- Justice

- Loopmancer

- Marauders

- Marvel’s Spider-Man Remastered

- Microsoft Flight Simulator

- Midnight Ghost Hunt

- Mount & Blade II: Bannerlord

- Naraka: Bladepoint

- Portal RTX

- Ripout

- The Witcher 3: Wild Hunt

- Warhammer 40,000: Darktide

DLSS versus FSR versus RSR versus XeSS

AMD is Nvidia’s biggest competitor when it comes to graphics technology. To compete with DLSS, AMD released FidelityFX Super Resolution (FSR) in 2021. Although it achieves the same goal of improving visuals while raising frame rates, FSR works quite differently from DLSS. FSR renders frames at a lower resolution and then uses an open-source spatial upscaling algorithm to make the game look like it is running at a higher resolution. Unlike DLSS, it doesn’t factor in motion vector data. DLSS uses an AI algorithm to achieve a similar result, but this technique is only supported by Nvidia’s own RTX GPUs. FSR, on the other hand, can work on just about any GPU.
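
“Spatial” here means the upscaler only looks at the pixels of the current frame. As a rough illustration, the Python sketch below uses plain bilinear interpolation on a tiny grayscale image. This is a stand-in chosen for simplicity, not FSR’s actual algorithm, which is more sophisticated and includes edge-aware filtering and sharpening. The function name `bilinear_upscale` is illustrative.

```python
def bilinear_upscale(img, out_w, out_h):
    """Upscale a grayscale image using only the pixels of that one frame."""
    in_h, in_w = len(img), len(img[0])
    out = []
    for y in range(out_h):
        # Map the output row back to a fractional source row.
        sy = y * (in_h - 1) / (out_h - 1) if out_h > 1 else 0
        y0 = int(sy)
        y1 = min(y0 + 1, in_h - 1)
        fy = sy - y0
        row = []
        for x in range(out_w):
            sx = x * (in_w - 1) / (out_w - 1) if out_w > 1 else 0
            x0 = int(sx)
            x1 = min(x0 + 1, in_w - 1)
            fx = sx - x0
            # Blend the four nearest source pixels by distance.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


low_res = [
    [0, 100],
    [100, 200],
]
high_res = bilinear_upscale(low_res, 3, 3)
for row in high_res:
    print(row)
```

Notice that nothing in the function refers to previous frames or to how objects are moving. That is the key contrast with DLSS 2, which also draws on motion vectors and earlier frames.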

In addition to FSR, AMD also has Radeon Super Resolution (RSR), which is a spatial upscaling technique. While this sounds similar to DLSS, there are differences. RSR is built using the same algorithm as FidelityFX Super Resolution (FSR) and is a driver-based feature that is delivered via AMD’s Adrenalin software. RSR aims to fill the gap where FSR is not available, as the latter has to be implemented directly in specific games. Essentially, RSR should work in almost any game, as it doesn’t require developers to implement it. Notably, FSR is available across newer Nvidia and AMD GPUs, whereas RSR is only compatible with AMD’s RDNA cards, which include the Radeon RX 5000 and RX 6000 series. Soon, that lineup will be expanded to include RDNA 3 and its Radeon RX 7000 series GPUs.

Intel has also been working on its own supersampling technology called Xe Super Sampling (XeSS), and unlike with FSR or DLSS, there are two different versions available. The first makes use of the XMX matrix math units, which are present in its new Arc Alchemist GPUs; these XMX units take care of all the AI processing on the hardware end. The other version makes use of the widely supported four-element vector dot product (DP4a) instruction, thus removing the dependency on Intel’s own hardware and allowing XeSS to work on Nvidia and AMD GPUs.
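
For the curious, here is roughly what a DP4a operation computes, written as a Python sketch. It assumes the signed variant: four 8-bit integers packed into each 32-bit word, multiplied pairwise, summed, and added to an accumulator. The helper names `pack_int8` and `dp4a` are illustrative, and the sketch does not model hardware details such as 32-bit accumulator overflow.

```python
def pack_int8(values):
    """Pack four signed 8-bit integers into one 32-bit word."""
    word = 0
    for lane, value in enumerate(values):
        word |= (value & 0xFF) << (8 * lane)
    return word


def dp4a(a_packed, b_packed, accumulator):
    """Four-element int8 dot product plus accumulator (signed variant assumed)."""
    total = accumulator
    for lane in range(4):
        a = (a_packed >> (8 * lane)) & 0xFF
        b = (b_packed >> (8 * lane)) & 0xFF
        # Sign-extend each 8-bit lane back to a Python integer.
        if a >= 128:
            a -= 256
        if b >= 128:
            b -= 256
        total += a * b
    return total


a = pack_int8([1, -2, 3, 4])
b = pack_int8([5, 6, -7, 8])
result = dp4a(a, b, 10)
print(result)
```

Neural networks spend most of their time on exactly this kind of multiply-and-add work, so a GPU that can do four of them in one instruction can run a low-precision model reasonably quickly even without dedicated matrix hardware.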
