Nvidia’s GTC (GPU Technology Conference) event has ended, and the keynote was packed to the brim with news. The new RTX 40-series graphics cards were obviously the standout announcements, kicking off an entirely new generation of graphics cards for PC gamers.

But as usual, Nvidia’s announcements went far beyond consumer gaming, touching everything from robotics and self-driving cars to medicine and science.

GeForce RTX 4090 and 4080

The big announcement that kicked off the keynote, of course, was a trio of new graphics cards. The RTX 4090 is the flagship model, which Nvidia says is up to 2x faster than the previous-generation RTX 3090 Ti in traditional rasterized games. This massive card is based on Ada Lovelace, the new architecture behind the RTX 40 series and several other GTC announcements. It comes with 16,384 CUDA cores and 24GB of GDDR6X memory and, most notably, draws the same 450 watts of power as the RTX 3090 Ti.

The RTX 4090 will be available on October 12 and will cost $1,599.

| | RTX 4090 | RTX 4080 16GB | RTX 4080 12GB |
|---|---|---|---|
| CUDA cores | 16,384 | 9,728 | 7,680 |
| Memory | 24GB GDDR6X | 16GB GDDR6X | 12GB GDDR6X |
| Boost clock speed | 2520MHz | 2505MHz | 2610MHz |
| Bus width | 384-bit | 256-bit | 192-bit |
| Power | 450W | 320W | 285W |

The RTX 4080 takes a step down from there and comes in two configurations. The 16GB model will cost $1,199, and the 12GB model will cost $899.

These GPUs are a bit more manageable, drawing 320W and 285W, respectively.

DLSS 3

Along with the new GPUs, Nvidia announced the third generation of RTX, which includes DLSS 3. As with the jump from the original DLSS to DLSS 2, this appears to be a substantial evolution of the technology. This time, the AI can generate entire new frames, not just pixels, resulting in an even larger boost to frame rates in games.

How fast? Nvidia says up to four times faster than conventional rendering, thanks in part to the Optical Flow Accelerator in the new GPUs. DLSS 3 actually bundles three Nvidia technologies into one: DLSS Super Resolution, DLSS Frame Generation, and Nvidia Reflex.

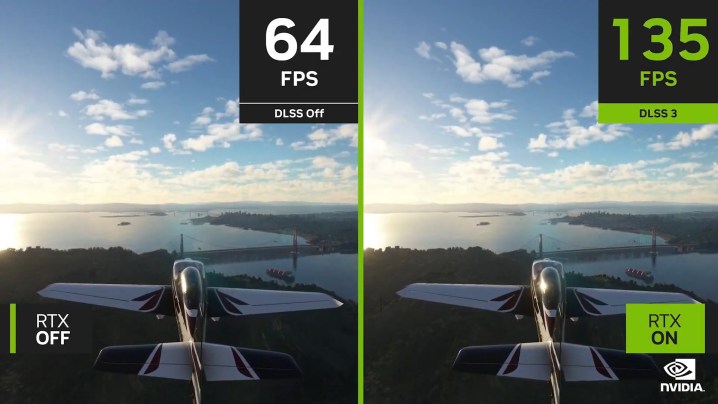

The other big feature of DLSS 3 is its ability to boost CPU-limited games. Because Frame Generation creates new frames on the GPU, it can raise frame rates even when the CPU is the bottleneck. Nvidia showed a demo of Microsoft Flight Simulator, a notoriously CPU-bound game, and seeing DLSS 3 boost frame rates from 64 fps to 135 fps is seriously impressive, especially in this type of game.
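To put the Flight Simulator numbers in perspective, frame rate can be converted to average frame time (1,000 ms divided by frames per second). The short Python sketch below does that arithmetic with the two figures from Nvidia's demo; the function name `frame_time_ms` is purely illustrative. Frame time here means the average interval between displayed frames, which is not the same thing as input latency.

```python
def frame_time_ms(fps: float) -> float:
    """Average time between displayed frames, in milliseconds."""
    return 1000.0 / fps


native_fps = 64.0   # Microsoft Flight Simulator without DLSS 3 (Nvidia's demo figure)
dlss3_fps = 135.0   # the same scene with DLSS 3 enabled (Nvidia's demo figure)

# Ratio of output frame rates, i.e. how many times more frames reach the screen
speedup = dlss3_fps / native_fps

print(f"Without DLSS 3: {frame_time_ms(native_fps):.1f} ms per frame")
print(f"With DLSS 3:    {frame_time_ms(dlss3_fps):.1f} ms per frame")
print(f"Speedup:        {speedup:.2f}x")
```

That works out to roughly 15.6 ms versus 7.4 ms per frame, or a little over a 2.1x increase in displayed frames.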

DLSS 3 arrives in October, and Nvidia says more than 35 games and applications will support it at launch.

Lastly, Nvidia announced Portal RTX, a modernized version of the popular PC game, now with DLSS 3 support.

Nvidia Drive Thor

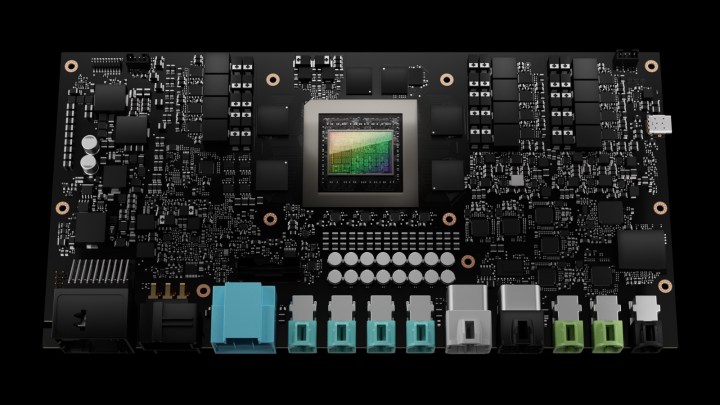

Nvidia has spent years trying to get its foot in the door of self-driving cars, and its latest product feels like a significant step in the right direction. Nvidia Drive Thor is the company’s next-generation superchip, based on the Hopper GPU architecture and paired with the Nvidia Grace CPU.

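According to Nvidia, Drive Thor is the first automotive platform to include an inference transformer engine, hardware designed to accelerate the transformer neural networks behind many modern AI models.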

Nvidia says Drive Thor will be available for automakers’ 2025 car models as the true successor to Drive Orin, which is currently in production. Thor takes the place of Drive Atlan, which had been announced just last year.

Nvidia Drive Concierge

Alongside the new chip, Nvidia showed off Drive Concierge, a complete in-vehicle infotainment system. It replaces the instrument cluster on a typical car dashboard with what Nvidia calls a “digital cockpit.”

Elsewhere in the vehicle, Drive Concierge gives passengers features such as video conferencing, video streaming, digital assistants, cloud gaming through GeForce Now, and full visualizations of the car.

The entire design was created in Omniverse, Nvidia’s 3D design and simulation platform, and Nvidia says designing in Omniverse lets carmakers tweak all these aspects of the car long before they become a physical reality.

Omniverse Cloud and Nvidia’s Graphics Delivery Network

Omniverse Cloud was announced earlier this year as Nvidia’s complete suite of cloud services for people building out the future of the metaverse, without requiring all that computing power in their own machines. New additions to the suite include the robotics simulation application Nvidia Isaac Sim and the autonomous vehicle simulator Nvidia Drive Sim.

Interestingly, Omniverse Cloud was mentioned alongside the Nvidia Graphics Delivery Network (GDN), the distributed data center network powering Omniverse Cloud. Built on the same capabilities as GeForce Now, the company’s cloud gaming service, the GDN delivers high-performance, low-latency graphics to anyone who needs them.

Nvidia spoke at length about the ways Omniverse and digital twins (virtual replicas of physical products or systems) are being used, even stating that every software-controlled product of the future must have a digital twin for testing.

Jetson Orin Nano

Nvidia announced the Jetson Orin Nano modules, the latest addition to the Jetson family of small computers built for accelerating AI and robotics. Nvidia claims these new “system-on-modules” deliver up to 40 trillion operations per second (TOPS) of AI performance, 80 times the performance of their predecessor, the original Jetson Nano.
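Working backwards from Nvidia's two headline figures shows what they imply about the older module. The snippet below is a simple sketch that uses only the numbers quoted above; both are Nvidia's peak "up to" claims, not measured results, so the derived value is an implied ceiling rather than a benchmark.

```python
# Peak figures quoted by Nvidia for Jetson Orin Nano (marketing "up to" numbers)
orin_nano_tops = 40       # trillion operations per second
claimed_speedup = 80      # "80 times the performance" of its predecessor

# Dividing peak throughput by the claimed multiplier gives the predecessor's implied peak
implied_baseline_tops = orin_nano_tops / claimed_speedup

print(f"Implied predecessor performance: {implied_baseline_tops} TOPS")
```

In other words, the 80x claim implies a predecessor rated at roughly 0.5 TOPS of AI performance.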

The Orin Nano modules will be available starting in January, with the 8GB model starting at $199.

Everything else

- Nvidia has announced two new large language model services: the NeMo API for natural language AI applications, and BioNeMo for applications in chemistry and biology.

- Lowe’s has announced it will make its library of over 600 photorealistic 3D product assets available for free to other Omniverse creators. The company also discussed how it is exploring interactive digital twins and the Magic Leap 2 AR headset to give employees “superpowers.”

- Germany’s Deutsche Bahn has announced that it’s using digital twins in Omniverse to develop its future railway network.

- The second generation of Nvidia OVX has been announced, powered by the L40 GPU and meant for building “complex industrial digital twins.” The L40 uses third-generation RT cores and fourth-generation Tensor cores for these intense Omniverse workloads and has already been adopted by companies like BMW and Jaguar Land Rover. The new OVX systems will be available from Lenovo, Inspur, and Supermicro by early 2023.

- Nvidia has announced that the H100 Tensor Core GPU has entered full production, with the first Hopper-based products rolling out in October.

- In the medical world, Nvidia has announced IGX, a combined hardware and software platform designed specifically for use cases such as robotic-assisted surgeries and patient monitoring.

- Nvidia also demonstrated how IGX has applications in the industrial world, specifically in building safe autonomous factories where humans and machines work together.

- Nvidia and the Broad Institute of MIT and Harvard announced a partnership that brings the GPU-accelerated Clara Parabricks software to the Terra biomedical data platform, allowing researchers to speed up tasks like genome sequencing analysis by as much as 24x. Nvidia says it’s contributing its own deep learning model to “help identify genetic variants that are associated with diseases.”

- Nvidia and Booz Allen have announced an “expanded collaboration” to bring GPU-accelerated AI to Booz Allen’s cybersecurity platform, which is based on Nvidia’s Morpheus framework.