We’re off to a rocky start with PC releases in 2023. Hogwarts Legacy, Resident Evil 4 Remake, Forspoken, and most recently and notably The Last of Us Part One have all launched in dire states, with crashes, hitches, and lower performance despite a minor increase in visual quality. And a big reason why is that the graphics cards of the last few years aren’t equipped to handle the demands of games today.

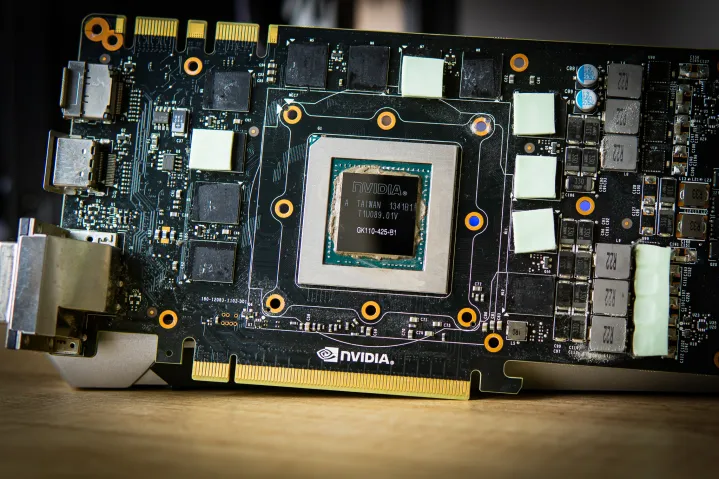

The GPUs themselves are powerful enough; games haven’t suddenly gotten more demanding for no reason. The problem is video memory, or VRAM. Many of the most powerful GPUs from the previous generation weren’t set up to handle the VRAM demands of modern games, which may explain why your relatively powerful PC can’t handle the latest and most exciting new games.

What does your VRAM do anyway?

Think of your graphics card like a self-contained computer. In your PC, your processor and

Your

Shaders, especially in titles with

The RTX 3070 Ti problem

When ExtremeTech published a roundup of RTX 3070 Ti reviews, it didn’t mince words. The card had a “long-term problem” with its low VRAM, and we’re starting to see that problem take shape.

Resident Evil 4 Remake can hog up to 8GB of VRAM simply on textures, though you have the option to go much lower. The Last of Us Part One can consume nearly 7GB at its lowest graphics preset and upwards of 14GB at its highest. And Hogwarts Legacy sucked up nearly 13GB of VRAM with

The effects of this are already clear. In preliminary testing of The Last of Us Part One, Hardware Unboxed found massive stuttering with 8GB of VRAM compared to 16GB, even with two

Even powerful

I’m calling this the RTX 3070 Ti problem, but it’s not exclusive to the RTX 3070 Ti. It just serves as a good touchstone for a wide swath of GPUs that are stuck at or under 8GB of VRAM, despite sporting excellent GPU power otherwise. Even the 10GB RTX 3080 isn’t immune, especially with the highest graphics settings at

Focused in one direction

It’s upsetting that

Nvidia makes the best graphics cards you can buy today, but AMD and Intel have focused some effort on boosting VRAM, even on lower-end models. For example, Intel’s Arc A770 includes 16GB of VRAM for under $350, while even the $900 RTX 4070 Ti includes only 12GB. Similarly, AMD opted for 12GB of memory for its midrange RX 6700 XT, while Nvidia stuck with 8GB. That can make a difference in games like Hogwarts Legacy, where Intel’s GPU performs much better than its price would suggest.

Some of that is being rectified with newer cards. Rumors suggest Nvidia’s RTX 4070 could carry 12GB of VRAM, but it still stings that high-end GPUs capable of running the most demanding games are coming up against issues simply due to VRAM limitations. Unfortunately, there’s not a lot you can do if you’re running out of video memory outside of upgrading your

You can reduce some stuttering issues, though. If you’re limited by VRAM, turning down your texture resolution can help a lot. You can also reset your shader cache through AMD Software and try increasing your shader cache size in the Nvidia Control Panel. The ultimate fix, though, is more VRAM on
