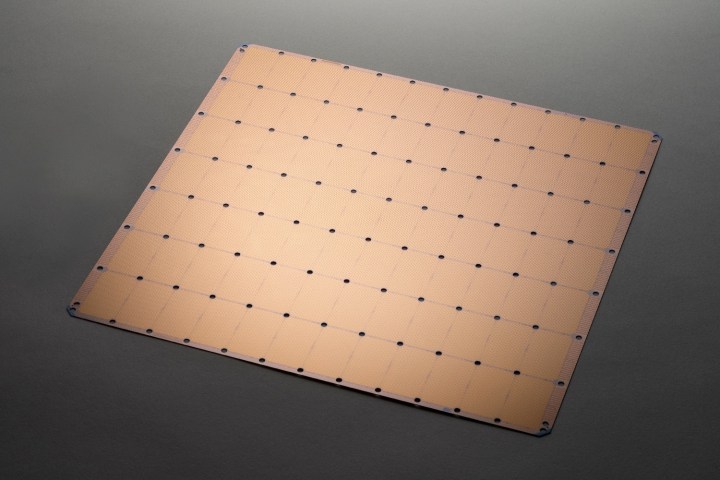

We’re used to microchips becoming increasingly miniaturized, thanks to Moore’s Law, the long-running trend that has let engineers pack more and more transistors onto ever-smaller chips. The same can’t be said for the Wafer Scale Engine (WSE), a chip designed by California startup Cerebras, which recently emerged from stealth. Cerebras has created an immensely powerful chip built for A.I. workloads, and there’s no missing it, partly because, unlike most microchips, this one is the size of an iPad.

The 46,225-square-millimeter WSE chip boasts an enormous 1.2 trillion transistors, 400,000 cores, and 18 gigabytes of on-chip memory. That makes it the biggest chip ever created. The previous record-holder measured a mere 815 square millimeters, with 21.1 billion transistors. As CEO and co-founder Andrew Feldman told Digital Trends, this means the WSE chip is “56.7 times larger” than the giant chip it beat for the title.
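As a quick sanity check, the “56.7 times” figure follows directly from dividing the two die areas quoted above. The minimal sketch below uses only the numbers in this article; the variable names are illustrative, and the transistor ratio is shown simply to compare it with the area ratio.

```python
# Die areas quoted in the article, in square millimeters.
WSE_AREA_MM2 = 46_225
PREVIOUS_RECORD_MM2 = 815

# Transistor counts quoted in the article.
WSE_TRANSISTORS = 1.2e12
PREVIOUS_RECORD_TRANSISTORS = 21.1e9

# Ratio of the two areas: the figure Feldman quotes as "56.7 times larger".
area_ratio = WSE_AREA_MM2 / PREVIOUS_RECORD_MM2

# The transistor count grows by a similar factor.
transistor_ratio = WSE_TRANSISTORS / PREVIOUS_RECORD_TRANSISTORS

print(f"area: {area_ratio:.1f}x, transistors: {transistor_ratio:.1f}x")
# prints "area: 56.7x, transistors: 56.9x"
```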

“Artificial intelligence work is one of the fastest-growing compute workloads,” Feldman said. “Between 2013 and 2018, it grew at a rate of more than 300,000 times. That means every 3.5 months, the amount of work done with this workload doubled.”
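The two figures in Feldman’s quote can be checked against each other. Assuming steady exponential growth (a simplification), a 300,000-fold increase takes log2(300,000), or about 18.2 doublings, and at one doubling every 3.5 months that works out to roughly five years. The sketch below only reproduces that arithmetic from the quoted numbers.

```python
import math

GROWTH_FACTOR = 300_000      # "more than 300,000 times" (from the quote)
DOUBLING_TIME_MONTHS = 3.5   # "every 3.5 months" (from the quote)

# Number of doublings needed to reach the growth factor: log2(300,000).
doublings = math.log2(GROWTH_FACTOR)

# Time those doublings take at the quoted rate, assuming steady exponential growth.
months = doublings * DOUBLING_TIME_MONTHS
years = months / 12

print(f"{doublings:.1f} doublings over {months:.0f} months (~{years:.1f} years)")
# prints "18.2 doublings over 64 months (~5.3 years)"
```

Roughly five years lines up with the 2013 to 2018 window Feldman describes, so the growth factor and the doubling time are consistent with each other.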

This is where the need for bigger chips comes into play. A bigger chip can hold more compute cores and more on-chip memory, so it can process more information more quickly. That, in turn, means users can get answers to computationally heavy problems in less time.

“The WSE contains 78 times as many compute cores; it [has] 3,000 times more high speed, on-chip memory, 10,000 times more memory bandwidth, and 33 times more fabric bandwidth than today’s leading GPU,” Feldman explained. “This means that the WSE can do more calculations, more efficiently, and dramatically reduce the time it takes to train an A.I. model. For the researcher and product developer in A.I., faster time to train means higher experimental throughput with more data: less time to a better solution.”

Unsurprisingly, a computer chip the size of a freaking tablet isn’t one that’s intended for home use. Instead, it’s intended to be used in data centers where much of the heavy-duty processing behind today’s cloud-based A.I. tools is carried out. There’s no official word on customers, but it would seem likely that firms such as Facebook, Amazon, Baidu, and others will be keen to put it through its paces.

No performance benchmarks have been released yet. However, if the chip lives up to its promises, it could help drive A.I. innovation in the weeks, months, and even years to come.